Streamlining Your Kubernetes Development Environment: A Comprehensive Guide

Kubernetes has changed how modern applications are built, deployed, and scaled. Its complexity, however, can make managing a Kubernetes development environment feel overwhelming. From setting up containers to keeping development consistent with production, there are several challenges to overcome. A cloud-based environment can ease some of them by offering better scalability, simpler dependency management, and a more consistent experience across cloud providers. Whichever setup you choose, a streamlined and efficient Kubernetes development environment is crucial for any development team.

This guide covers how to set up and optimize a Kubernetes development environment. Whether you’re new to Kubernetes or refining an existing setup, it offers practical insights, tools, and best practices to help you develop, test, and deploy Kubernetes applications quickly. Let’s dive into the essential components and strategies that make Kubernetes development more productive.

Understanding Kubernetes Development Environments

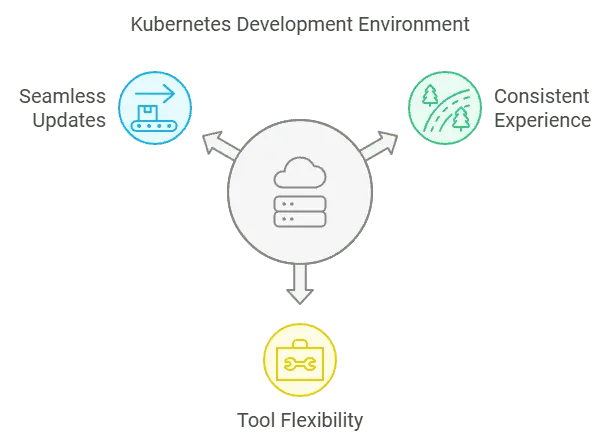

A Kubernetes development environment is a specialized setup that empowers developers to work efficiently with Kubernetes. It includes the essential tools, configurations, and processes needed to build, deploy, test, and debug applications running inside containers on a Kubernetes cluster. The primary advantage of a Kubernetes development environment is that it provides a consistent development experience. Whether developers are working locally, in the cloud, or on different team setups, the environment ensures a uniform experience across all stages of development. This consistency eliminates many headaches associated with discrepancies between local development and production environments, enabling smoother transitions and reducing unexpected errors. In such an environment, developers can install and configure a variety of tools and frameworks, from container runtimes to security policies and monitoring systems. This flexibility ensures that the environment can be tailored to meet a project’s unique needs while maintaining security and up-to-date software versions. Additionally, the ability to update tools and configurations seamlessly as the project evolves ensures that your development workflow remains efficient and aligned with current best practices in Kubernetes development.

Setting Up a Local Kubernetes Development Environment

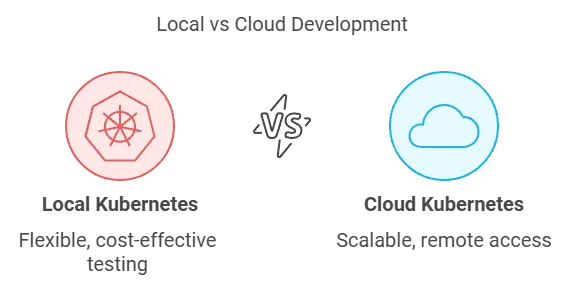

Running a Kubernetes development environment locally offers significant flexibility and control, since it uses your own computer’s resources. It allows developers to build, test, and run containerized microservices without relying on cloud infrastructure, making it an ideal choice for early-stage development and testing. That said, replicating a full Kubernetes-based application in a local dev environment can be challenging, which is where the tools below help.

Benefits of a Local Kubernetes Environment

A local cluster provides a powerful way to simulate Kubernetes clusters and workflows. It enables developers to work within a familiar ecosystem, rapidly iterate on code, and troubleshoot in real time—all without incurring the costs or delays associated with cloud-based environments. This setup is beneficial for:

- Faster Feedback Loops: Since everything runs locally, developers can quickly deploy and test changes, improving the development cycle’s overall efficiency.

- Cost Efficiency: By avoiding cloud infrastructure during early development stages, teams can save on resources and operational costs.

Local environments also enable developers to work offline, making it convenient for testing on the go or in areas with limited internet access or connectivity.

Popular Tools for Running Local Kubernetes Clusters

Several tools allow developers to easily create local Kubernetes clusters, each with strengths depending on the use case. Some popular tools include:

- Minikube: One of the most well-known options, Minikube provides a simple way to run a single-node Kubernetes cluster on a local machine. It is compatible with multiple operating systems and supports various container runtimes.

- Kind (Kubernetes in Docker): Kind runs Kubernetes clusters inside Docker containers. It’s lightweight and a great option for running multiple clusters or testing different versions of Kubernetes.

- MicroK8s: Backed by Canonical, MicroK8s is a lightweight, production-grade Kubernetes that runs on Linux and other platforms. It’s ideal for developers who want a zero-configuration, low-overhead Kubernetes solution.

- K3s: A lightweight version of Kubernetes designed for resource-constrained environments, K3s is often used for IoT or edge computing. It is also popular for developers looking to run Kubernetes on a smaller footprint.

Each tool abstracts much of the complexity of setting up a Kubernetes cluster or local container, allowing developers to focus on building their applications rather than configuring infrastructure. With these tools, you can deploy multiple clusters or microservices locally in isolated containers, simulating real-world production environments for testing purposes. Using a local Kubernetes setup, you can confidently develop and test your applications before deploying them to production, ensuring a smoother transition and fewer issues.
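As a rough illustration of how little configuration these tools need, the sketch below builds a multi-node cluster definition for Kind. The `kind_cluster_config` helper and the worker count are assumptions made for this example; the `kind.x-k8s.io/v1alpha4` config format and the `kind create cluster --config` command come from Kind itself.

```python
import json


def kind_cluster_config(workers: int = 2) -> dict:
    """Build a Kind cluster config with one control-plane node and N workers."""
    if workers < 0:
        raise ValueError("workers must be non-negative")
    nodes = [{"role": "control-plane"}]
    nodes += [{"role": "worker"} for _ in range(workers)]
    return {
        "kind": "Cluster",
        "apiVersion": "kind.x-k8s.io/v1alpha4",
        "nodes": nodes,
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be saved to a file and
    # passed to `kind create cluster --config <file>`.
    print(json.dumps(kind_cluster_config(workers=2), indent=2))
```

Generating the file from code is optional; a hand-written YAML file with the same fields works just as well. The point is that a three-node local cluster is only a few lines of configuration.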

Managing Multiple Environments in Kubernetes

Managing multiple Kubernetes environments, such as development, staging, and production, comes with unique challenges. Because Kubernetes runs on all major cloud providers, teams can get a consistent development experience across platforms like AWS, Azure, and Google Cloud, but only if each environment is managed carefully. Platform engineers must ensure that each environment operates smoothly while maintaining consistency and preventing conflicts that can arise during deployment, maintenance, and scaling. Understanding and addressing these challenges is crucial to ensure stability, security, and compliance across all environments.

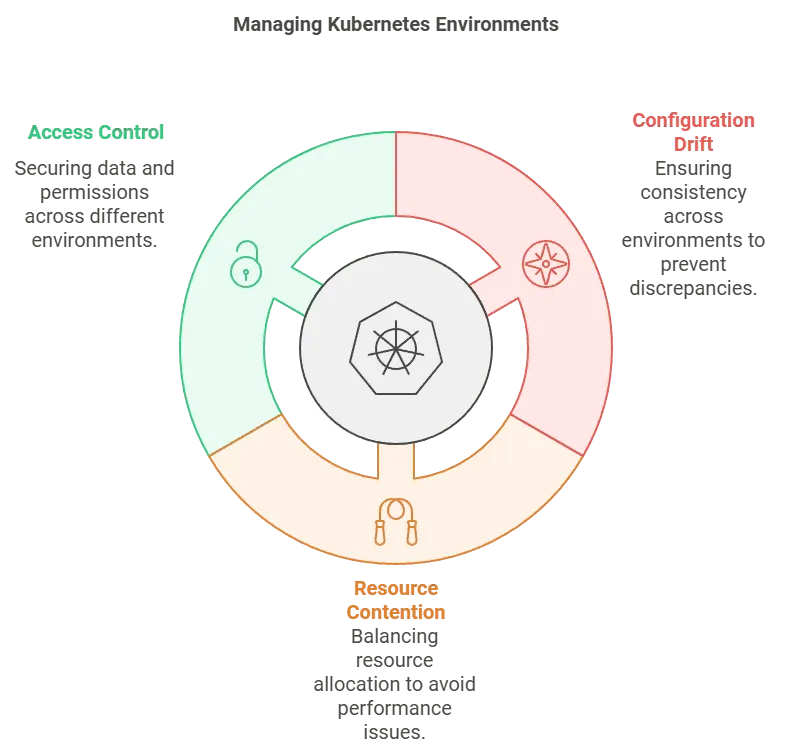

Challenges of Configuration Drift

One of the most common challenges when managing multiple Kubernetes environments is configuration drift. This occurs when discrepancies arise between environments due to manual changes, misconfigurations, or inconsistencies introduced during deployment. Even minor differences between configuration files or resource definitions can lead to bugs and unexpected behavior in production. To mitigate configuration drift:

- Version control your configurations: Keep manifests in Git and manage them with tools like Helm or Kustomize, ideally through a GitOps workflow, so that configuration changes stay consistent across environments.

- Automate deployments: Minimize manual intervention by automating the deployment process with CI/CD pipelines to ensure that changes are applied uniformly.

Preventing configuration drift ensures that environments stay in sync, reducing the likelihood of unexpected issues when transitioning from development to production.
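One way to picture drift detection is as a diff between the configuration stored in version control and the configuration actually running. The following is a minimal sketch, assuming both have already been loaded into Python dictionaries; the `flatten` and `find_drift` helpers and the sample values are hypothetical, and GitOps tools perform a far more thorough comparison.

```python
def flatten(config: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into {"a.b.c": value} pairs for easy comparison."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def find_drift(expected: dict, actual: dict) -> dict:
    """Return {path: (expected_value, actual_value)} for every differing key.

    A key missing on one side is reported with None on that side.
    """
    exp, act = flatten(expected), flatten(actual)
    return {
        path: (exp.get(path), act.get(path))
        for path in sorted(set(exp) | set(act))
        if exp.get(path) != act.get(path)
    }


if __name__ == "__main__":
    in_git = {"spec": {"replicas": 3, "image": "app:1.4.0"}}    # hypothetical
    in_cluster = {"spec": {"replicas": 5, "image": "app:1.4.0"}}  # hypothetical
    print(find_drift(in_git, in_cluster))
```

Here someone scaled the workload by hand, so the replica count no longer matches what is in version control. A report like this can fail a pipeline or trigger a re-sync.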

Addressing Resource Contention and Access Control

Resource contention can become a significant concern when managing multiple environments on a single Kubernetes cluster. Development, staging, and production workloads may compete for the same CPU, memory, or storage resources, leading to performance degradation and system instability. To handle resource contention effectively:

- Namespace isolation: Kubernetes namespaces can be used to separate resources for different environments logically. This helps ensure resource consumption is limited to specific workloads within each environment.

- Resource quotas and limits: Enforcing resource quotas ensures that no single environment can monopolize shared cluster resources, maintaining balanced performance across all environments.
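To make namespace isolation and quotas concrete, here is a small sketch that generates a `Namespace` and a `ResourceQuota` manifest per environment. The environment names and the CPU and memory budgets in `ENV_BUDGETS` are illustrative assumptions, and for brevity the same figure is used for both requests and limits.

```python
import json


def namespace(name: str) -> dict:
    """Manifest for a namespace that holds one environment's workloads."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def resource_quota(ns: str, cpu: str, memory: str) -> dict:
    """Cap the total CPU and memory that pods in `ns` may request or use."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{ns}-quota", "namespace": ns},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
            }
        },
    }


# Hypothetical per-environment budgets: (CPU cores, memory).
ENV_BUDGETS = {"dev": ("2", "4Gi"), "staging": ("4", "8Gi")}


def manifests_for(budgets: dict) -> list:
    """Return a Namespace plus ResourceQuota manifest for each environment."""
    out = []
    for env, (cpu, memory) in budgets.items():
        out.append(namespace(env))
        out.append(resource_quota(env, cpu, memory))
    return out


if __name__ == "__main__":
    print(json.dumps(manifests_for(ENV_BUDGETS), indent=2))
```

Once a quota like this is applied, pods whose combined requests would exceed the namespace budget are rejected at creation time, so a busy development namespace cannot starve staging.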

Additionally, managing access control and securing sensitive data across environments can be complex. Each environment may require different network access permissions and policies for developers, testers, and operators. To manage access control:

- Role-Based Access Control (RBAC): Implement RBAC to define precise roles and permissions for users and services across different environments.

- Secrets management: Securely manage sensitive information such as API keys and database credentials using Kubernetes Secrets or third-party vault tools like HashiCorp Vault.
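As an illustration of RBAC scoped to one environment, the sketch below builds a read-only `Role` and a `RoleBinding` that grants it to a single user. The role name `pod-reader`, the user `jane`, and the `staging` namespace are hypothetical; the manifest fields follow the `rbac.authorization.k8s.io/v1` API.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(ns: str, name: str = "pod-reader") -> dict:
    """Role that can view pods and their logs in one namespace, nothing else."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": ns},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role(ns: str, role_name: str, user: str) -> dict:
    """RoleBinding that grants `role_name` to `user` within `ns` only."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": ns},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    role = read_only_role("staging")
    binding = bind_role("staging", role["metadata"]["name"], "jane")
    print(json.dumps([role, binding], indent=2))
```

Because both objects are namespaced, the same user can hold broader permissions in a development namespace and read-only access in staging.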

Consistent Deployments and Compliance Across Environments

Another challenge is ensuring the consistent and controlled deployment of application versions across environments. Each environment may require different application configurations, and managing rollbacks in case of issues is critical to maintaining operational stability. To manage deployments consistently:

- Blue-green or canary deployments: Blue-green deployments switch traffic between two complete versions of an application, while canary deployments roll out a change incrementally to a small share of traffic. Both keep the older version available for a smooth rollback if needed.

- Automated testing: Integrating automated testing in CI/CD pipelines ensures that applications are thoroughly tested across multiple environments before reaching production.
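A simple form of canary release runs the stable and canary versions as separate Deployments behind one Service, so the share of traffic each receives roughly follows its share of pods. The sketch below computes that replica split; the `canary_split` helper is hypothetical, and service meshes or ingress controllers can offer finer-grained, weight-based routing.

```python
import math


def canary_split(total_replicas: int, canary_percent: float) -> tuple[int, int]:
    """Return (stable, canary) replica counts for a replica-ratio canary.

    The canary count is rounded up so that any non-zero percentage
    yields at least one canary pod.
    """
    if total_replicas < 1:
        raise ValueError("total_replicas must be at least 1")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    canary = math.ceil(total_replicas * canary_percent / 100)
    return total_replicas - canary, canary


if __name__ == "__main__":
    for percent in (10, 25, 50, 100):
        stable, canary = canary_split(10, percent)
        print(f"{percent}% canary: stable={stable}, canary={canary}")
```

Raising the percentage step by step, and running automated tests between steps, gives a controlled rollout; dropping it to zero is the rollback.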

Lastly, meeting compliance requirements across different environments can be challenging, especially in highly regulated industries. Different environments might require varying levels of auditing, logging, and data encryption. To stay compliant:

- Audit logging and monitoring: Implement audit logs and monitoring solutions to maintain visibility into what changes are made and when they occur, ensuring compliance with industry regulations.

- Environment-specific compliance policies: Tailor compliance controls and security policies to fit the specific needs of each environment while maintaining central oversight.

Managing multiple Kubernetes environments requires careful planning and using automated tools and best practices to ensure consistency, security, and compliance across all stages of the application lifecycle.

Kubernetes Cluster Management

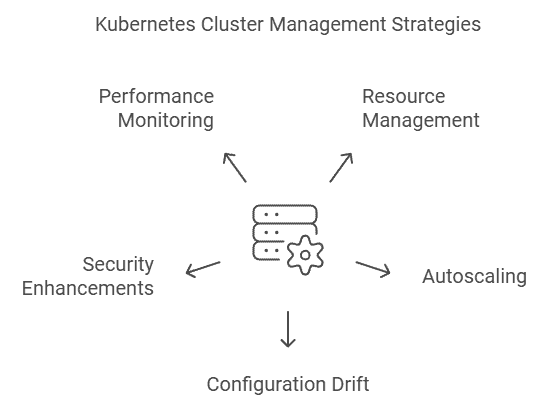

Managing Kubernetes clusters is crucial for maintaining smooth operation across multiple environments, such as development, staging, and production clusters. This involves addressing common challenges like resource contention, configuration drift, and security concerns while optimizing performance through resource management strategies.

Resource Management and Autoscaling

One key strategy is setting resource limits for each environment within the cluster to prevent resource contention. This ensures CPU, memory, and storage are utilized efficiently, especially in multi-environment setups. In addition to resource limits, implementing autoscaling allows Kubernetes to adjust the number of application replicas based on workload requirements. Autoscaling can be handled through:

- Horizontal Pod Autoscaling (HPA), which adds or removes pod replicas based on observed metrics such as CPU utilization.

- Cluster Autoscaler, which adjusts the size of the cluster by adding or removing nodes.

Together, these tools help manage peak demand without over-provisioning resources, balancing cost and performance.

Beyond scaling, the practices described earlier apply at the cluster level too. Automating deployments and keeping Helm charts or manifests under version control with a GitOps workflow guards against configuration drift. Role-Based Access Control (RBAC) and secure handling of sensitive data with Kubernetes Secrets strengthen security. Monitoring tools such as Prometheus and Grafana provide insight into cluster performance, enabling timely intervention to maintain stability across all environments. By combining these strategies, platform engineers can maintain a scalable, secure, and efficient Kubernetes cluster.
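To make the Horizontal Pod Autoscaler's behavior more concrete, the sketch below implements the core scaling rule from the Kubernetes documentation: desired replicas equal the current replica count multiplied by the ratio of the current metric to the target metric, rounded up. The function name and the example numbers are assumptions for illustration. This is a simplified model that leaves out details such as stabilization windows and the handling of pods that are not yet ready.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current / target)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

With four pods at 90% average CPU and a 60% target, the rule asks for six pods. The Cluster Autoscaler then adds nodes only if those extra pods cannot be scheduled on existing capacity.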

Development Environment vs. Production Environment

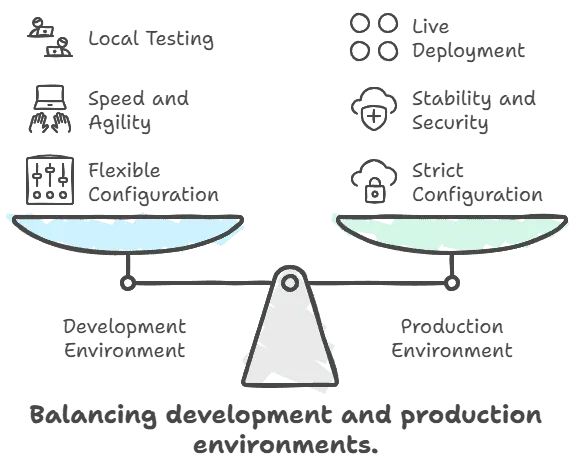

In software development, distinguishing between development and production environments is essential for ensuring a smooth and error-free application lifecycle. Both environments serve different purposes and are configured accordingly to meet their specific needs.

Development Environment

A development environment is primarily used for building, testing, and debugging applications. It is a safe space where developers can experiment with code, test new features, and troubleshoot issues without affecting end users. The configuration in a development environment is often more flexible and less stringent than in production, as the primary focus is speed and agility to support rapid iteration. For Kubernetes, local development environments can simulate a production setup on a developer’s local machine. Tools like Minikube or Kind allow developers to spin up local Kubernetes clusters, providing an isolated environment to run containerized applications and perform testing before pushing changes to a remote cluster.

Production Environment

In contrast, a production environment is where the application runs in a live, user-facing setting. This environment is optimized for stability, performance, and security, as any issue can directly affect users. Configuration in production environments is often stricter to ensure high availability, data integrity, and adherence to compliance standards. While the development and production environments may differ in their configurations, local Kubernetes environments enable developers to closely replicate production settings for testing. This approach helps catch potential issues early, allowing for smoother deployments and fewer surprises when applications go live on a remote Kubernetes cluster. By mirroring production conditions locally, developers can better ensure that their applications function as expected in real-world scenarios.

Best Practices for Kubernetes Development

When it comes to Kubernetes development, adhering to best practices can significantly enhance your workflow and ensure a smooth, efficient process. Here are some essential practices to consider:

- Use a Consistent Development Environment: Consistency is crucial for reducing errors and improving collaboration among team members. Whether you opt for a local Kubernetes development environment or a cloud-based setup, ensuring that everyone on the team works within the same environment can streamline development processes and minimize discrepancies. Tools like Minikube or Kind can help create a uniform local Kubernetes environment, while cloud-based solutions offer scalability and remote access.

- Use Version Control: Version control is indispensable in managing code changes and maintaining the integrity of your project. Tools like Git allow you to track changes, collaborate with team members, and revert to previous versions if needed. This practice is especially important in Kubernetes development, where configuration files and resource definitions are frequently updated.

- Use a CI/CD Pipeline: Implementing a Continuous Integration/Continuous Deployment (CI/CD) pipeline can automate the build, test, and deployment processes. This automation reduces the risk of human error, speeds up the development cycle, and ensures that code changes are consistently tested and deployed. Tools like Jenkins, GitLab CI, and CircleCI can help set up reliable CI/CD pipelines for your Kubernetes environment.

- Use Kubernetes Resources Efficiently: Efficient use of Kubernetes resources such as pods, services, and deployments is essential for optimizing performance and avoiding waste. Properly configuring resource requests and limits, using autoscaling features, and regularly monitoring resource usage can help maintain a balanced and efficient Kubernetes environment.

- Monitor and Log: Monitoring and logging are critical for identifying and troubleshooting issues in your Kubernetes environment. Tools like Prometheus and Grafana provide real-time insights into the health and performance of your clusters, while logging solutions such as the Elasticsearch, Fluentd, and Kibana (EFK) stack help capture and analyze log data. Regular monitoring and logging ensure you can quickly detect and resolve issues before they impact your applications.

- Use Security Best Practices: Security should be a top priority in any Kubernetes development project. Implementing network policies, managing secrets securely, and using Role-Based Access Control (RBAC) are essential practices for protecting your environment. Regularly updating your Kubernetes components and applying security patches also help mitigate vulnerabilities.

- Test Thoroughly: Comprehensive testing is vital for ensuring the reliability and performance of your applications. Kubernetes testing frameworks like kube-test and integration with CI/CD pipelines can help automate and streamline the testing process. Thorough testing ensures that your applications are production-ready and can handle real-world scenarios.

By following these best practices, you can create a robust and efficient Kubernetes development environment that supports rapid iteration, collaboration, and high-quality application delivery.
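Several of these practices can be enforced automatically. As one example, the sketch below is a small check that could run in a CI/CD pipeline to flag containers in a Deployment that lack resource requests or limits. The `containers_missing_resources` helper and the sample Deployment are hypothetical, and the manifest is assumed to be already parsed into a dictionary.

```python
def containers_missing_resources(deployment: dict) -> list[str]:
    """Return names of containers that lack resource requests or limits."""
    containers = deployment["spec"]["template"]["spec"]["containers"]
    missing = []
    for container in containers:
        resources = container.get("resources", {})
        if not resources.get("requests") or not resources.get("limits"):
            missing.append(container["name"])
    return missing


# Hypothetical Deployment, trimmed to the fields the check reads.
SAMPLE_DEPLOYMENT = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "template": {
            "spec": {
                "containers": [
                    {
                        "name": "app",
                        "image": "example/app:1.0",
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"cpu": "500m", "memory": "512Mi"},
                        },
                    },
                    # No resources block, so this container is flagged.
                    {"name": "sidecar", "image": "example/sidecar:1.0"},
                ]
            }
        }
    },
}

if __name__ == "__main__":
    print(containers_missing_resources(SAMPLE_DEPLOYMENT))
```

Failing the build when this list is non-empty keeps unbounded containers out of shared clusters. Policy engines offer the same kind of guardrail at admission time.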

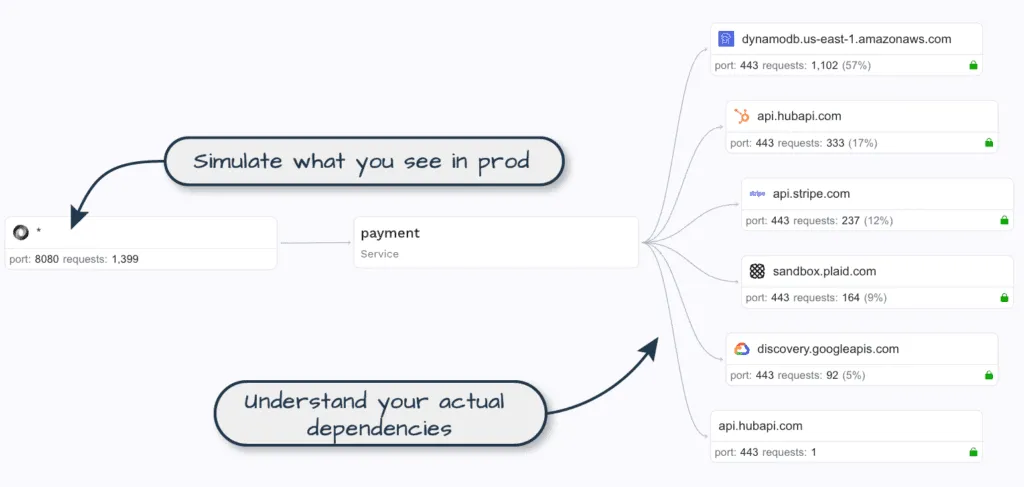

Using Speedscale to Efficiently Create Local Kubernetes Development Environments

Speedscale is a powerful tool that can significantly enhance the creation and management of local Kubernetes development cluster environments. It allows developers to simulate production traffic and workloads in a local setup, helping to identify performance bottlenecks and issues before they occur in a live environment. With Speedscale, you can capture real production traffic, replay it in your local Kubernetes cluster, and observe your application’s behavior under real-world conditions. This simulation capability makes it easier to test and optimize applications during development, ensuring they are production-ready. By using Speedscale in your local Kubernetes development environment, you can replicate production-like scenarios, debug potential issues, and validate application performance, all without needing access to a remote production cluster first. This leads to faster iteration cycles and more efficient development, ultimately reducing the time and cost associated with finding and fixing issues after deployment.

Conclusion

Local Kubernetes development environments enable developers to build, test, and deploy applications efficiently within Kubernetes clusters. By setting up a local Kubernetes development environment, developers can replicate production-like conditions on their own machines, allowing for thorough testing and debugging before moving to a live environment. This setup provides flexibility and consistency, ensuring smoother transitions between development and production.

Effectively managing multiple environments within Kubernetes requires careful planning and adopting best practices. This includes setting resource limits, implementing security controls, and using GitOps and CI/CD pipelines to maintain consistency across environments. Additionally, monitoring and centralized management systems help streamline operations, reduce manual errors, and optimize performance.