“How do we treat these 3 things as a unit?” We set out to start a company, and I wanted to address the problem of the triple threat: testing, dependencies and data.

As a presales engineer, I was constantly surprised by how “wild west” software delivery could be. I had the opportunity to visit a myriad of companies large and small and observe how they worked. Most companies didn’t intend for their application delivery teams to get that way. I could empathize. Change management is hard. Conway’s Law is real. So is the struggle between a Center of ___ (fill in the blank) and an organic “use what you like” approach per team. Sound familiar?

You can take comfort in the fact that everyone generally has the same problems. If I had a nickel for every time someone said “we’re different,” when I could already tell them how their story ends… Sure, some verticals truly are unique. But the big rocks are the same.

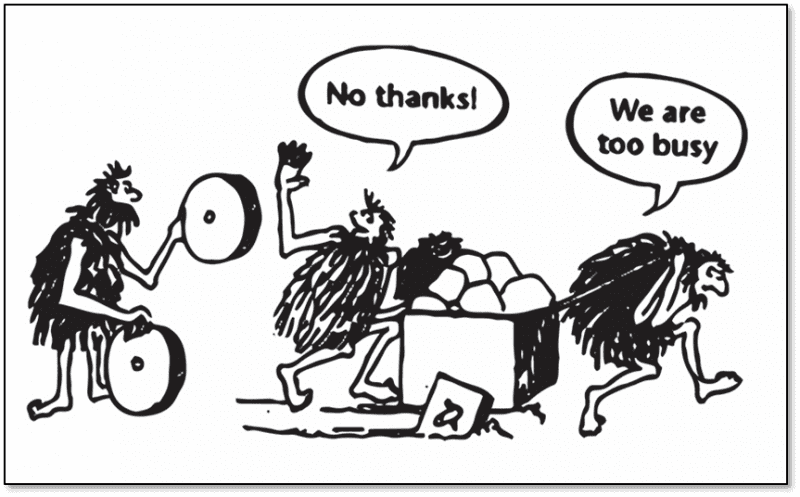

During my 10 years in the DevOps tools and digital transformation consulting space, only a handful of companies stood out for a truly streamlined software delivery methodology. While their organizational layout, how they empowered the right change agents, and how long it took them to get there all varied, one thing was constant. They invested the time and energy to develop automation, SOPs and self-service to neutralize the Triple Threat. A LOT of time and energy. It usually took 2 years or so. It’s no wonder this gets ignored in favor of new features. We’ve short-circuited this timeline down to about 15 minutes.

I also observed many companies getting discouraged partway through addressing the Triple Threat. Enterprises would usually attack Test Automation first. The promise of tests that you write once and use anywhere was so prominent that millions of dollars were poured into automation frameworks and defect management suites. The problem was, these tests weren’t truly reusable since they worked at the UI layer (the part that probably changes the most), and there were never enough environments to run these tests in. After a while, the folks who had gotten burned were so gun-shy that anything in the test tools space might as well have ceased to exist. That’s why we decided to focus on systems beneath the UI layer.

I’ve encountered fewer folks attempting to address the data problem, since security can bring the ax down on that quite quickly. But data scrubbing, virtualization, and ETL tooling are alive and well. Data is a critical part of understanding what your service will do in production, as it contains the context and use case that determine which branch of code is exercised. Without proper test data, verifying the quality of your next build is often pointless. Let Speedscale listen to your traffic (or feed us a tcpdump).

Of the three Threats, environments and dependencies are the one where I most often see mature usage guidelines, processes and understanding. Servers and VMs are often a fixed capex that is easily measured, and bean counters have developed budgets that often dictate how accessible they are to engineering groups. Of course, now with Cloud, Containers and Functions, that’s all changing. With Containers standardizing the landscape, consistently simulating environments for everyone is within reach.

Orchestrating the Triple Threat in unison is the secret to streamlined software delivery. There wasn’t a tool that bundled the three together as a single artifact until now. If you have reams upon reams of test scripts, but the proper backend systems aren’t ready to run them, they’re unusable. If you have plenty of cloud capacity or brilliant IaC automation, but you rely on a 3rd party to do manual testing, you’re similarly constrained. Or perhaps you have sufficient test scripts and environments, but the ETL processes to replicate, scrub and stage test data take a long time between executions. Or maybe you’re not even sure of the right data scenarios to run. Or worse still, you can only test the happy path because the applications rarely misbehave in the lower environments the way they error out in production.

That’s why, amidst mounting pressure to release new features rapidly and stay ahead of competitors, most companies test in production and rely on monitoring tools to tell them what’s broken. By focusing all their effort on making rollouts and rollbacks as fast as possible, they can now patch or pull out builds at the first sign of trouble. Therein lies the dilemma.

Fast rollouts and rollbacks were popularized by the unicorns, who typically have a huge pool of users they can test their updates on. If a few folks have a bad experience, the unicorns are none the worse for wear (they have many millions of users). However, certain industries such as fintech, insurance, and retail cannot risk customers having transactions fail. They’re heavily regulated, or have critical processes, or have razor-thin margins where every visitor could generate revenue.

But we seem to think that the faster we release and roll back, or the better we can do canary deploys, or the more intelligent we are about which aspects of the code we release first, the more stable and robust our software will become. We can’t drive southbound and expect to end up in Canada. We can’t do arm workouts every day and expect to squat more. We can’t have an unhealthy diet every day and expect to look like Thor (the in-shape one, not the drunk one). You get where I’m going with this? No amount of rollbacks, canary deploys, or blue/green improves our chances of code working right out of the gate, with minimal disruption, every release. We’re working on the wrong muscle.

In fact, according to the DORA State of DevOps Report 2019, the failure rate of releases was the same for Medium, High and Elite performers: up to 15%. The average rate didn’t trend lower for Elite performers vs Medium. Why is that? They were classified as Elite, High or Medium by how often they release and how quickly they are able to react to issues. I’m not downplaying that capability, but we can’t expect production outages and defects to decrease if all we ever address is how quickly we react. Software quality has to be proactive.

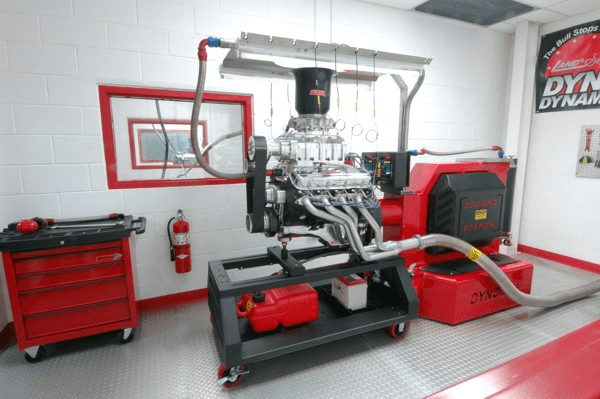

So how do we do that? Ideally, we would figure out a way to understand how our service is used and capture the transactions, the scenarios, and thereby the data. We would also systematically control variables and apply the scientific method to test our code before release. In electrical engineering, chipsets are put into test harnesses to verify functionality independent of other components. Engines are put on dynamometers to confirm power output before installation into vehicles. Software is one of the few industries where we put everything together, turn it on and hope it works. What if plane manufacturers put a plane together for the first time and told you to take off? (I once heard a colleague muse they didn’t think they’d even get down the runway.)

To isolate your new build, you need to simulate the inbound transactions that exercise your service. You also need stable, predictable backends to respond to your dependency calls. If only there were a way to ephemerally provision and simulate these things, all with production-like test data, every time you commit…
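To make the idea concrete, here is a minimal sketch of that kind of isolation in Python. It is not Speedscale’s API, and every name in it (`Recorded`, `StubBackend`, `price_service`, `replay`) is hypothetical and made up for illustration. It assumes you already have captured inbound requests paired with the responses seen at capture time, plus canned answers for the one backend the service calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Recorded:
    """One captured transaction (hypothetical shape, for illustration only)."""
    request: Dict[str, str]      # inbound call captured from traffic
    expected: Dict[str, object]  # response observed at capture time


class StubBackend:
    """Stable, predictable stand-in for a real dependency."""

    def __init__(self, canned: Dict[str, Dict[str, object]]):
        self._canned = canned
        self.calls: List[str] = []  # record of every dependency call made

    def get(self, key: str) -> Dict[str, object]:
        self.calls.append(key)
        return self._canned[key]


def price_service(request: Dict[str, str], backend: StubBackend) -> Dict[str, object]:
    """The 'new build' under test: a toy service with one dependency call."""
    item = backend.get(f"inventory/{request['sku']}")
    qty = int(request["qty"])
    if qty > item["stock"]:
        return {"status": 409, "error": "insufficient stock"}
    return {"status": 200, "total_cents": qty * item["price_cents"]}


def replay(
    handler: Callable[[Dict[str, str], StubBackend], Dict[str, object]],
    traffic: List[Recorded],
    backend: StubBackend,
) -> List[Tuple[Recorded, Dict[str, object]]]:
    """Replay each recorded request and collect responses that differ."""
    failures = []
    for rec in traffic:
        actual = handler(rec.request, backend)
        if actual != rec.expected:
            failures.append((rec, actual))
    return failures


# Canned dependency data and recorded traffic, both invented for this sketch.
BACKEND = StubBackend({"inventory/A1": {"stock": 3, "price_cents": 250}})
TRAFFIC = [
    Recorded({"sku": "A1", "qty": "2"}, {"status": 200, "total_cents": 500}),
    Recorded({"sku": "A1", "qty": "5"}, {"status": 409, "error": "insufficient stock"}),
]

failures = replay(price_service, TRAFFIC, BACKEND)
print(f"{len(TRAFFIC) - len(failures)}/{len(TRAFFIC)} recorded transactions matched")
```

Because the inbound side and the backend side are both controlled, the only variable left is the build itself, and the second recorded transaction exercises an unhappy path that a lower environment might never produce on its own.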

---

Many businesses struggle to discover problems with their cloud services before they impact customers. For developers, writing tests is manual and time-intensive. Speedscale allows you to stress test your cloud services with real-world scenarios. Get confidence in your releases without testing slowing you down. If you would like more information, schedule a demo today!