TL;DR

Today’s fast-paced development environments require new approaches to testing. Enter continuous performance testing. Unlike traditional performance testing, automated continuous performance testing is part of every build, monitoring the application continuously under increased load. With continuous performance tests, organizations with lots of users can expect to improve the customer experience, prevent major outages, and find and resolve issues faster. Companies should already have a CI pipeline in place to get started with continuous performance testing. Tools like Speedscale, with its traffic replication capabilities, can help get continuous performance tests off the ground faster and with less effort.

Overview

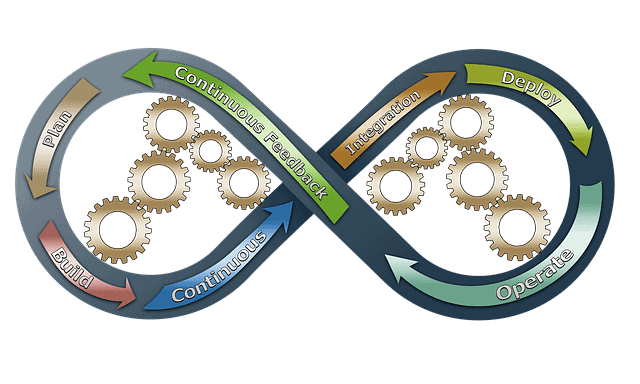

One of the guiding principles of DevOps is “Test early, test often.” Most developers know to get early feedback from automated functional tests, like unit and integration tests, but it’s equally important to check non-functional requirements such as security, scalability, and performance. Performance requirements like response time, stability, and speed are especially important, which means you need automated performance testing as part of your test process.

As markets become more saturated, companies no longer have the luxury of postponing performance testing until the end of the development process, when all features are complete. Continuous performance tests have been gaining popularity as more teams realize their impact. Performance testing tools play a central role here: they help predict system capacity, improve software quality, and adapt to the needs of specific projects.

This article will explain continuous performance testing and why it’s important. It will also share best practices and what you need to get started.

What is continuous performance testing?

Continuous performance testing is the practice of repeatedly measuring how an application performs under increased load. It typically runs on every code commit, which removes the need for manual performance tests that are time-consuming and expensive. The key word is “continuous”: as the application evolves, you add new test cases and update old ones so that your performance testing suite keeps capturing all relevant scenarios.

Are there different types of performance tests?

There are several types of performance testing, and one of the most common is load testing. Load tests simulate multiple users accessing the software simultaneously. The goal is to find out how many requests the application can process without jeopardizing the system’s stability; stress testing is closely related but deliberately pushes the system past its expected limits to see where and how it breaks. Load tests are often automated with scripts, which gives better control over execution and more repeatable results. Traditional performance testing is typically executed after the development cycle, whereas continuous performance testing is integrated throughout development for ongoing evaluation and prompt issue detection.
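
To make the idea concrete, here is a minimal load-test sketch in Python using only the standard library. It is an illustration, not how Speedscale, JMeter, or any other tool works internally: each simulated user is a thread that sends requests back to back and records one `(latency, succeeded)` sample per request. The helper names (`http_get`, `simulate_user`, `run_load_test`) are hypothetical, and the request function is passed in so the same harness can target any endpoint.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# One sample per request: (latency in seconds, whether the request succeeded)
Sample = Tuple[float, bool]


def http_get(url: str, timeout: float = 5.0) -> Callable[[], bool]:
    """Build a request function that GETs `url` and reports success."""
    def send() -> bool:
        # urlopen raises HTTPError for 4xx/5xx responses; simulate_user
        # records any exception as a failed request.
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
            return 200 <= resp.status < 300
    return send


def simulate_user(send_request: Callable[[], bool], n_requests: int) -> List[Sample]:
    """One virtual user sending requests back to back and timing each one."""
    samples: List[Sample] = []
    for _ in range(n_requests):
        start = time.perf_counter()
        try:
            ok = bool(send_request())
        except Exception:
            ok = False
        samples.append((time.perf_counter() - start, ok))
    return samples


def run_load_test(send_request: Callable[[], bool], users: int,
                  requests_per_user: int) -> List[Sample]:
    """Run `users` virtual users concurrently and collect all their samples."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(simulate_user, send_request, requests_per_user)
                   for _ in range(users)]
        samples: List[Sample] = []
        for future in futures:
            samples.extend(future.result())
    return samples
```

For example, `run_load_test(http_get("http://localhost:8080/health"), users=20, requests_per_user=50)` would send load from 20 concurrent users to a hypothetical local endpoint. Real load-testing tools add ramp-up, pacing, and distributed load generation on top of this basic loop.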

Who can benefit from continuous performance testing?

This depends upon the company’s needs, but generally speaking, some companies will find continuous performance tests more valuable than others. These companies typically have:

- A large user base

- High volumes of regular interaction and/or seasonal spikes in traffic

- Invested a lot of time or money into a project that has a long lifespan

- A large staff

Each company will need something different from a continuous performance testing system.

DevOps teams that work on small applications that rarely see high spikes in traffic probably don’t need to invest in continuous performance testing. For example, if the application is a browser game where user actions are reasonably predictable and external inputs are moderate, there’s probably no reason to introduce continuous performance tests; the effort would likely cost more productivity than it saves. Instead, teams in this position can run periodic end-to-end tests, which give them enough data to make meaningful decisions about architecture and about the trade-offs between application size and heavy workloads.

Benefits & challenges

With continuous performance testing, companies can:

- Prevent major outages

- Improve the customer experience

- Ensure that performance service-level agreements (SLAs) are met

- Ensure that performance doesn’t slip over time

- Find and resolve application performance problems faster

Because it runs inside the CI/CD pipeline, continuous performance testing gives teams a structured, repeatable way to catch performance problems as the software evolves, which ultimately improves both software performance and user experience.

However, some challenges can arise:

- It’s not always easy to automate

- It can be difficult to find test cases

- You can’t load-test everything

- You need to keep your tests up to date

- It isn’t always possible to test on live systems

How is it different from traditional performance testing methods?

When you mention performance testing, most developers think of the following steps:

- Identify all the critical features that you want to test

- Spend weeks working on performance test scripts

- Perform the tests and analyze pages of performance test results

This approach may have worked well when most applications were developed using the waterfall model, but the waterfall model is now mostly a thing of the past. Software development has become more agile, and as more teams use DevOps practices to develop and deploy their applications, the role of testing has changed: testing is now part of the development cycle itself.

Traditional performance tests are typically run after release cycles or at certain milestones rather than as part of the CI process. Automated continuous performance testing, by contrast, can run often and as part of every build, improving the user experience by identifying performance issues before they reach users.

Traditional load testing focuses on how a specific software version performs in peak load situations. Continuous load testing ensures that every new application version performs well in peak load conditions.

If a new version of the application doesn’t meet the necessary performance criteria, the whole build fails. You then inspect the latest code changes to find and fix the performance issues. In this way, automated continuous performance testing validates code in CI/CD pipelines and ensures that application performance doesn’t regress. The goal is not just to find problems early but to fix them quickly, before they affect customers or users.
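
The pass/fail decision can be as simple as a script that compares a run’s results against a performance budget and returns a non-zero exit code, which most CI systems treat as a failed step. The sketch below assumes samples in a hypothetical `(latency_seconds, succeeded)` form, a 95th-percentile latency budget, and a maximum error rate; the function names and thresholds are illustrative, not part of any specific tool.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, bool]  # (latency in seconds, request succeeded)


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate_build(samples: List[Sample], p95_budget_s: float,
                   max_error_rate: float) -> List[str]:
    """Return budget violations; an empty list means the build may pass."""
    if not samples:
        return ["no samples were collected"]
    violations = []
    error_rate = sum(1 for _, ok in samples if not ok) / len(samples)
    if error_rate > max_error_rate:
        violations.append(f"error rate {error_rate:.2%} exceeds {max_error_rate:.2%}")
    # Latency budget is checked over every request, failed or not.
    p95 = percentile([latency for latency, _ in samples], 95)
    if p95 > p95_budget_s:
        violations.append(f"p95 latency {p95 * 1000:.0f} ms exceeds {p95_budget_s * 1000:.0f} ms")
    return violations


def gate(samples: List[Sample], p95_budget_s: float, max_error_rate: float) -> int:
    """Print any violations and return a process exit code for the CI job."""
    violations = evaluate_build(samples, p95_budget_s, max_error_rate)
    for violation in violations:
        print(f"PERFORMANCE BUDGET FAILED: {violation}")
    return 1 if violations else 0
```

In a CI job you would end the script with something like `sys.exit(gate(samples, p95_budget_s=0.5, max_error_rate=0.01))`. The 500 ms and 1% limits are placeholders; the real values should come from your own SLAs.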

Although many companies see continuous load testing as a great idea, some factors make it difficult to adopt, such as a shortage of production-like environments for different API versions and a lack of test data covering the permutations of real traffic.

Why you need continuous performance testing

According to the Akamai Performance report, 49% of customers expect webpages to fully load within two seconds. 18% of customers expect instant page load. In this day and age, users believe that waiting more than two to three seconds for a page to load is unacceptable. When they encounter issues, they’ll either a) leave or b) be the first to let you know about any sluggishness or time-out issues. Laggy sites lead to poor experiences, which can lead to customer churn and revenue loss. So, it’s important to measure performance now, not after a major outage.

It is crucial to integrate continuous performance testing into your software development process to meet user expectations consistently.

With continuous testing, you monitor how well your application responds to load on an ongoing basis. As a result, you can catch performance-related problems early and fix them before they become significant issues.

To summarize the need for continuous performance testing:

- It ensures that your application is ready for production

- It allows you to identify performance bottlenecks

- It helps to detect bugs

- It helps to detect performance regressions

- It allows you to compare the performance of different releases

Performance testing should be continuous so that an issue does not go unnoticed for too long and hurt the user experience. Continuous testing shows you how your servers handle load over time, giving you insight into their capacity limits and bottlenecks.

How to start continuous performance testing

To begin continuous performance testing, first make sure that you have a continuous integration pipeline, or CI pipeline, in place.

From there, take a structured approach: define performance goals, select appropriate testing tools, and automate performance tests within your CI/CD pipeline. The steps below outline how to do this.

Step 1: Collect information from the business side

Consider the number of requests you must be able to handle to maintain your current business SLAs. Also, identify the critical features of your application: is it login, order processing, or checkout?
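
Business figures usually arrive as transactions per hour, so it helps to translate them into the request rate and concurrency a test has to generate. This is a small worked sketch with made-up numbers: the peak checkout volume, requests per checkout, 1.5× headroom factor, and think time are all assumptions to replace with your own data. The concurrency estimate uses the interactive response time law, N = X × (R + Z), where X is throughput, R is response time, and Z is user think time.

```python
def required_rps(peak_transactions_per_hour: float,
                 requests_per_transaction: float,
                 headroom: float = 1.5) -> float:
    """Requests per second the system must sustain at peak, with a safety margin.

    The default 1.5x headroom is an illustrative assumption, not a standard.
    """
    return peak_transactions_per_hour * requests_per_transaction / 3600 * headroom


def concurrent_users(rps: float, response_time_s: float, think_time_s: float) -> float:
    """Interactive response time law: N = X * (R + Z)."""
    return rps * (response_time_s + think_time_s)
```

With a hypothetical 36,000 checkouts per hour at peak and 8 API requests per checkout, `required_rps(36_000, 8, headroom=1.0)` gives 80 requests per second, and the default headroom raises that to 120. Assuming 0.5 s response time and 2.5 s think time, `concurrent_users(120, 0.5, 2.5)` estimates 360 simultaneous users.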

Step 2: Start writing performance tests

The most straightforward approach is to start by testing the API layer. You can use tools like Speedscale, BlazeMeter, ReadyAPI from SmartBear, and Apache JMeter. There are plenty of tutorials on installing these tools, so this step should not be overly complicated. Also, remember to store the tests in your main repository and treat them as first-class citizens, which means paying the same attention to their quality as you do to production code.

Step 3: Select your use cases

The next step is to identify the scenarios you want to test. Here are some tips on how to write the best scenarios:

- Cover the most critical areas of the system first

- Base your test scenarios on realistic user behavior

- Test for the end-to-end user experience

- Use concrete numbers instead of vague terms like “a heavy load”
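
One way to keep scenarios concrete is to write them down as data with explicit numbers and to weight them by how often users actually perform each action. The sketch below uses hypothetical scenario names, traffic shares, user counts, and latency budgets; in practice the shares would come from production traffic. Validation rejects any scenario whose numbers are zero or negative.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Scenario:
    """A test scenario stated in concrete numbers rather than 'a heavy load'."""
    name: str
    traffic_share: float   # fraction of real traffic, e.g. measured from production logs
    virtual_users: int     # concurrent simulated users
    duration_s: int        # how long to sustain the load, in seconds
    p95_budget_ms: int     # acceptable 95th-percentile response time

    def __post_init__(self) -> None:
        numbers = (self.traffic_share, self.virtual_users, self.duration_s, self.p95_budget_ms)
        if any(n <= 0 for n in numbers):
            raise ValueError(f"scenario {self.name!r} needs positive, concrete numbers")


# Hypothetical scenarios: critical paths first, weighted by assumed real usage.
SCENARIOS = [
    Scenario("login", traffic_share=0.2, virtual_users=50, duration_s=600, p95_budget_ms=300),
    Scenario("browse_catalog", traffic_share=0.6, virtual_users=150, duration_s=600, p95_budget_ms=400),
    Scenario("checkout", traffic_share=0.2, virtual_users=50, duration_s=600, p95_budget_ms=800),
]


def sample_scenarios(scenarios: Sequence[Scenario], n: int,
                     seed: Optional[int] = None) -> List[Scenario]:
    """Pick n scenarios in proportion to their share of real traffic."""
    rng = random.Random(seed)
    return rng.choices(list(scenarios), weights=[s.traffic_share for s in scenarios], k=n)
```

Sampling with `random.choices` keeps the generated mix close to the assumed real usage, and a seed makes a run reproducible when you need to compare two builds on the same sequence of actions.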

Step 4: Collect results and fix issues

Finally, make sure to collect all your test results into a report that will be easy to read and understand. It’s vital to have meaningful reports so that you can plan your next steps well. The cycle of continuous performance testing doesn’t end once you collect the results though. You need to add all the performance issues to your product backlog with a plan to fix them accordingly. The results of the initial performance test represent the baseline for all future tests.
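
Treating the first run as a baseline can be sketched as storing its metrics in a file and comparing every later run against it. This sketch assumes metrics are kept as a flat JSON object of numbers, that metrics are “lower is better” unless listed in `higher_is_better` (as throughput would be), and that a 10% tolerance is acceptable; these choices and the function names are illustrative assumptions.

```python
import json
from pathlib import Path
from typing import Dict, FrozenSet, Tuple

Metrics = Dict[str, float]


def save_baseline(path: Path, metrics: Metrics) -> None:
    """Store a run's metrics so later runs can be compared against it."""
    path.write_text(json.dumps(metrics, indent=2, sort_keys=True))


def load_baseline(path: Path) -> Metrics:
    return json.loads(path.read_text())


def find_regressions(baseline: Metrics, current: Metrics, tolerance: float = 0.10,
                     higher_is_better: FrozenSet[str] = frozenset()
                     ) -> Dict[str, Tuple[float, float]]:
    """Return {metric: (baseline, current)} for metrics worse than `tolerance` allows."""
    regressions = {}
    for name, base in baseline.items():
        if name not in current:
            continue  # metric not measured in this run
        cur = current[name]
        # Positive "worse" means the metric moved in the bad direction.
        worse = base - cur if name in higher_is_better else cur - base
        if base == 0:
            regressed = worse > 0  # e.g. error rate rising from 0%: any increase counts
        else:
            regressed = worse / abs(base) > tolerance
        if regressed:
            regressions[name] = (base, cur)
    return regressions
```

For example, with a baseline p95 of 200 ms, a new run at 230 ms is a 15% regression and would be flagged, while a throughput drop from 100 to 95 requests per second stays within tolerance when `rps` is listed in `higher_is_better`.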

Ideally, you would perform the above process in a test environment that closely mimics the production environment, which is where tools that replicate production traffic, like Speedscale, come in.

However, a lot of companies still test in production because building test automation is time consuming, and maintaining prod-like test environments is expensive. Plus, it’s difficult to collect, understand, and replicate accurate data.

Performance Testing Metrics and Visualization

Understanding key metrics

In performance testing, metrics are the cornerstone for evaluating how well an application performs under various conditions. Key metrics such as response time, throughput, error rate, and resource utilization provide a comprehensive view of an application’s performance.

- Response Time: This metric measures the time it takes for the application to respond to user requests. A lower response time indicates a more responsive application, which is crucial for user satisfaction.

- Throughput: Throughput measures the number of requests the application processes within a specific time frame, typically expressed as requests per second. Higher throughput means the application can handle more user interactions in the same amount of time, which is vital for scalability.

- Error Rate: This metric tracks the share of requests that fail during testing. A high error rate can indicate underlying issues that must be addressed to ensure reliability.

- Resource Utilization: This measures the amount of system resources, such as CPU, memory, and disk space, used by the application. Efficient resource utilization is essential for maintaining performance without overloading the system.
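
The first three metrics can be computed directly from the raw samples of a test run. This sketch assumes hypothetical `(latency_seconds, succeeded)` samples plus the wall-clock duration of the run; it uses the nearest-rank method for the 95th percentile and counts every request, failed or not, toward response time. Resource utilization is left out because it usually comes from host or container monitoring rather than from the load generator.

```python
import math
import statistics
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Sample = Tuple[float, bool]  # (latency in seconds, request succeeded)


@dataclass(frozen=True)
class Summary:
    avg_response_ms: float
    p95_response_ms: float
    throughput_rps: float
    error_rate: float


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples: List[Sample], wall_clock_s: float) -> Summary:
    """Turn raw samples from one run into response-time, throughput, and error-rate metrics."""
    if not samples or wall_clock_s <= 0:
        raise ValueError("need at least one sample and a positive test duration")
    latencies = [latency for latency, _ in samples]
    failures = sum(1 for _, ok in samples if not ok)
    return Summary(
        avg_response_ms=statistics.fmean(latencies) * 1000,
        p95_response_ms=percentile(latencies, 95) * 1000,
        throughput_rps=len(samples) / wall_clock_s,
        error_rate=failures / len(samples),
    )
```

Reporting a high percentile alongside the average matters because a handful of very slow requests can hide behind a healthy-looking mean.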

Understanding these metrics allows teams to pinpoint performance bottlenecks and make informed decisions to optimize their applications.

Tools for visualization

Visualizing performance testing metrics is crucial for identifying trends and patterns that might not be immediately apparent from raw data. Tools like Grafana, Kibana, and Tableau offer powerful capabilities for this purpose, allowing technical and non-technical team members to visualize the results of continuous performance tests.

Grafana: Known for its interactive dashboards, Grafana allows teams to visualize performance data in real-time. Its flexibility in integrating with various data sources makes it a popular choice for continuous performance testing.

Kibana: Often used in conjunction with Elasticsearch, Kibana provides robust visualization options and real-time data streaming. Its ability to handle large datasets makes it ideal for continuously monitoring performance metrics.

Tableau: Tableau excels in creating detailed and interactive charts and graphs. Its user-friendly interface makes it accessible for team members who may not be as technically inclined, ensuring that performance data is understandable across the board.

These tools make it easier for the whole team to visualize data and make data-driven decisions about improving application performance.

Automated Testing and CI/CD Pipelines

Integrating performance tests in CI/CD

Integrating performance tests into CI/CD pipelines is a game-changer for maintaining application performance throughout the development cycle. By embedding automated performance testing into the CI/CD process, teams can catch performance issues early and address them before they escalate.

Tools like Jenkins, Travis CI, and CircleCI let you automate performance tests so that they run with every code commit. This continuous approach means performance tests are not an afterthought but an integral part of the development process.

Jenkins: Jenkins is a widely used CI tool that supports a plethora of plugins for performance testing. By integrating tools like JMeter or Gatling, Jenkins can automate performance tests and provide immediate feedback on performance issues.

Travis CI: Known for its simplicity and ease of use, Travis CI can be configured to run performance tests as part of the build process. This ensures that any performance regressions are caught early.

CircleCI: CircleCI offers robust support for automated performance testing. Its ability to parallelize tests can significantly reduce the time required to run comprehensive performance tests.

By integrating performance tests into CI/CD pipelines, teams can ensure that their applications meet performance requirements consistently. This approach reduces the risk of performance-related issues in production and allows for more frequent and reliable testing, ultimately leading to a more performant and stable application.

Get ahead with continuous performance testing

Development teams should always be looking for ways to improve their processes. If you want to stay ahead of performance issues, provide an optimal user experience, and outsmart your competition, continuous performance testing is the way forward. Early application performance monitoring (before new features and products go live) saves time during the development lifecycle. With continuous performance testing, you prevent poor customer experiences with future releases. When you continuously test your infrastructure, you ensure that its performance does not degrade over time. Your team should have a goal and track results with metrics to ensure that you are making progress.