In the ever-evolving landscape of software development, every new feature, code change, or update carries inherent risks, ranging from breaking features that previously worked to introducing entirely new bugs. Although the severity of these risks varies (a bug may cause severe financial losses or just move a button 2 pixels), it’s nevertheless crucial for your development process to reduce risk as much as possible.

For decades, engineers have tried to mitigate these risks with various testing methodologies. Simple approaches like unit testing have evolved into more advanced ones like test automation and preview environments, and production traffic replication is the latest addition.

Reducing Technical Risks

From a high-level perspective, production traffic replication (PTR) helps mitigate two types of risk: technical and user-related. This section focuses on reducing technical risks like performance and scaling issues, as they’re often the root cause of user-related issues.

Catching Resource Consumption and Latency Issues

PTR captures traffic from any environment and replays it in any other environment, typically from production into a development or staging environment. Tools like Speedscale accomplish this in Kubernetes by installing an Operator into the cluster, which instructs applications running a sidecar proxy to capture their traffic and send it to Speedscale’s servers.
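Conceptually, the capture side of PTR sits in the request path and records each exchange without altering it. The Python sketch below illustrates that idea in-process. It is not how Speedscale's sidecar or Operator is implemented, and every class and field name (`RecordingSidecar`, `CapturedExchange`, and so on) is hypothetical. A real sidecar proxies network traffic and ships its recordings to a backend rather than keeping them in a list.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Request:
    method: str
    path: str
    headers: dict[str, str] = field(default_factory=dict)
    body: str = ""


@dataclass
class Response:
    status: int
    body: str = ""


@dataclass
class CapturedExchange:
    started_at: float  # clock reading when the request arrived (seconds)
    request: Request
    response: Response
    latency: float     # seconds the application took to respond


Handler = Callable[[Request], Response]


class RecordingSidecar:
    """Hypothetical stand-in for a capturing sidecar proxy: forwards every
    request to the application unchanged and records the full exchange."""

    def __init__(self, app: Handler, clock: Callable[[], float] = time.monotonic):
        self.app = app
        self.clock = clock
        self.captured: list[CapturedExchange] = []

    def handle(self, request: Request) -> Response:
        started_at = self.clock()
        response = self.app(request)  # forward to the real application
        latency = self.clock() - started_at
        self.captured.append(CapturedExchange(started_at, request, response, latency))
        return response  # the client sees exactly what the application returned
```

Because the recording includes timestamps and full request data, it later supports replaying traffic with realistic timing, distribution, and payloads.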

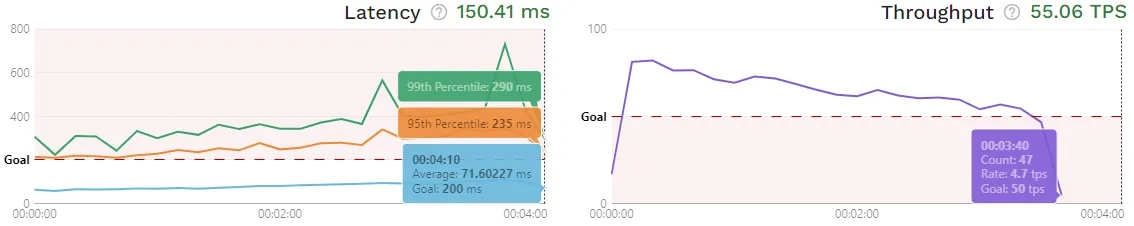

By continuously and automatically capturing production traffic, engineers can more easily validate new code before it reaches production, catching problems like excessive resource consumption and latency. For example, take a look at a report generated from a traffic replay in the interactive demo. You’ll see how the latency and throughput graphs have red dotted lines defining the goals of the test.

Although not present in this report, goals can also be set on memory and CPU. Setting resource goals can be a tedious and surprisingly complex task. Performance tests are typically driven by manual configuration or scripting, and because script-driven tests can never replicate production exactly, it’s very difficult to determine what resource usage to expect.

With a snapshot of captured production traffic alongside recorded metrics, you can see exactly what resource utilization looks like under a specific load. This lets you set resource goals accurately, within a very small margin of error, instead of guessing or estimating.
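One way to turn a baseline of recorded production metrics into explicit goals is to take a high percentile of each metric, add a tolerance margin, and then check a replay's metrics against those goals. The sketch below assumes that policy (p95 plus a 10% margin by default) purely for illustration. The function names are hypothetical, and the right percentile and margin depend on your application.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    if not samples:
        raise ValueError("percentile of an empty sample set")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def derive_goal(baseline: list[float], pct: float = 95, margin: float = 0.10) -> float:
    """Goal = baseline percentile plus a tolerance margin (an assumed policy)."""
    return percentile(baseline, pct) * (1 + margin)


def check_goals(goals: dict[str, float], replay: dict[str, list[float]],
                pct: float = 95) -> dict[str, bool]:
    """For each metric (latency, CPU, memory...), True if the replay's
    percentile stays within the goal derived from production."""
    return {metric: percentile(replay[metric], pct) <= goal
            for metric, goal in goals.items()}
```

The same functions work for latency, CPU, or memory, as long as the baseline and replay samples use the same units.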

However, while PTR takes care of many things, there are still some essentials to consider when running your own tests. For instance, are you using high-performing nodes in production but standard nodes in testing? How often are you running these tests? How are you integrating them into the development process as a whole? Are you using latency optimization methods in production like CDNs or load balancers, and do they need to be part of your testing as well?

Production traffic replication provides a solid foundation of realism and automation for your performance tests, one you can then build on.

Validating Real-Life User Behavior

Every scripting-based approach has two major shortcomings: timing and distribution.

Imagine you’ve created an online chess platform where one request directly influences the next, like a move changing which subsequent moves are valid. In these cases, accurately replicating request timing during load generation is crucial, as failing to do so can have severe consequences.

For instance, improper timing could lead the API to accept an illegal move because it hasn’t yet processed the opponent’s previous move, leading you to release what you think is a functioning API. Conversely, the API may reject a legal move, sending engineering teams off to debug a non-existent issue, which can be very costly in engineering hours.
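Preserving order and timing is what a replayer has to get right here. The sketch below sends captured requests in their original order. It waits so that each request starts no earlier than its original offset from the first one. It is a simplified, sequential illustration with hypothetical names (`Captured`, `replay`). A production-grade replayer would also send requests concurrently to reproduce overlapping traffic.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Captured:
    offset: float  # seconds after the first captured request
    method: str
    path: str
    body: str


def replay(traffic: list[Captured],
           send: Callable[[Captured], None],
           clock: Callable[[], float] = time.monotonic,
           sleep: Callable[[float], None] = time.sleep) -> None:
    """Replay captured requests in their original order, never sending one
    earlier than its original offset from the start of the replay."""
    start = clock()
    for item in sorted(traffic, key=lambda c: c.offset):
        delay = start + item.offset - clock()
        if delay > 0:
            sleep(delay)  # keep the gap, e.g. between a /validate and the /execute that follows it
        send(item)
```

Injecting `clock` and `sleep` keeps the sketch testable without real waiting. In practice, `send` would issue the HTTP request to the environment under test.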

Additionally, an inaccurate distribution of requests can produce false positives or negatives. Imagine the chess platform has two endpoints: /validate for validating a move and /execute for entering it into the database, with /execute only being called if the move is valid. /validate normally consumes twice as much CPU as /execute, but a bug in the new code makes /validate consume 2.2 times as much.

Realistically, /validate will be called more often than /execute, as players will sometimes try invalid moves, i.e., the requests are unevenly distributed. For this example, let’s assume 10,000 requests over a period of 5 minutes, split 60/40 between /validate and /execute. Assuming /execute requires 1 CPU unit per request, usage with the old code looks like:

- 6,000 × 2 + 4,000 × 1 = 16,000 CPU units

Testing the new, buggy code with an even 50/50 distribution instead gives:

- 5,000 × 2.2 + 5,000 × 1 = 16,000 CPU units

A slight inaccuracy in the distribution is enough to hide a 10% CPU increase in one endpoint, because the API’s total CPU usage looks exactly the same as normal. Scripting can often provide useful insights, but only traffic-driven tests can provide accurate ones.
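The arithmetic above can be checked in a few lines of Python. `total_cpu` is a hypothetical helper. It applies the example's assumed costs (1 CPU unit per /execute call, and 2 or 2.2 units per /validate call) to a given request mix.

```python
def total_cpu(requests: int, validate_share: float, validate_cost: float,
              execute_cost: float = 1.0) -> float:
    """CPU units consumed by a mix of /validate and /execute calls."""
    validate_calls = round(requests * validate_share)
    execute_calls = requests - validate_calls
    return validate_calls * validate_cost + execute_calls * execute_cost


expected = total_cpu(10_000, 0.60, 2.0)  # production mix, old code
scripted = total_cpu(10_000, 0.50, 2.2)  # even mix, buggy code
replayed = total_cpu(10_000, 0.60, 2.2)  # production mix, buggy code

print(f"expected {expected:,.0f}, scripted {scripted:,.0f}, replayed {replayed:,.0f}")
# expected 16,000, scripted 16,000, replayed 17,200
```

Only the run that uses the production mix reveals the extra 1,200 CPU units.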

Preparing for Upcoming Changes or Events

Upcoming changes and events, such as product launches or marketing campaigns, can be stressful for engineering teams because they often lead to increased load on the application. Traditionally, preparing for them involves load and stress tests that validate the application’s ability to handle the increase in traffic, for example whether autoscaling reacts fast enough. But, as the previous section showed, any script-driven approach is limited in how realistic its results can be.

Properly preparing for these scenarios is crucial, as they often bring an influx of first-time visitors. An existing user may look past the occasional bug, whereas a first-time visitor will get a bad impression and likely leave the site, never to return. In other words, downtime caused by an influx of traffic can undo all the effort behind the product launch or marketing campaign.

That said, determining the maximum throughput of your application isn’t only useful for upcoming events. It also helps you better understand your application as a whole, likely leading to optimized resource limits and scaling rules.
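Finding the maximum throughput can be framed as a search over a traffic multiplier. You replay captured traffic at 1×, 2×, 4×, and so on until the application misses its goals, then narrow down the breaking point. In the sketch below, `passes` is a hypothetical callback that would run a replay at the given multiplier and report whether latency, error, and resource goals were met. Doubling followed by bisection is just one reasonable search strategy. It assumes that once a multiplier fails, every higher multiplier fails too.

```python
from typing import Callable


def find_max_multiplier(passes: Callable[[float], bool],
                        start: float = 1.0,
                        limit: float = 64.0,
                        tolerance: float = 0.25) -> float:
    """Largest load multiplier (relative to captured production traffic) for
    which `passes` returns True, to within `tolerance`. Returns 0.0 if even
    `start` fails. Each call to `passes` stands for one full replay run."""
    if not passes(start):
        return 0.0
    low = start                      # highest multiplier known to pass
    high = min(start * 2, limit)
    # Double the load until a run fails or the limit is reached.
    while high > low and passes(high):
        if high >= limit:
            return high
        low, high = high, min(high * 2, limit)
    # Bisect between the last passing and the first failing multiplier.
    while high - low > tolerance:
        mid = (low + high) / 2
        if passes(mid):
            low = mid
        else:
            high = mid
    return low
```

Since every probe is a full replay, a coarser `tolerance` trades precision for fewer test runs.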

Boosting Code Coverage and Reducing Exposure

Code coverage is a metric representing the percentage of your application’s code that is exercised by tests. High code coverage is generally desirable, as it reduces the likelihood of undetected bugs and performance issues. In essence, the previous sections describe how PTR helps increase code coverage, e.g. by testing unexpected user behavior, which is ultimately the key to reducing exposure to risks.

Note that code coverage isn’t merely about testing all endpoints, as each endpoint will likely have variations. A 2019 paper on code coverage at Google notes that “Google’s code coverage infrastructure measures line coverage…”, a common way of measuring coverage.

Every if-statement or other conditional also creates a new branch that requires testing. A common example is an Authorization header check that verifies access. The same paper on Google corroborates this: “For C++, Java, and Python, Google’s code coverage infrastructure also measures branch coverage…”. Achieving high code coverage requires careful consideration of not just which requests are sent but also what data they include, in order to trigger all possible code paths.
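To see why request data matters, consider a hypothetical handler that checks an Authorization header. The sketch records which branch each request takes, as a hand-rolled stand-in for a real coverage tool such as coverage.py. It then compares a scripted test that only sends valid tokens with a replayed mix that also contains missing and malformed headers.

```python
def get_game(headers: dict[str, str], hits: set[str]) -> int:
    """Hypothetical endpoint: returns an HTTP status and records the branch taken."""
    token = headers.get("Authorization")
    if token is None:
        hits.add("missing-token")
        return 401
    if not token.startswith("Bearer "):
        hits.add("malformed-token")
        return 400
    hits.add("authorized")
    return 200


ALL_BRANCHES = {"missing-token", "malformed-token", "authorized"}


def branch_coverage(requests: list[dict[str, str]]) -> float:
    """Fraction of the handler's branches exercised by a set of requests."""
    hits: set[str] = set()
    for headers in requests:
        get_game(headers, hits)
    return len(hits) / len(ALL_BRANCHES)


scripted = [{"Authorization": "Bearer abc"}] * 100
replayed = [{"Authorization": "Bearer abc"}, {}, {"Authorization": "Basic xyz"}]
print(f"scripted: {branch_coverage(scripted):.0%}, replayed: {branch_coverage(replayed):.0%}")
# scripted: 33%, replayed: 100%
```

A hundred identical scripted requests still cover only one branch. Three varied requests cover all of them.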

Although this is theoretically possible with a script-driven approach, it isn’t feasible for most teams given the time it would take. While many tools exist to achieve high test coverage in functional testing, production traffic replication is the first fully-fledged approach focused on bringing this to performance testing as well. Because every captured request includes its headers, parameters, and body, replaying it exercises the same code paths it triggered in production.

NOTE: When using production traffic in testing, it’s important to comply with any applicable regulations that govern user data, such as GDPR. Speedscale’s DLP feature can help scrub PII before it leaves your network.
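Speedscale’s DLP feature has its own configuration, which isn’t shown here. As a generic illustration of the idea only, the sketch below redacts sensitive headers and email addresses from a captured request before it would be sent anywhere. The header list and the email pattern are simplistic assumptions; real PII detection needs far broader rules.

```python
import re

# Assumed examples of sensitive data; real DLP rules cover much more.
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie"}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
REDACTED = "REDACTED"


def scrub(headers: dict[str, str], body: str) -> tuple[dict[str, str], str]:
    """Return copies of the headers and body with sensitive values replaced."""
    clean_headers = {
        name: REDACTED if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }
    clean_body = EMAIL.sub(REDACTED, body)
    return clean_headers, clean_body
```

Once tokens are redacted, replayed requests need valid test credentials in the target environment.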

Lastly, while PTR helps cover all real-life user behavior, it won’t help much when you’re developing a new endpoint that doesn’t receive production traffic yet. For this reason, you’ll likely want a tool that can also import something like Postman collections, so you can still use the same tool for all of your performance testing.
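As a rough illustration of such an import, the sketch below flattens a Postman collection (v2.1 JSON format) into a list of requests that a replay tool could send. It handles only common cases: nested folders, a `url` given either as a string or as an object with a `raw` field, enabled headers, and raw bodies. `PlannedRequest` and `load_postman_collection` are hypothetical names, not part of any specific tool’s API.

```python
import json
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class PlannedRequest:
    name: str
    method: str
    url: str
    headers: dict[str, str] = field(default_factory=dict)
    body: str = ""


def _flatten(items: list[dict], prefix: str = "") -> Iterator[tuple[str, object]]:
    """Yield (name, request) pairs, descending into folders."""
    for item in items:
        name = f"{prefix}{item.get('name', '')}"
        if "item" in item:  # a folder: recurse into its children
            yield from _flatten(item["item"], prefix=f"{name}/")
        elif "request" in item:
            yield name, item["request"]


def load_postman_collection(text: str) -> list[PlannedRequest]:
    collection = json.loads(text)
    planned = []
    for name, req in _flatten(collection.get("item", [])):
        if isinstance(req, str):  # a request may be given as a bare URL
            planned.append(PlannedRequest(name, "GET", req))
            continue
        url = req.get("url", "")
        if isinstance(url, dict):  # structured URL: use its raw form
            url = url.get("raw", "")
        headers = {h["key"]: h.get("value", "")
                   for h in req.get("header", []) if not h.get("disabled")}
        body = req.get("body") or {}
        raw = body.get("raw", "") if body.get("mode") == "raw" else ""
        planned.append(PlannedRequest(name, req.get("method", "GET"), url, headers, raw))
    return planned
```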

Mitigating User-Related Risks

Using production traffic replication to validate deployments with real-world data makes your tests accurate and gives you confidence when launching new features. Most importantly, it reduces overall exposure to risk. Altogether, you’ll be mitigating user-related risks like churn and lost revenue. On top of protecting revenue, fewer bugs mean fewer hours spent fixing them, leaving more time for developing new features and a bigger ROI on labor costs.

If you’re leading a business, hopefully this has provided some insights into how PTR may fit into your development process. From here you can explore further by looking at how PTR helps reduce the cost of performance testing. For the engineers—or leaders wanting to discuss further with engineers—you may be interested in how PTR aids in optimizing Kubernetes clusters.