Give AI Coding Tools Real Production Context

AI generates code fast—but it's never seen your production traffic. Proxymock captures real requests, validates AI changes, and mocks dependencies so generated code actually works.

brew install speedscale/tap/proxymock

# AI generates code, you validate against reality

cursor generate "add rate limiting to /api/orders"

🎯 Replaying 847 captured requests...

⚡ Comparing responses byte-by-byte

😑 Found 2 regressions in AI-generated code

POST /api/orders: missing "rate_limit_remaining" field

GET /api/orders/:id: latency +340% under load

Why Proxymock?

Validate AI-Generated Code

AI coding tools lack production context. Replay real traffic against AI changes to catch regressions before they ship.
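To make the idea concrete, here is a minimal sketch of one kind of regression check: finding fields that a recorded response had but a new response lacks. It is an illustration only, not Proxymock's implementation; the `missingFields` helper and the sample JSON bodies are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// missingFields returns the top-level JSON keys present in the recorded
// response body but absent from the candidate body, sorted for stable output.
// Hypothetical helper for illustration; not part of Proxymock.
func missingFields(recorded, candidate []byte) ([]string, error) {
	var rec, cand map[string]json.RawMessage
	if err := json.Unmarshal(recorded, &rec); err != nil {
		return nil, fmt.Errorf("recorded body: %w", err)
	}
	if err := json.Unmarshal(candidate, &cand); err != nil {
		return nil, fmt.Errorf("candidate body: %w", err)
	}
	var missing []string
	for key := range rec {
		if _, ok := cand[key]; !ok {
			missing = append(missing, key)
		}
	}
	sort.Strings(missing)
	return missing, nil
}

func main() {
	// Made-up bodies: what production returned vs. what the changed code returns.
	recorded := []byte(`{"id": 42, "status": "created", "rate_limit_remaining": 99}`)
	candidate := []byte(`{"id": 42, "status": "created"}`)

	missing, err := missingFields(recorded, candidate)
	if err != nil {
		panic(err)
	}
	for _, field := range missing {
		fmt.Printf("POST /api/orders: missing %q field\n", field)
	}
}
```

A real comparison would also look at status codes, headers, nested fields, and timing; this sketch covers only top-level keys.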

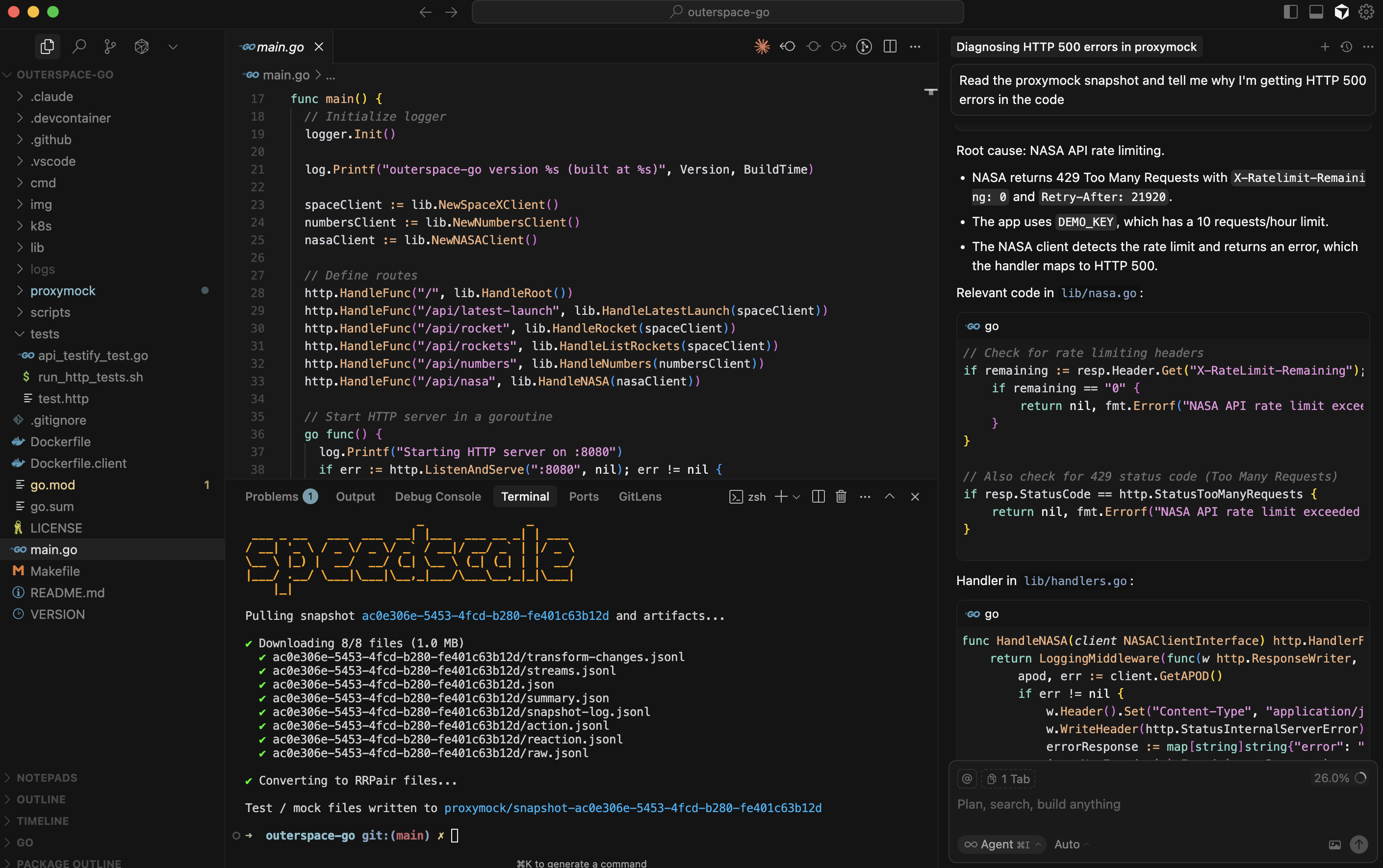

MCP for Claude, Cursor, Copilot

Give AI agents direct access to traffic snapshots and replay results via Model Context Protocol. The missing context, delivered.

Auto-generates Tests and Mocks

Stop writing test scripts. Generate comprehensive tests and realistic mocks from captured production traffic.

Capture from Anywhere

Record from Kubernetes, ECS, or your desktop. Replay anywhere—no expensive cloud replicas required.

CI Pipeline-Friendly

Block PRs that break against real traffic. Attach validation reports so reviewers see evidence, not just assertions.

PII Redaction Built-In

Automatically detect and redact sensitive data. Share traffic snapshots with AI tools without compliance risk.
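As a rough picture of what pattern-based redaction involves, the sketch below masks email addresses and card-like digit runs in a captured body. It assumes simple regular expressions are good enough for a demo; the patterns, the `redact` function, and the placeholder tokens are hypothetical and say nothing about how Proxymock detects sensitive data.

```go
package main

import (
	"fmt"
	"regexp"
)

var (
	// Loose email matcher, for illustration only.
	emailPattern = regexp.MustCompile(`[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}`)
	// 13 to 16 digits, optionally separated by single spaces or dashes.
	cardPattern = regexp.MustCompile(`\b\d(?:[ -]?\d){12,15}\b`)
)

// redact replaces matches of the patterns above with placeholder tokens.
// Hypothetical helper; not Proxymock's redaction logic.
func redact(body string) string {
	body = emailPattern.ReplaceAllString(body, "[REDACTED_EMAIL]")
	body = cardPattern.ReplaceAllString(body, "[REDACTED_CARD]")
	return body
}

func main() {
	// Made-up captured body containing sensitive values.
	captured := `{"email":"jane@example.com","card":"4111 1111 1111 1111","order":42}`
	fmt.Println(redact(captured))
}
```

Regex-only redaction misses plenty of cases (names, addresses, tokens in headers), which is one reason to rely on built-in detection rather than hand-rolled patterns.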

Recording

Gather real traffic from your application using an HTTP/SOCKS proxy, a reverse proxy, desktop or even capture. Give your AI coding tool real-world data to work with.

HTTP & gRPC

Capture REST and gRPC calls with headers and bodies preserved for replay.

Cloud Services

S3, Pub/Sub and other managed services are recorded for accurate mocks.

Databases

Postgres, MySQL and Redis interactions can be captured and analyzed.

Quickstart

brew install speedscale/tap/proxymock

export http_proxy=http://localhost:4140

export https_proxy=http://localhost:4140

proxymock record -- go run main.go

See the installation docs for more details and language specific instructions.

Testing & Mocking

Turn recordings into fast, deterministic tests and use production‑like mocks to develop without external dependencies.

Run tests

proxymock replay --test-against localhost:3000 --for 1m --vus 10

Regression, contract, and load testing from real traffic. Fire it up quickly from your terminal.

Start mock server

proxymock mock -- go run main.go

Realistic latency and errors; add transforms for chaos and data shaping.

The Missing Context for AI Agents

- 🎯 Give Claude Code, Cursor, and Copilot access to real traffic snapshots
- 🔄 AI agents can replay traffic, inspect responses, and validate changes
- 💸 No cloud costs: production context runs locally on your machine

AI Code Validation Workflow

From capture to confidence in four steps.

Capture Traffic

Record real API calls from staging or production

AI Generates Code

Cursor, Claude, or Copilot writes your changes

Replay & Compare

Validate AI code against captured traffic

Ship with Confidence

Fix regressions before they reach production

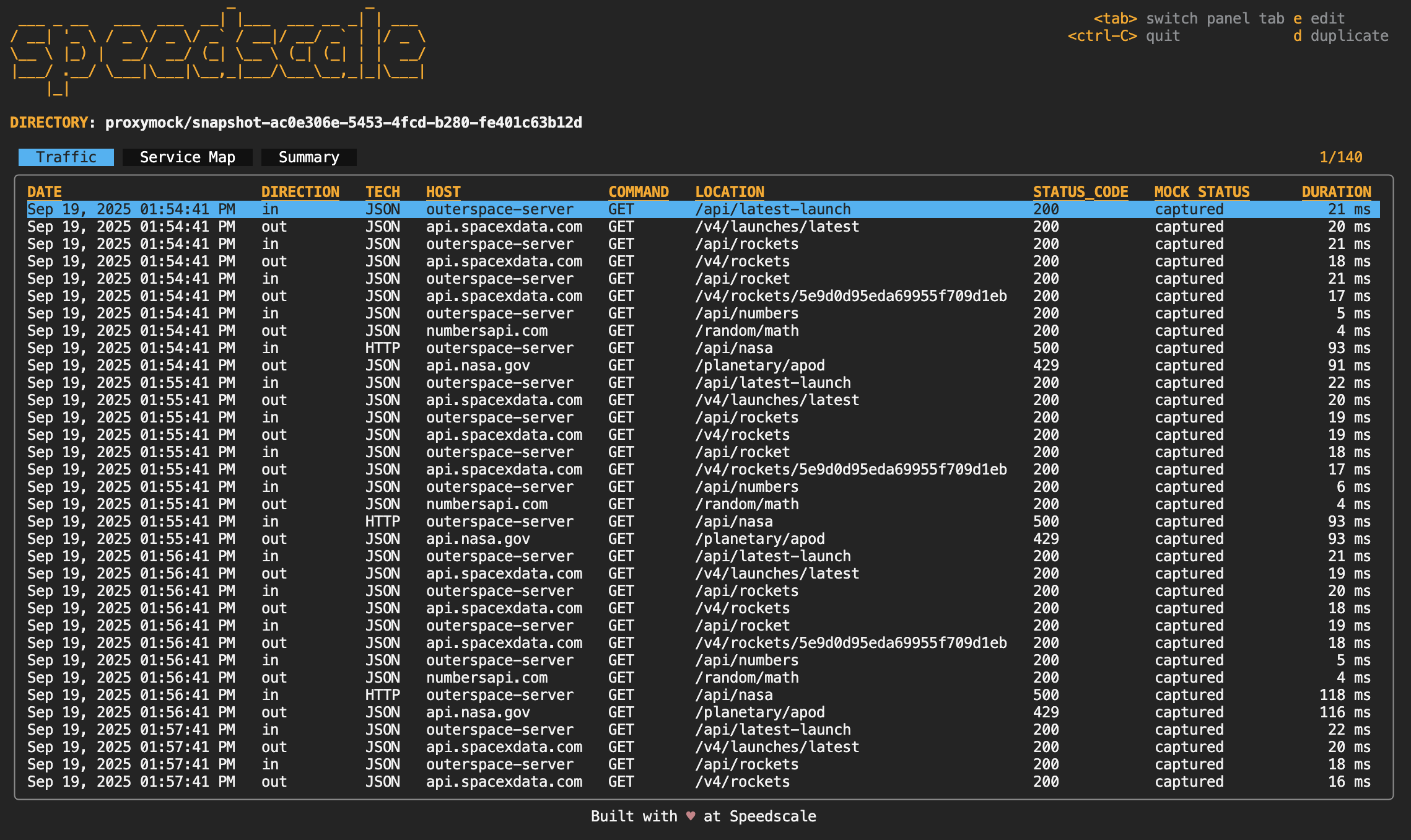

Deep Visibility

- 🛡️ Get visibility into full details of calls
- 🧰 See API, gRPC, GraphQL, DB queries, etc.
- 💸 Store traffic data as part of the repo

CI Pipeline

Add Proxymock to your pipeline to catch issues before deploy. See full examples in our CI/CD docs.

Start mock server

proxymock mock \
  --verbose \
  --in $PROXYMOCK_IN_DIR/ \
  --log-to proxymock_mock.log &

Replay and test

proxymock replay \
  --in "$PROXYMOCK_DIR" \
  --test-against localhost:$APP_PORT \
  --log-to $REPLAY_LOG_FILE \
  --fail-if "latency.max > 1500" \
  -- $APP_COMMAND