Generative AI can produce code faster than humans, and developers feel more productive with it integrated into their IDEs. That productivity is only real if CI/CD tests are solid and automated.

Without appropriate testing, you may hit production issues you haven’t seen before. According to the State of Software Delivery 2025 report, 67% of developers spend more time debugging and resolving security vulnerabilities in AI-generated code. That cancels out the efficiency gains they get from faster AI code generation.

To capture the benefits, you need a solid pipeline for testing AI code in CI/CD that automatically mitigates security risks and enforces code quality.

This guide covers how to build that pipeline, including best practices and the top tools to automate as much of CI/CD as possible.

Why Testing AI Code in CI/CD Matters

AI can generate code quickly and boost developer speed, but it’s also more prone to hallucinations and weak at handling edge cases. Adoption is high, with around 84% of developers using AI; however, trust is low, at only ~40%.

In a survey by Sebastian Cataldi, one developer noted, “I haven’t seen where it’s blatantly incorrect, but I’ve seen irrelevant suggestions.” Another admitted they trust only 80%.

This emphasizes the importance of testing AI code in CI/CD. Besides human oversight and code review tools, a CI/CD pipeline ensures that only reliable code reaches production.

Speed is one of the biggest promises of AI-driven development. But if you can’t automate code testing and quality checks in CI/CD, that promise breaks: teams spend the time they saved manually chasing and fixing AI errors.

Moreover, CI/CD is about automatically testing every change with each integration. That means developers get instant feedback on code quality and security the moment they commit. Instead of waiting until the end of the software development lifecycle, they can fix bugs immediately while the code is still fresh and the cost of fixing is low.

Even when AI produces correct logic, regressions are a risk. Manually reviewing every impacted file is impractical and time-consuming. Automated CI/CD pipelines handle this by checking the entire codebase on every commit and flagging broken functionality with error logs, allowing developers to respond quickly.

Performance testing can also be automated inside CI/CD. That ensures AI code not only compiles but also runs efficiently, uses memory effectively, and scales for production workloads.

Overall, CI/CD testing not only improves AI code efficiency and correctness, but it also reduces security risks by blocking anything that fails quality gates. This reduces deployment risks and enables the release of reliable software.

Common AI-Specific Code Issues to Catch Early

Hallucinations

AI models are built to always answer, even when they don’t know. Instead of saying “I don’t know,” they make up responses. That means when you ask for something new or specific, the model may hallucinate and invent things that are not present in its training data. The same thing happens in code: it references libraries and packages that look plausible but don’t actually exist.

Research from Joe Spracklen and colleagues analyzed 576,000 Python and JavaScript code samples from 16 commercial and open-source LLMs. The results showed that, on average, at least 5.2% of the packages recommended by commercial models and 21.7% of those recommended by open-source models were hallucinated.

For example, `pandas.summary` sounds like a convincing way to print a dataset summary, but it doesn’t exist. The real risk is that these hallucinations aren’t random: the same names recur across use cases and across different models.

So, a potential attacker could easily create malicious packages with these common hallucinated names. When an LLM picks them up and inserts them into your code, the attacker can access your data or inject vulnerabilities into your codebase.

Hardcoded credentials

AI sometimes generates code with API keys, passwords, or other sensitive information written directly into the source. If that code is leaked or pushed to a public repo, those secrets become an open invitation for attackers. The safer approach is to define clear rules that guide AI to reference environment variables instead. That keeps credentials out of source code and limits exposure.
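As an illustration of the environment-variable approach, here is a small sketch. The variable name `PAYMENTS_API_KEY` and the helper `load_api_key` are hypothetical; the point is only that the secret is read at runtime and the code fails loudly when it is missing.

```python
import os


def load_api_key(var_name: str = "PAYMENTS_API_KEY") -> str:
    """Read a secret from the environment and fail loudly if it is missing."""
    value = os.environ.get(var_name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {var_name}")
    return value


# Avoid: a literal secret assigned in source, e.g. API_KEY = "<real key here>"
# Prefer: api_key = load_api_key(), with the value injected by the CI system
# or a secrets manager at runtime.
```

Failing at startup when the variable is absent is a deliberate choice here: it surfaces a misconfigured environment immediately instead of at the first API call.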

Injection vulnerabilities

AI-generated code often skips thorough input validation. That gap creates an opening for injection attacks, where malicious input is used to run unintended commands, access sensitive data, or compromise backend systems. Without safeguards like parameterized queries or strict sanitization, attackers can turn these oversights into full system breaches.
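To make the difference concrete, the sketch below contrasts string concatenation with a parameterized query using Python’s built-in `sqlite3` module. The `users` table and the two helper functions are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(name: str):
    # Vulnerable: user input is concatenated into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    # Parameterized: the value is bound separately from the SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
print(len(find_user_unsafe(malicious)))  # 2: the injected OR clause matches every row
print(len(find_user_safe(malicious)))    # 0: the input is treated as a plain string
```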

Weak authentication

If you don’t explicitly set authentication rules in your prompt, AI can generate weak authentication snippets. For example, a weak identity check might let anyone in without properly validating credentials.

And even if the login works, the code might skip enforcing what that user is actually allowed to do. That’s how you end up with a regular user suddenly wielding admin-level powers, accessing sensitive operations or data, simply because no role checks were in place.
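A missing role check is easy to illustrate. The sketch below uses a hypothetical `require_role` decorator, `User` type, and `delete_account` operation; it is one possible shape for an authorization guard, not a replacement for a real framework’s access control.

```python
from dataclasses import dataclass
from functools import wraps


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


class Forbidden(Exception):
    """Raised when an authenticated user lacks the required role."""


def require_role(role: str):
    """Reject the call unless the user (first argument) has the given role."""
    def decorator(func):
        @wraps(func)
        def wrapper(user: User, *args, **kwargs):
            if role not in user.roles:
                raise Forbidden(f"{user.name} lacks role '{role}'")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@require_role("admin")
def delete_account(user: User, account_id: int) -> str:
    return f"account {account_id} deleted by {user.name}"
```

With this in place, `delete_account(User("dana", frozenset({"viewer"})), 7)` raises `Forbidden`, while a user whose roles include `"admin"` gets through. A unit test asserting both outcomes is the kind of check that catches AI-generated handlers with no authorization logic.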

Performance bottlenecks

AI often focuses on getting code to work rather than generating the most efficient and performant code. Yes, the Python “for loop” works, but a list comprehension would be more efficient.

It also doesn’t account for language-specific memory management. For example, C++ doesn’t manage heap memory automatically. If AI-generated code forgets to deallocate memory, leaks accumulate until the process runs out of memory. And beyond memory, algorithmic efficiency isn’t its strong suit. Instead of aiming for the lowest time complexity, AI often goes with “good enough,” which might pass in dev but can translate into sluggish response times (or worse, downtime) when your app hits production.
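The sketch below shows both kinds of “works but slow” code in Python: an append loop next to the equivalent list comprehension, and a duplicate finder written with list lookups next to one that uses sets. The function names are made up for the example, and actual speedups depend on input size and interpreter version.

```python
# Append loop versus list comprehension: same result, less overhead per item.
squares_loop = []
for n in range(10):
    squares_loop.append(n * n)

squares_comprehension = [n * n for n in range(10)]


def find_duplicates_quadratic(items):
    """O(n^2): every 'in' check scans a list."""
    seen, dupes = [], []
    for item in items:
        if item in seen and item not in dupes:
            dupes.append(item)
        seen.append(item)
    return dupes


def find_duplicates_linear(items):
    """O(n) on average: set membership checks take constant time on average."""
    seen, reported, dupes = set(), set(), []
    for item in items:
        if item in seen and item not in reported:
            reported.add(item)
            dupes.append(item)
        seen.add(item)
    return dupes


data = [3, 1, 3, 2, 1, 3]
print(find_duplicates_quadratic(data) == find_duplicates_linear(data))
```

Both duplicate finders return the same list, so functional tests pass either way. Only a test with production-sized input exposes the difference, which is why the load tests described later in this guide matter.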

How to Integrate AI Code Testing into Your CI/CD Pipeline

CI/CD is built around four stages: source (commit) stage, build stage, testing stage, and deploy stage. Since AI-generated code can introduce security vulnerabilities and quality issues, it’s important to incorporate AI-specific tests across each stage of the CI/CD pipeline.

Source stage: The first step of the CI/CD workflow is managing source code in shared, version-controlled repositories like Git. Whenever a developer makes a local change, they merge it into the remote main branch so other developers can access it. Once a developer successfully commits or creates a pull request without merge conflicts, the CI/CD pipeline triggers. At this stage, initial quality checks, such as linting or syntax validation, can ensure AI-generated code follows predefined standards and style guidelines.
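As a rough illustration of a source-stage syntax check, the sketch below parses each changed file and reports the ones that fail. The `syntax_errors` helper and the file names are hypothetical, and a real pipeline would typically rely on an established linter instead.

```python
import ast


def syntax_errors(files: dict[str, str]) -> list[str]:
    """Map of path -> source text in, list of 'path:line: message' strings out."""
    problems = []
    for path, source in files.items():
        try:
            ast.parse(source, filename=path)
        except SyntaxError as exc:
            problems.append(f"{path}:{exc.lineno}: {exc.msg}")
    return problems


changed_files = {
    "good.py": "def add(a, b):\n    return a + b\n",
    "bad.py": "def broken(:\n    pass\n",
}
for problem in syntax_errors(changed_files):
    print(problem)
```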

Build stage: Once triggered, the pipeline enters the build stage. Here, the code is compiled into executable or deployable artifacts. For example, Java applications are compiled into bytecode and packaged into JAR or WAR files. For containerized applications, a Docker image is built using a Dockerfile.

Testing stage: The testing stage is where unit tests and integration tests run against the code. Unit tests check whether individual components work correctly by verifying that given inputs produce the expected outputs for a feature or function. Integration tests validate that multiple components work together correctly, including interactions with APIs, databases, and other external systems.

With AI-generated code, this step becomes even more critical. Models often miss edge cases, fail under specific conditions, or behave unpredictably under high traffic scenarios not well represented in their training data. Without thorough testing, these weaknesses surface only after deployment.

Therefore, AI code requires extensive integration tests and load tests to validate how it performs in real-world scenarios.
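Edge cases are also worth pinning down at the unit level. The following sketch uses Python’s built-in `unittest` with a made-up `average` function; the empty-input test is the kind of case generated code tends to skip.

```python
import unittest


def average(values):
    """Mean of a list of numbers; raises ValueError on empty input."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


class AverageTests(unittest.TestCase):
    def test_typical_input(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(average([5]), 5)

    def test_negative_values(self):
        self.assertEqual(average([-1, 1]), 0)

    def test_empty_input_is_rejected(self):
        # Without the guard in average(), this would be a ZeroDivisionError.
        with self.assertRaises(ValueError):
            average([])
```

Saved as a test module, this runs in CI with `python -m unittest`, and a failing case stops the pipeline before the build is deployed.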

Using Proxymock in CI/CD:

One way to strengthen testing for AI-generated code is by adding Proxymock to your CI/CD pipeline. Proxymock captures live traffic and request/response details from your current production. It then replays that same traffic against your new build in a safe test or staging environment.

This approach validates whether your new code handles the same requests that production is already seeing, while measuring latency, throughput, and error rates. In short, Proxymock helps you confirm that AI-generated code is production-ready without introducing unintended behavior or delays into live systems.

Here’s how you can integrate Proxymock into a CI/CD pipeline. For this demonstration, I use a Go project and GitHub Actions.

Inside my Go project, I have a `ci_cd.yml` file that defines my CI/CD pipeline in GitHub Actions. I’ve added a step that uses Proxymock to run both integration tests and load tests.

Step 1:

Copy your Speedscale API key and add it to your repository secrets in GitHub. This ensures the key is available to your workflow without exposing it in the codebase.

Step 2:

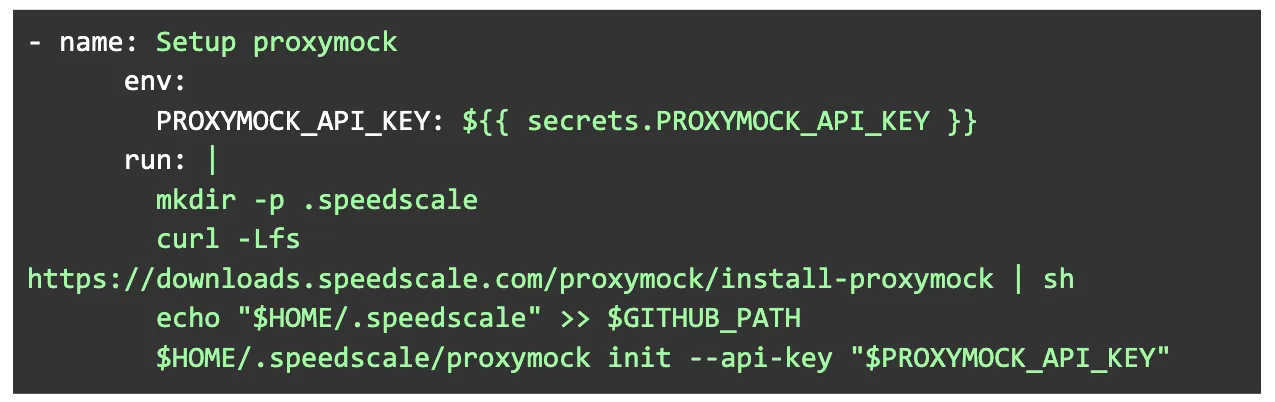

Inside your CI/CD pipeline script, add the following step before running tests. In GitHub Actions, the file usually resides in the directory `.github/workflows/ci_cd.yml`.

This script downloads and installs Speedscale Proxymock into `~/.speedscale` and adds that directory to the runner’s `PATH`, so proxymock is available for later steps in the workflow.

Step 3:

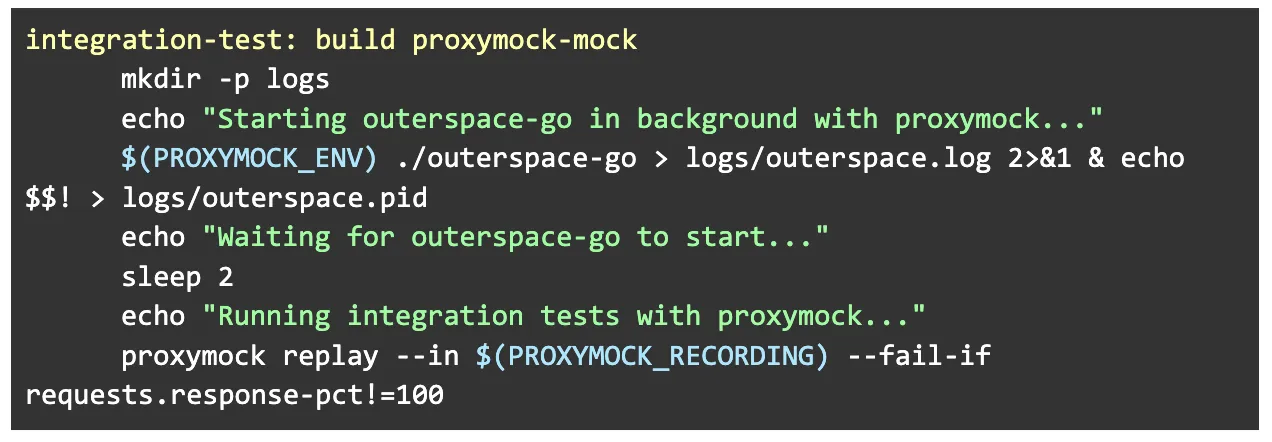

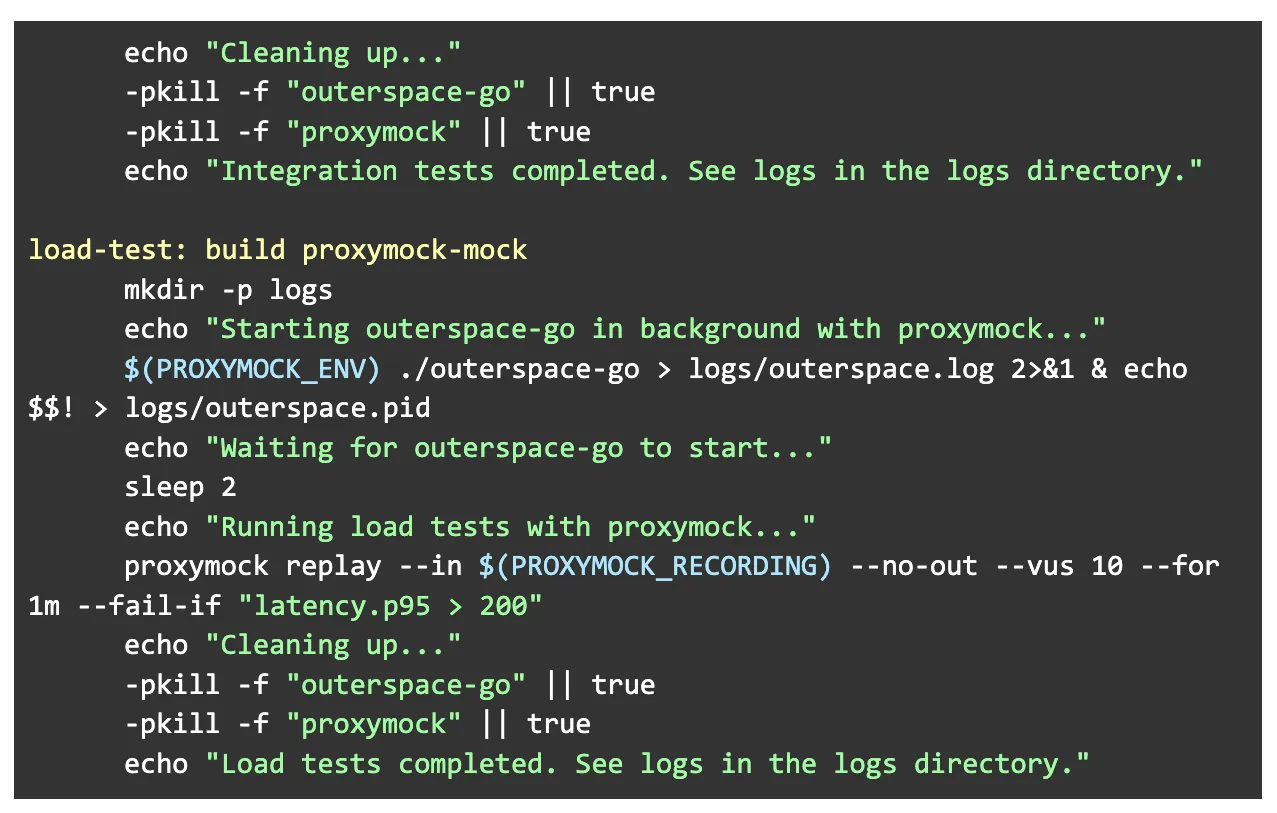

Open the Makefile and add the following test targets:

- integration-test → checks correctness using Proxymock recordings
- load-test → checks performance under load

Both start your app with Proxymock routing, run the replay, and fail CI if rules aren’t met.

Step 4:

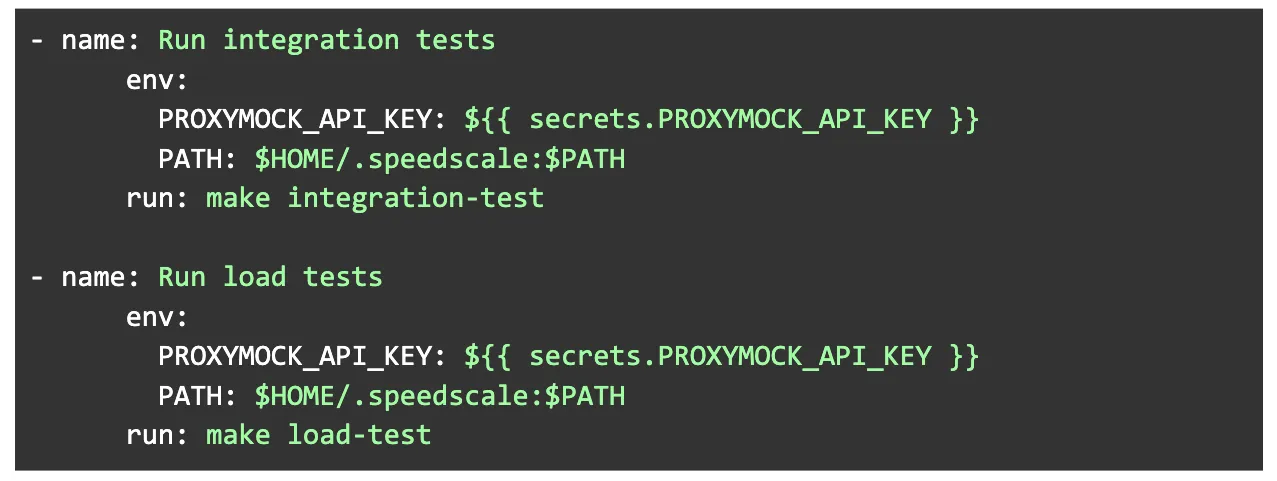

Open your CI/CD script (in my case, it’s located at `.github/workflows/ci_cd.yml`) and add the following snippet to call the Makefile targets in your CI/CD workflow.

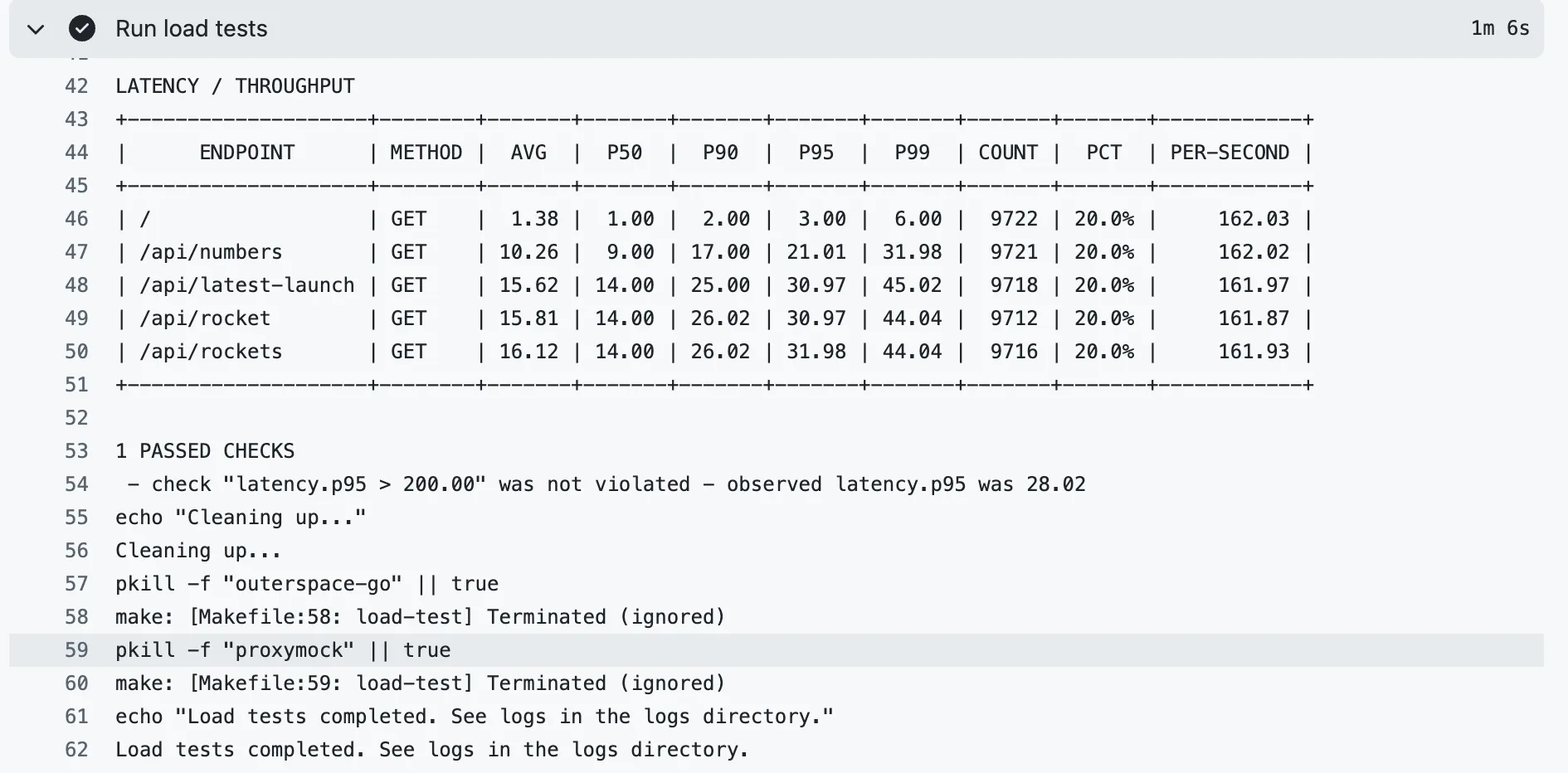

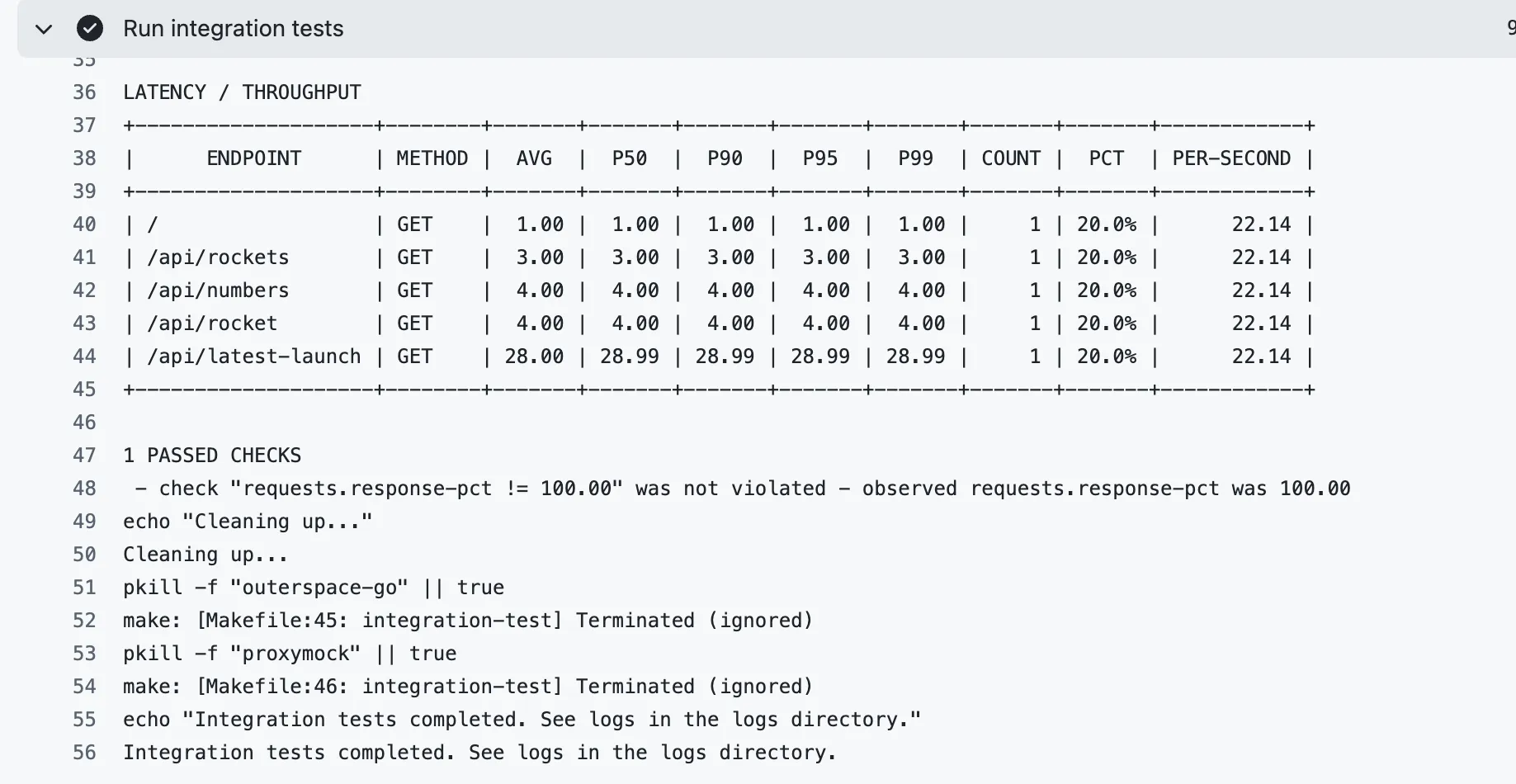

Now, whenever you create a pull request, the CI/CD flow triggers, runs the integration and load tests with Proxymock, and displays the success logs in GitHub Actions, as shown below:

In the integration test above, the traffic replay ran against five requests, and all of them succeeded.

In the load test, the run shows ~9.7k requests per endpoint over ~1 minute (~160 req/s each). The service kept p95 latency at ≈ 28.02, well under the configured p95 ≤ 200 threshold, so performance under load is within the acceptable range.
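To show what a p95 rule of this kind checks, here is a small sketch that computes a nearest-rank 95th percentile and compares it to a limit. It illustrates the idea only and is not how Proxymock evaluates its rules; `percentile`, `check_latency_gate`, and the sample values are hypothetical, and the numbers are in whatever unit the latency report uses.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_latency_gate(samples, p95_limit=200):
    """Return (passed, observed_p95) for a 'p95 <= limit' rule."""
    p95 = percentile(samples, 95)
    return p95 <= p95_limit, p95


latencies = list(range(1, 101))  # made-up latency samples: 1, 2, ..., 100
passed, observed = check_latency_gate(latencies, p95_limit=200)
print(passed, observed)
```

Using a percentile instead of the average keeps a handful of very slow requests from hiding behind many fast ones.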

Best Practices for Reliable AI Code Testing in CI/CD

Configure AI tools to be security-first

Many developers install GitHub Copilot or Claude Code and immediately start generating code, but that approach isn’t security-first. Ideally, you should instruct the LLM to generate secure code. The problem is, repeating security guidelines in every single prompt isn’t efficient.

Most coding tools solve this with configuration files that let you define rules once. For example, Cursor reads project rules from a `.cursor/rules` directory, where you can add security instructions once and have the tool apply them automatically for that repository. It also supports user-level rules that apply across all of your projects.

IDE-based security scanning

Secure coding today starts right inside the IDE. Adding a Static Application Security Testing (SAST) tool directly into your IDE provides a vital first line of defense. It enforces code quality standards, linting, and security guidelines as you write code.

This way, you can catch and fix issues immediately before they ever make it to your remote repository.

Integrate code quality analysis tools into CI/CD

Beyond code quality checks in the IDE, SAST tools can extend their functionality into your CI/CD pipelines. As soon as a developer pushes code, static analysis tools like SonarQube, ESLint, or Pylint run to catch code smells, style issues, and security vulnerabilities before the build continues.

Sure, you can (and should) test for quality and linting in the IDE, but relying on that alone isn’t enough. IDE checks are developer-specific: different people may use different plugins, rulesets, or even versions of the same tool.

In CI, analysis runs with a centralized, standardized configuration. That means the whole team is evaluated against the same coding standards, and anything that slips through at the IDE level gets caught here. That’s why integrating SAST tools into your CI/CD is necessary.

Validate against real traffic

Instead of relying only on traditional integration tests, it’s crucial to observe how AI-generated code behaves under real-world traffic. AI code may compile and even pass tests on known cases, but it often struggles with edge cases or unpredictable real-world conditions.

Performance is another concern. Without testing AI code in CI/CD, you can’t assume it will handle load effectively.

A good practice is to integrate a tool into your CI/CD flow that runs your AI-generated code against real traffic and load, while logging the results. This approach helps validate code under production-like conditions, giving you more confidence before deployment.
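Conceptually, replay-based validation boils down to feeding recorded requests to the new build and comparing the responses with what production returned. The sketch below models that idea in plain Python. It is not Speedscale’s or Proxymock’s implementation, and `Recorded`, `replay`, and `new_build_handler` are hypothetical stand-ins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recorded:
    """One captured request and the response production gave for it."""
    method: str
    path: str
    expected_status: int
    expected_body: str


def replay(recordings, handler):
    """Call handler(method, path) per recording and collect any mismatches."""
    mismatches = []
    for rec in recordings:
        status, body = handler(rec.method, rec.path)
        if (status, body) != (rec.expected_status, rec.expected_body):
            mismatches.append((rec, status, body))
    return mismatches


def new_build_handler(method, path):
    # Stand-in for the new build; it does not implement /orders yet.
    if method == "GET" and path == "/health":
        return 200, "ok"
    if method == "GET" and path.startswith("/users/"):
        user_id = path.rsplit("/", 1)[1]
        return 200, '{"id": ' + user_id + "}"
    return 404, "not found"


recordings = [
    Recorded("GET", "/health", 200, "ok"),
    Recorded("GET", "/users/42", 200, '{"id": 42}'),
    Recorded("GET", "/orders/7", 200, '{"id": 7}'),
]
for rec, status, body in replay(recordings, new_build_handler):
    print(f"{rec.method} {rec.path}: expected {rec.expected_status}, got {status}")
```

The mismatch on `/orders/7` is the kind of regression a handwritten test suite misses when nobody thought to cover that endpoint.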

Continuous monitoring

Even after deployment, AI-generated code can introduce issues or vulnerabilities that only appear in production. Continuous monitoring is needed to detect these problems as they happen and provide logs for investigation.

You can build a custom monitoring solution or use established platforms like Splunk, Datadog, or New Relic. These platforms monitor recently deployed versions for infrastructure health, performance and security issues, custom metrics, SLO breaches, and more. While not strictly part of CI/CD, continuous monitoring complements the pipeline by detecting issues that appear only in production.

Best Tools for Testing AI Code in CI/CD

1. Speedscale

Speedscale captures incoming requests and outbound API calls from the live environment and replays that traffic against AI-generated code. It then checks whether the new version produces the same responses, ensuring functional consistency.

Speedscale also mocks or replays calls to databases, queues, and third-party services like Stripe, Twilio, or AWS. For load testing, it multiplies captured traffic by 10x or 100x to measure performance under scale. It integrates directly into CI/CD pipelines, so this validation step runs automatically.

2. StackHawk

StackHawk is a dynamic application security testing (DAST) tool with a shift-left approach. It automates security testing across REST, GraphQL, SOAP, and gRPC APIs, and integrates smoothly into CI/CD pipelines for fast feedback.

StackHawk simulates attacker behavior on a running version of your application and analyzes the outputs. It identifies vulnerabilities like XSS, SQL injection, and misconfigurations early, before they reach production.

3. Semgrep

Semgrep is an open-source static code analysis tool that scans source code for security vulnerabilities and risky coding patterns. Integrated into the IDE, it enforces secure coding standards, style rules, and code quality as AI generates code. Semgrep also integrates into CI/CD pipelines, where it runs the same checks automatically.

4. Code coverage tools

Every piece of AI-generated code needs test coverage. Depending on the language, developers use tools such as JaCoCo or Cobertura for Java, Coverage.py or pytest-cov for Python, and Istanbul for JavaScript. These tools generate reports that show which parts of the codebase are tested and which are not.

SonarQube is another good option. While it does not measure coverage directly, it integrates with coverage tools and provides a centralized dashboard for managing code quality across multiple languages. It maps coverage reports back to source files and highlights untested sections. It also integrates with CI/CD pipelines and can add coverage to the quality gates (e.g., fail the build if coverage < 70%).
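The coverage gate itself is simple arithmetic. The sketch below shows the check with hypothetical helper names and made-up line counts. In practice you would usually rely on the coverage tool’s own option, such as Coverage.py’s `--fail-under` or pytest-cov’s `--cov-fail-under`, or on a SonarQube quality gate.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Percentage of executable lines exercised by the test suite."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return 100.0 * covered_lines / total_lines


def passes_coverage_gate(covered_lines: int, total_lines: int,
                         minimum: float = 70.0) -> bool:
    """True when coverage meets the threshold; CI should fail otherwise."""
    return coverage_percent(covered_lines, total_lines) >= minimum


print(passes_coverage_gate(700, 1000))  # True: exactly 70% meets the gate
print(passes_coverage_gate(699, 1000))  # False: 69.9% falls short
```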

Final Thoughts on Testing AI Code in CI/CD

AI code undoubtedly improves developer efficiency and speed, but it demands extra care in testing before reaching production to ensure reliability and accuracy. Beyond the usual syntax checks and functionality tests, you’re also hunting for the hallucinated packages and regressions that AI code is more likely to introduce.

Performance and responsiveness are equally critical. Without proper load testing, you can’t guarantee that AI code will withstand production traffic.

Therefore, to address these extra AI-specific concerns, testing AI code in a CI/CD pipeline is necessary.

Start by enforcing code quality standards and linting with static analysis tools, then write effective unit tests for individual components, and finally integrate a tool like Proxymock to perform integration and load testing in a production-like environment with real traffic.