How to Set Up API Observability with Open Source Tools, Istio and Kiali

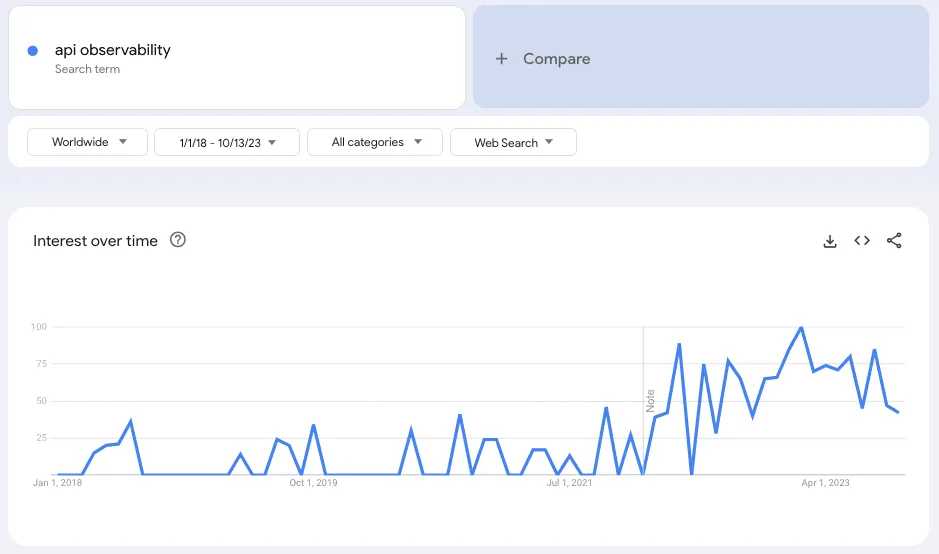

API observability isn’t exactly a new concept; however, its popularity has grown rapidly in the past few years, according to data from Google Trends.

This article will cover what API observability is, how it differs from API monitoring, plus how to set up API observability using two popular open source tools, Istio and Kiali. Finally, we’ll introduce a new, cost-effective method for API observability using Speedscale, a traffic replication and replay solution.

What is API observability and why do you need it?

Imagine you’re running an eCommerce website, and one day you realize that adding an item to the cart has become very slow. With traditional monitoring tools (the predecessor to API observability), you would have had to predefine and set up specific metrics that track the performance of the cart service. For example, these indicators could include requests per second (to understand load) or error rates. For deeper information on this, check out the four golden signals (latency, traffic, errors, and saturation) outlined in the Google SRE book.

Setting up metrics for each HTTP request your service handles, however, isn’t feasible, because it would cause an explosion of metrics.
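To get a feel for the scale, remember that the number of time series grows with the product of every dimension you track. Here is a rough back-of-the-envelope sketch; every count in it is a made-up assumption for illustration, not a measurement from a real system:

```shell
# Hypothetical numbers for illustration only; none come from a real system.
series_count() {
  # $1 = endpoints, $2 = HTTP methods, $3 = status codes, $4 = pods
  echo $(( $1 * $2 * $3 * $4 ))
}

# 50 endpoints x 4 methods x 10 status codes x 20 pods
series_count 50 4 10 20   # prints 40000
```

Even with these modest assumed numbers, a single metric fans out into tens of thousands of series, which is why predefining a metric per request quickly becomes impractical.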

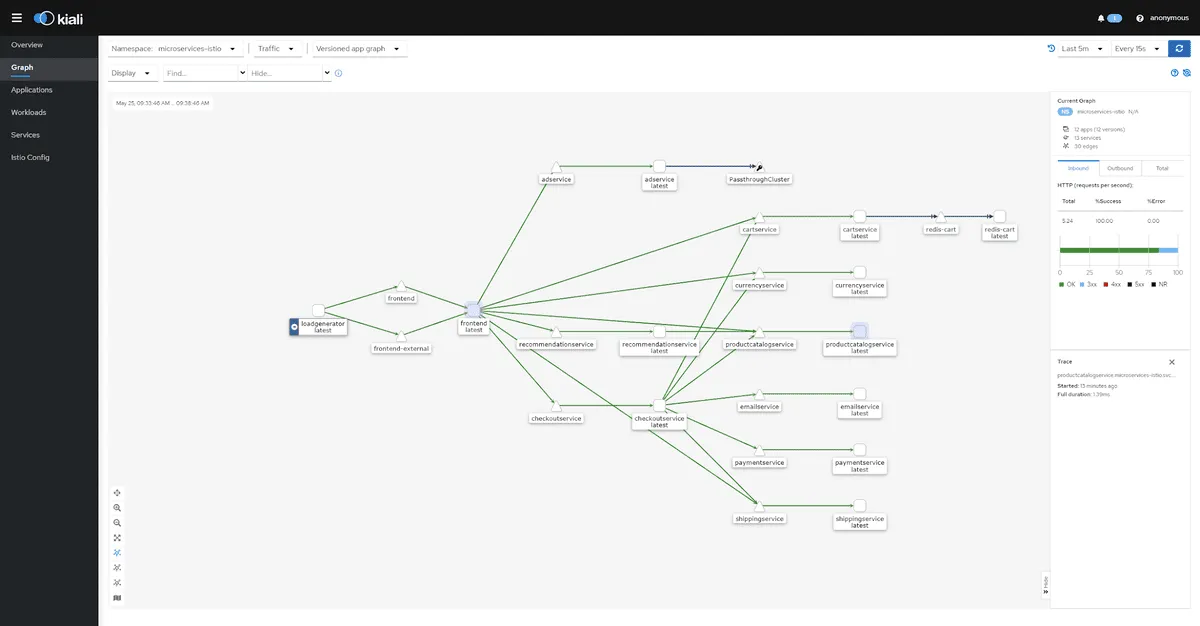

API observability puts monitoring into overdrive. Instead of viewing individual metrics in isolation, you get a cohesive overview of your infrastructure. You’re not only viewing the metrics you’ve explicitly set up; you’re also seeing how your components interact. Instead of trying to guess what might be useful, you have a wide range of automated instrumentation to draw from. With distributed tracing, API observability lets you follow the entire journey of a request from the client to the database and back, which gives you much greater insight into how everything performs end-to-end. The easiest way to understand this big-picture view is with service maps.

Today, when many organizations run hundreds or thousands of microservices, you need observability to properly monitor your application and infrastructure. It gives you deeper insights than are possible with traditional API monitoring. Observability is so effective thanks to three pillars: metrics, logs, and traces. First, metrics help answer the “what”, like which services are down. Aggregated numerical data can identify problems quickly, but metrics alone won’t provide enough context. Logs then help answer the “why”, giving detailed insights from application code. Although logs are comprehensive, their verbosity can make finding the right entry feel like looking for a needle in a haystack. Lastly, traces help answer the “where”, breaking down complex requests and visualizing the chain of calls between your distributed microservices. Together, metrics, logs, and traces give you a solid basis for understanding your infrastructure at any time.

With observability, you would have detailed metrics on every request the service has handled, and you could correlate them with CPU and RAM usage at the time of the request.

Going back to our eCommerce website example, API observability allows you to see exactly what is happening with the cart service so you can understand the root cause and know why it’s taking a long time to add something.

Open source vs. commercial solution

An open source observability solution has the obvious advantage of being free, which attracts many organizations, especially small companies like startups. Open source lets you get functionality like API observability without any upfront cost. Open source observability tools exist for almost anything: for metrics there’s Grafana, OpenMetrics, and Prometheus; for logs there’s the ELK Stack and OpenSearch; and for traces there’s Jaeger, SkyWalking, and Zipkin.

However, the upfront cost is only one part of the equation. You always need to consider the Total Cost of Ownership (TCO) and the capabilities of the solution. TCO encompasses everything that goes into setting something up and keeping it running, like engineering hours. It may well be that you spend so many engineering hours setting up and configuring a tool that the most cost-effective option is to buy a managed solution.

Commercial solutions like Datadog, New Relic, Dynatrace, Splunk, etc. also provide official support channels, which you don’t always get with open source. Many open source projects rely on the community for support. Whether open source or commercial is the right solution for you and your company is up to you, but remember to include all cost factors in your calculations.

Implementing API observability with Istio and Kiali

This tutorial assumes some familiarity with Istio, in particular how it uses CRDs and sidecars. So as not to assume anything about the system you’re working on, let’s start by downloading Istio:

$ curl -L https://istio.io/downloadIstio | sh -

Now move your terminal into the download directory. At the time of writing, the above command downloads v1.13.4, so I’ll run cd istio-1.13.4. You also need to make sure that istioctl is on your PATH:

$ export PATH=$PATH:$PWD/bin
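Before going further, it can help to confirm that the command-line tools used in this tutorial are actually reachable from your shell. The helper below is a small sketch; the function name missing_tools is made up for this example:

```shell
# Print the name of every requested tool that is not found on PATH.
# missing_tools is a hypothetical helper name, not part of any CLI.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}
```

Running missing_tools kubectl istioctl git curl should print nothing if everything is set up; any name it prints still needs to be installed or added to your PATH.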

Now you can finally install Istio into your cluster by running:

$ istioctl install --set profile=demo -y
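Once the install command returns, you may want to confirm that the control plane is actually up before moving on. The sketch below assumes the control plane Deployment is named istiod and lives in the istio-system namespace, which is what a standard istioctl install creates; the function name and the 120 second timeout are arbitrary choices for this example:

```shell
# Wait for the Istio control plane, then list what is running next to it.
# Assumes the default names: Deployment "istiod" in namespace "istio-system".
check_istio_control_plane() {
  kubectl rollout status deployment/istiod -n istio-system --timeout=120s &&
    kubectl get pods -n istio-system
}
```

Call check_istio_control_plane after the install; if the rollout does not finish within the timeout, the pod listing is skipped and the function returns a non-zero status.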

You’ll notice that the demo profile is chosen here, but in production you’d likely use the default profile. Now that Istio is installed you can start using it to instrument your applications. For the purpose of this tutorial, you’ll be deploying the microservices demo from Google. But, before deploying the demo you need to configure your Namespace to work with Istio. This is done by first creating the Namespace for the demo, and then adding a label to the Namespace:

$ kubectl create namespace microservices-istio &&
kubectl label namespace microservices-istio istio-injection=enabled &&
kubectl config set-context --current --namespace=microservices-istio
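Sidecar injection only happens when a pod is created in a namespace that carries the label, so it can be worth confirming the label before you deploy anything. A minimal check, with a function name invented for this example, might look like this:

```shell
# Show a namespace together with the value of its istio-injection label.
# $1 = namespace name
show_injection_label() {
  kubectl get namespace "$1" -L istio-injection
}
```

Running show_injection_label microservices-istio should list the namespace with enabled in the ISTIO-INJECTION column. If the column is empty, pods deployed to the namespace will not receive a sidecar.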

Now let’s download the microservices demo and deploy it to the Namespace:

$ git clone https://github.com/GoogleCloudPlatform/microservices-demo.git &&
cd microservices-demo &&
kubectl apply -f release/kubernetes-manifests.yaml

After a few minutes the microservices should be deployed, which you can monitor by running kubectl get pods.
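Beyond checking that the pods are running, you can confirm that each one actually received the sidecar. The sketch below assumes the injected container is named istio-proxy and appears among the regular containers of the pod, which matches the Istio release used here but may differ in other setups. Both function names are made up for this example:

```shell
# List every pod in a namespace with its container names, one pod per line.
# $1 = namespace name
list_pod_containers() {
  kubectl get pods -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].name}{"\n"}{end}'
}

# Read that listing on stdin and print the pods without an istio-proxy container.
pods_missing_sidecar() {
  awk '{
    found = 0
    for (i = 2; i <= NF; i++) if ($i == "istio-proxy") found = 1
    if (!found) print $1
  }'
}
```

Piping one into the other, as in list_pod_containers microservices-istio | pods_missing_sidecar, should print nothing when every pod has been injected.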

At this point, your application is instrumented with the Envoy sidecar proxy, and it’s time to add the Kiali dashboard so you can view the service map. To do so, change back to the Istio directory you downloaded earlier (the previous command left you inside microservices-demo), then run kubectl apply -f samples/addons. This will add the following services:

- Grafana, a project by Grafana Labs, provides a data visualization dashboard

- Jaeger, a tool that helps implement distributed tracing

- Zipkin, another tool that helps implement distributed tracing and an alternative to Jaeger

- Kiali, an Istio add-on and the dashboard where you can get an overview of your infrastructure

- Prometheus, a Cloud Native Computing Foundation (CNCF) graduated project, is a metrics collection tool

You can check the progress of the Kiali deployment by running kubectl rollout status deployment/kiali -n istio-system. Once you get the message ‘deployment “kiali” successfully rolled out’, you need to run istioctl dashboard kiali. This will create a tunnel to your Kiali dashboard and allow you to view your infrastructure.
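Kiali builds its graph from data collected by the other add-ons, so you may prefer to wait for all of them rather than just Kiali. The loop below is a sketch that takes the Deployment names as arguments. The names in the usage note are an assumption about what the add-on manifests create, so check them with kubectl get deployments -n istio-system first:

```shell
# Wait for each named Deployment in istio-system to finish rolling out.
# Stops at the first one that does not become ready within the timeout.
wait_for_addons() {
  for name in "$@"; do
    kubectl rollout status "deployment/$name" -n istio-system --timeout=180s || return 1
  done
}
```

For example, wait_for_addons grafana jaeger kiali prometheus && istioctl dashboard kiali opens the dashboard only once everything behind it is ready.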

If you are interested in how to extend this visibility using a commercial solution, take a look at the next section.

API observability with Speedscale

Unlike typical API observability tools, Speedscale deconstructs request and response payloads so you can see the exact details of each call.

To get started with it, create a free account at https://app.speedscale.com. Then you can install the Speedscale CLI either using Brew (brew install speedscale/tap/speedctl) or using the install script (sh -c "$(curl -sL https://downloads.speedscale.com/speedctl/install)"). You will be asked for an API key during the install, which you can find in the Speedscale UI. Verify that everything is working as intended by running speedctl check. If no errors are reported, you’re ready to instrument your applications.

You can instrument your applications with Speedscale in two ways. You can either manually add the needed annotations, or you can use the speedctl install command. The speedctl install command is arguably the easiest, as it guides you through the instrumentation. As opposed to how Istio does it, with Speedscale you should deploy your applications before you instrument them. So, start by deploying the microservices demo:

$ kubectl create namespace microservices-speedscale &&
kubectl config set-context --current --namespace=microservices-speedscale &&
git clone https://github.com/GoogleCloudPlatform/microservices-demo.git &&
cd microservices-demo &&
kubectl apply -f release/kubernetes-manifests.yaml
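Since the applications should be deployed before they are instrumented, it can be useful to wait until every pod reports Ready. A minimal sketch follows; the function name and the default timeout of 300 seconds are arbitrary choices for this example:

```shell
# Block until all pods in a namespace are Ready, or the timeout expires.
# $1 = namespace name, $2 = optional timeout (defaults to 300s)
wait_for_pods() {
  kubectl wait --for=condition=Ready pods --all -n "$1" --timeout="${2:-300s}"
}
```

Calling wait_for_pods microservices-speedscale returns a non-zero status if any pod is still not ready when the timeout is reached, which makes it easy to chain with the next step.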

Now you’re ready to instrument the applications by running the speedctl install command. Doing so will give you an output resembling the following:

$ speedctl install

This wizard will walk through adding your service to Speedscale. When we’re done, requests going into

and out of your service will be sent to Speedscale.

Let’s get started!

Choose one:

[1] Kubernetes

[2] Docker

[3] Traditional server / VM

[4] Other / I don’t know

[q] Quit

▸ What kind of infrastructure is your service running on? [q]: 1

✔ Checking Kubernetes cluster access…OK

✔ Checking for existing installation of Speedscale Operator…OK

Choose one:

[1] default

[2] istio-system

[3] kube-node-lease

[4] kube-public

[5] kube-system

[6] microservices-istio

[7] microservices-speedscale

[8] speedscale

[q] Quit

▸ Which namespace is your service running in? [q]: 7

▸ Add Speedscale to all deployments in the microservices-speedscale namespace? Choose no to select a specific deployment. [Y/n]:

ℹ With your permission, Speedscale is able to unwrap inbound TLS requests. To do this we need to know

which Kubernetes secret and key holds your TLS certificate. Certificates are not stored in Speedscale

Cloud nor are they exported from your cluster at any time.

▸ Would you like to unwrap inbound TLS? [y/N]:

The following labels will be added to the microservices-speedscale namespace:

"speedscale": "true"

The following annotations will be added to deployments:

sidecar.speedscale.com/inject: "true"

sidecar.speedscale.com/capture-mode: "proxy"

sidecar.speedscale.com/tls-out: "true"

▸ Continue? [Y/n]:

✔ Patching namespace…OK

✔ Patching deployments…OK

ℹ Patched microservices-speedscale/adservice

ℹ Patched microservices-speedscale/cartservice

ℹ Patched microservices-speedscale/checkoutservice

ℹ Patched microservices-speedscale/currencyservice

ℹ Patched microservices-speedscale/emailservice

ℹ Patched microservices-speedscale/frontend

ℹ Patched microservices-speedscale/loadgenerator

ℹ Patched microservices-speedscale/paymentservice

ℹ Patched microservices-speedscale/productcatalogservice

ℹ Patched microservices-speedscale/recommendationservice

ℹ Patched microservices-speedscale/redis-cart

ℹ Patched microservices-speedscale/shippingservice

▸ Would you like to add Speedscale to another deployment? [y/N]:

Thank you for using Speedscale!

Looking for additional help? Join the Slack community!

Now your application is instrumented with Speedscale, and you can log onto https://app.speedscale.com to view your service connectivity.
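The wizard output above lists the namespace label and the deployment annotations it applies. If you prefer the manual route mentioned earlier, the same label and annotations could in principle be set with kubectl. Treat the following as a sketch: the function name is made up, and whether Speedscale needs anything beyond what the wizard printed is an assumption, so check the Speedscale documentation before relying on it:

```shell
# Apply the label and annotations shown in the wizard output by hand.
# $1 = namespace name, $2 = deployment name
# Assumption: these are all the settings the wizard patches in.
annotate_for_speedscale() {
  kubectl label namespace "$1" speedscale=true --overwrite &&
    kubectl annotate deployment "$2" -n "$1" --overwrite \
      sidecar.speedscale.com/inject=true \
      sidecar.speedscale.com/capture-mode=proxy \
      sidecar.speedscale.com/tls-out=true
}
```

For example, annotate_for_speedscale microservices-speedscale cartservice would target only the cart service, which mirrors choosing a specific deployment in the wizard.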

The Speedscale service map provides some of the same visibility as Kiali. In addition to a simple service map, however, Speedscale lets you inspect the details of each individual transaction.

Unlike open source solutions, Speedscale can provide deep inspection of the headers, query parameters and response bodies of each request. Kiali and Speedscale both provide excellent visibility but Speedscale takes things a step further with no code modifications.

Open source observability vs. Speedscale

By now, you’ve seen how quickly you can implement API observability using open source tools like Istio and Kiali. You’ve also seen an example of using Speedscale, a commercial solution that provides similar but also complementary functionality. Which of these options is the right one for you? It ultimately depends on the needs and use cases of your organization. Open source has no upfront license cost, but it requires significant engineering hours to install, maintain, and use. The Speedscale solution is more streamlined, requires less setup and maintenance, and provides deeper visibility, but it comes with an upfront cost.
