The Trust Gap in AI-Generated Code

AI coding agents are on the verge of transforming software development, but only if engineering leaders can trust them. Right now, three fundamental concerns are holding back adoption:

- Correctness: Does the code actually do what it’s supposed to?

- Security: Does the code introduce vulnerabilities that could harm the business?

- Cost: Does the AI get stuck in endless reasoning loops that run up your bill?

The challenge is rooted in a fundamental mismatch: AI is stochastic, but software engineering is deterministic.

AI models are probability machines: they generate the “most likely” answer based on patterns in their training data. Under the hood, machine learning techniques and neural network architectures, especially deep networks with many hidden layers, let these models extract complex patterns from large datasets and apply them to tasks such as code generation and prediction. That is what makes them capable, but it also means every output is a sample from a probability distribution rather than a guaranteed result.

Software, however, lives in a binary world where a build either passes the test suite or it doesn’t. There’s no “close enough” in production. Without purpose-built tooling, accurately replicating production traffic for testing is often impossible, which makes it very hard to confirm that AI-generated code is reliable before deployment.

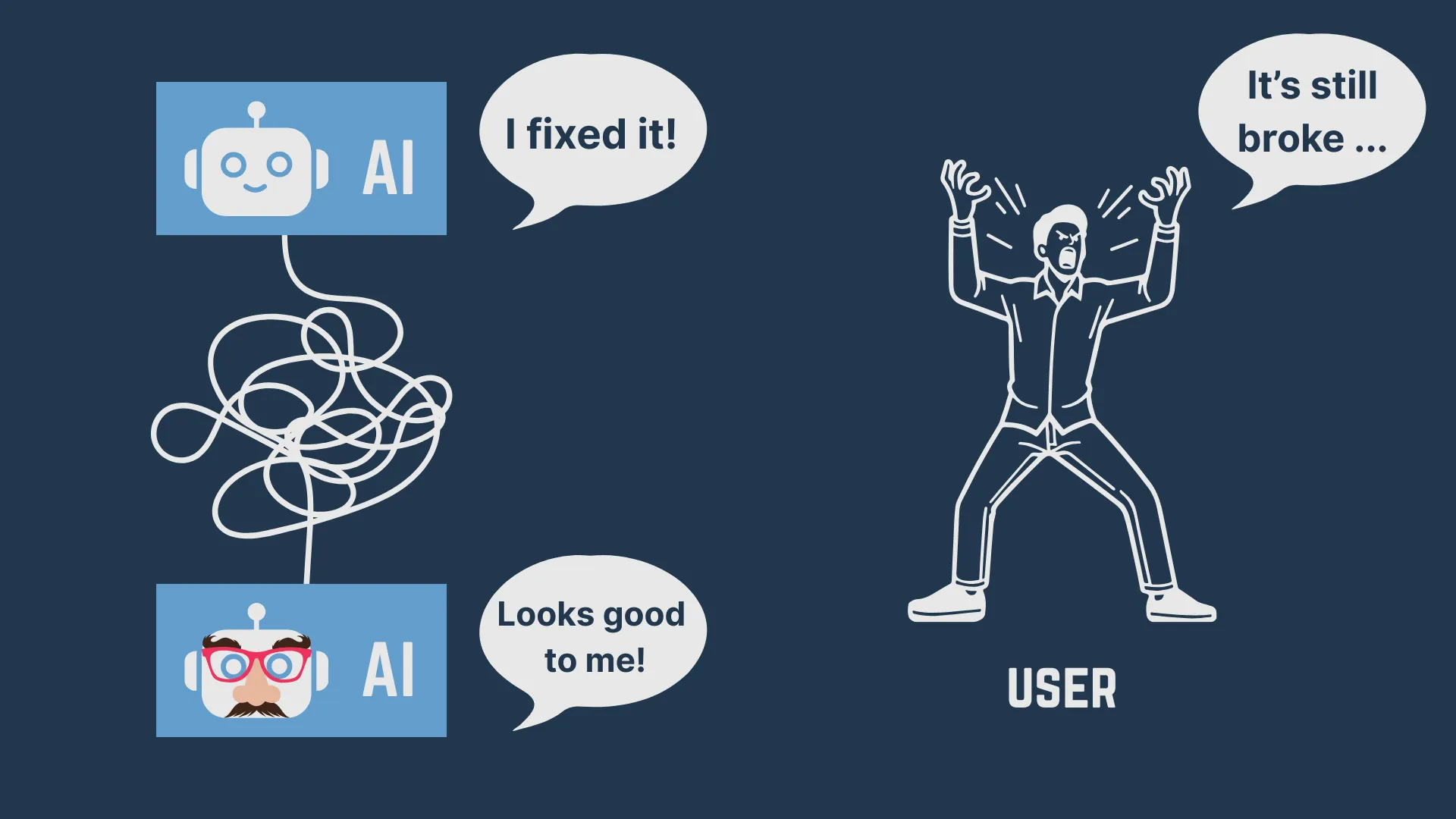

The Current Risk: Faster Bad Code

Without a deterministic validation process, AI coding assistants risk simply generating more bad code, faster. Speed without correctness is not progress; it’s technical debt on an exponential growth curve. Left unchecked, teams could end up spending more time debugging AI-generated output than they save in initial coding effort.

Users often try to close AI’s gaps by giving the model more compute time. That works for some kinds of problems, but it is not uncommon to see an LLM attempt the same solution ten or more times unless a human steps in. Meanwhile, the cost of running advanced reasoning models appears to still be rising. Runaway cost of this kind is worrying for a single engineer; for a team of 100 or more, the bill can be staggering.
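To see why retries hurt most at team scale, here is a back-of-the-envelope sketch in Python. Every input (tasks per day, tokens per attempt, price per million tokens) is a hypothetical placeholder, not a measured figure; the only point is that spend scales linearly with both the number of attempts and the number of engineers.

```python
def daily_agent_cost(
    engineers: int,
    tasks_per_engineer: int,
    attempts_per_task: int,
    tokens_per_attempt: int,
    usd_per_million_tokens: float,
) -> float:
    """Rough daily model spend in USD: every retry burns another full attempt's tokens."""
    total_tokens = engineers * tasks_per_engineer * attempts_per_task * tokens_per_attempt
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Placeholder inputs (hypothetical, not measured): 10 tasks/day, 50k tokens/attempt, $10 per 1M tokens.
one_engineer_first_try = daily_agent_cost(1, 10, 1, 50_000, 10.0)
team_of_100_ten_retries = daily_agent_cost(100, 10, 10, 50_000, 10.0)
print(one_engineer_first_try, team_of_100_ten_retries)  # 5.0 5000.0
```

With these placeholder numbers the team's bill is 1,000 times the solo engineer's: 100x from headcount and 10x from retries. Whatever the real prices are, that multiplication is what makes runaway retries expensive.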

Deterministic Testing

If AI coding agents are going to be more than autocomplete on steroids, they must be embedded in a development process that enforces deterministic correctness. That means every AI-generated change must be demonstrably correct, verified by deterministic checks, before it reaches production.

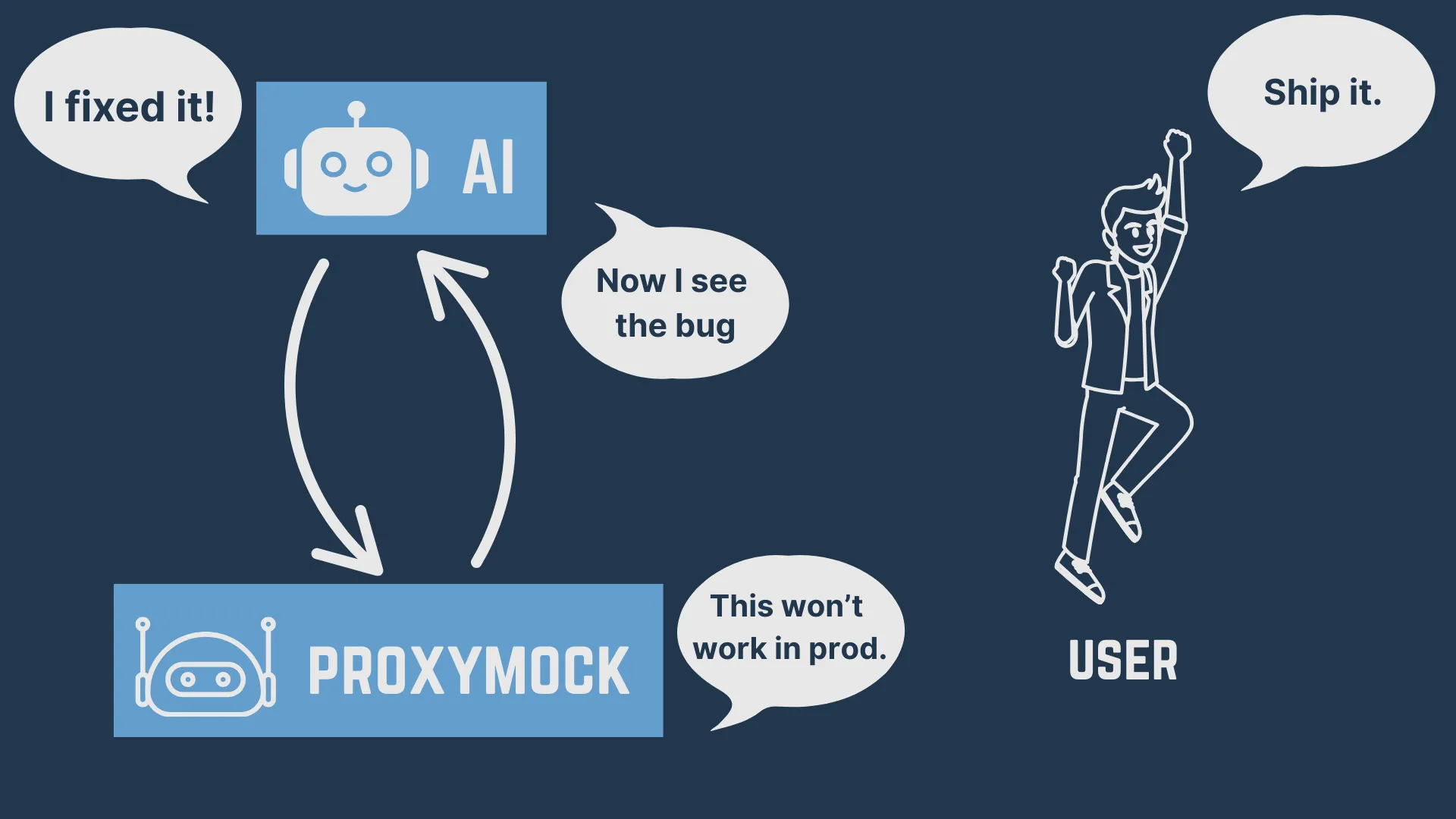

The key is to integrate deterministic testing steps directly into the AI feedback loop: not just at human review time, but continuously as the AI iterates. Note that I am not suggesting we bring back lengthy QA cycles; that kind of outer loop destroys the velocity we’re trying to achieve. Instead, we need an inner development loop in which the AI works with an AI tester without extensive human supervision. This rapid back-and-forth lets the AI make better decisions while consuming less compute time, which lowers the cost of each task.
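A minimal Python sketch of such an inner loop, assuming the agent's output can be treated as a callable and the tester returns a deterministic pass/fail report. `inner_loop`, `propose`, `run_tests`, `CheckReport`, and the `max_attempts` budget are all hypothetical names for illustration, not part of any specific product; the fake agent simply stands in for a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Candidate = Callable[[int], int]  # the "code" the agent proposes; a plain function here


@dataclass
class CheckReport:
    passed: bool
    failures: List[str] = field(default_factory=list)


def inner_loop(
    propose: Callable[[List[str]], Candidate],
    run_tests: Callable[[Candidate], CheckReport],
    max_attempts: int = 3,
) -> Tuple[Optional[Candidate], int]:
    """Agent proposes, deterministic tester checks, failures are fed back.

    Returns (candidate, attempts_used); candidate is None if the budget ran out.
    """
    feedback: List[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        report = run_tests(candidate)
        if report.passed:
            return candidate, attempt
        feedback = report.failures  # concrete failures, not just "try again"
    return None, max_attempts  # hand off to a human instead of retrying forever


# Hypothetical demo task: "write a function that doubles its input".
CASES = {0: 0, 3: 6, -2: -4}


def deterministic_tester(fn: Candidate) -> CheckReport:
    failures = [f"f({x}) = {fn(x)}, expected {want}" for x, want in CASES.items() if fn(x) != want]
    return CheckReport(passed=not failures, failures=failures)


def fake_agent(feedback: List[str]) -> Candidate:
    # Stand-in for an AI agent: buggy on the first try, fixed once it sees failures.
    return (lambda x: x * 2) if feedback else (lambda x: x + 2)


solution, attempts = inner_loop(fake_agent, deterministic_tester)
print(attempts)  # 2
```

The hard cap on attempts is the design choice that matters for cost: a model that keeps retrying the same idea is stopped and escalated rather than left to run up the bill, and each retry it does get is informed by concrete failing cases.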

One of the most effective ways to build that inner loop is traffic replay.

Traffic Replay Introduces Determinism

Traffic replay brings a new level of determinism to testing by letting developers capture real production traffic and replay it on demand. Because every run feeds the code the same recorded inputs, results are reproducible; because those inputs come from real users, the tests stay relevant to actual user behavior and system interactions. Developers can turn the captured traffic into test cases that reflect real-world usage and catch issues before they affect users. For example, when testing an AI-powered chatbot, replaying real user interactions helps confirm that the system responds appropriately across a variety of scenarios, reducing the risk of unexpected behavior in production. As a result, developers can validate AI-generated code with confidence, knowing it has been tested under the same kinds of conditions it will face in the real world.
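The core idea can be sketched in a few lines of Python: a recording of request/response pairs is replayed against new code, and any difference from the recorded response counts as a failure. The recording format, the `/price` endpoint, and the `replay` function are hypothetical illustrations, not proxymock's format or API.

```python
import json
from typing import Callable, List

# Hypothetical recording format: each exchange pairs a captured request with the
# response the production service actually returned.
RECORDING = json.loads("""[
  {"request": {"path": "/price", "params": {"sku": "A1", "qty": 2}},
   "response": {"status": 200, "body": {"total": 20}}},
  {"request": {"path": "/price", "params": {"sku": "A1", "qty": 0}},
   "response": {"status": 400, "body": {"error": "qty must be positive"}}}
]""")

Handler = Callable[[dict], dict]
PRICES = {"A1": 10}


def replay(recording: List[dict], handler: Handler) -> List[str]:
    """Replay each recorded request against `handler`; return every mismatch.

    Same recording + same handler -> same verdict on every run.
    """
    mismatches = []
    for i, exchange in enumerate(recording):
        actual = handler(exchange["request"])
        if actual != exchange["response"]:
            mismatches.append(f"#{i}: expected {exchange['response']}, got {actual}")
    return mismatches


def ai_rewrite(req: dict) -> dict:
    # Candidate change that preserves the recorded behavior, including the edge case.
    qty = req["params"]["qty"]
    if qty <= 0:
        return {"status": 400, "body": {"error": "qty must be positive"}}
    return {"status": 200, "body": {"total": PRICES[req["params"]["sku"]] * qty}}


def ai_regression(req: dict) -> dict:
    # Candidate change that silently drops the qty validation.
    return {"status": 200, "body": {"total": PRICES[req["params"]["sku"]] * req["params"]["qty"]}}


print(replay(RECORDING, ai_rewrite))          # []
print(len(replay(RECORDING, ai_regression)))  # 1
```

The regression passes the "happy path" request and fails only on the recorded edge case, which is exactly the kind of input a model reasoning on its own tends to overlook.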

proxymock: The Iteration Engine for AI Coding Agents

To operationalize this vision, we built proxymock:

- Seamless Integration: proxymock captures real traffic flows and lets your local app think it is running in a real environment with actual backends and users.

- Deterministic Test Harness: The captured traffic becomes a replayable dataset the AI can run its code against, producing definitive pass/fail and performance results.

- Edge-Aware Validation: Because the test cases mirror real production behavior, including the edge cases real users actually hit, proxymock is naturally stricter than the AI’s own reasoning.

- Dependency Simulation: Modern apps depend on cloud services, APIs, and databases; proxymock simulates these dependencies based on their observed behavior in a real environment.

- Continuous Learning Loop: The AI gets immediate, deterministic feedback, allowing it to adjust code before a human even reviews it.
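Dependency simulation can be approximated, in its simplest form, as a lookup table of responses recorded from real backends. The class, URLs, and data below are hypothetical and are not proxymock's API; this sketch matches only on method and URL, whereas a realistic simulator would also need to consider headers, bodies, and non-HTTP protocols such as database traffic.

```python
from typing import Dict, List, Tuple


class RecordedDependency:
    """Serves responses captured from a real backend, keyed by (method, url).

    Hypothetical illustration of dependency simulation, not a real tool's API.
    """

    def __init__(self, recorded: Dict[Tuple[str, str], dict]):
        self._recorded = recorded
        self.calls: List[Tuple[str, str]] = []  # every request the app made

    def fetch(self, method: str, url: str) -> dict:
        self.calls.append((method, url))
        key = (method, url)
        if key not in self._recorded:
            # Fail loudly: the code under test made a call never seen in the real environment.
            raise LookupError(f"unrecorded call: {method} {url}")
        return self._recorded[key]


def order_summary(fetch, order_id: str) -> str:
    # Application code under test: it only knows it has a fetch() function.
    order = fetch("GET", f"https://orders.example/api/orders/{order_id}")
    user = fetch("GET", f"https://users.example/api/users/{order['user_id']}")
    return f"{user['name']} ordered {order['qty']} item(s)"


fake = RecordedDependency({
    ("GET", "https://orders.example/api/orders/42"): {"user_id": "u7", "qty": 3},
    ("GET", "https://users.example/api/users/u7"): {"name": "Ada"},
})
summary = order_summary(fake.fetch, "42")
print(summary)  # Ada ordered 3 item(s)
```

Raising on an unrecorded call, rather than returning a default, is a deliberate choice: it surfaces new or changed dependency calls in AI-generated code instead of letting them pass silently.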

With proxymock, engineering leaders can give AI coding agents the deterministic safety net they need to be trusted contributors to the codebase. Proxymock uses deep neural networks as underlying models, enabling advanced capabilities such as natural language processing and generating or validating images.

AI coding agents will only realize their full value when they are held to the same deterministic standards as human engineers. Traffic replay, powered by tools like proxymock, can close the trust gap and unlock AI’s potential without compromising on correctness or security. Industry leaders such as Google leverage advanced AI, neural networks, and traffic replay to enhance their products and development workflows.

To learn more, visit proxymock.io.