Turn AI Code Changes into Validated Tests

- ✨ AI generates code fast; traffic replay proves it works

- ✨ Auto-generate tests from real traffic, not synthetic data

- ✨ Catch regressions AI introduces before they reach production

Why AI-Generated Code Needs Traffic-Based Tests

AI coding tools optimize for code that is syntactically correct and looks plausible, not for how your system behaves in production. They have never seen your actual traffic.

What AI Has Never Seen

- Your actual API request patterns and payloads

- Edge cases users hit daily (emoji, Unicode, legacy formats)

- How third-party APIs actually respond (including errors)

- Timing-dependent behaviors under real load

What Traffic-Based Tests Catch

- The scenarios your production system handles today, as captured in recorded traffic

- Behavioral regressions AI changes introduce

- Contract violations that break downstream consumers

- Performance degradation under realistic load

Automated Test Generation

Zero Scripting

Generate comprehensive test suites from recorded traffic without writing a single line of test code.

Real User Scenarios

Tests are based on actual user interactions, capturing edge cases and complex workflows.

Continuous Updates

Keep tests current by automatically updating them as your APIs evolve.

Generated Test Types

Integration Tests

Generate comprehensive integration tests that validate API interactions, data flow, and system behavior across multiple services.

- API endpoint validation

- Request/response matching

- Cross-service workflows
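To make the request/response matching above concrete, here is a minimal sketch of what a traffic-derived test could reduce to: replay a recorded request against the code under test and compare the result with the recorded response. The `RecordedExchange` format, the `replay` function, and the sample handler are all hypothetical names chosen for illustration; they are not the product's actual API or storage format.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class RecordedExchange:
    """One captured request/response pair (hypothetical format)."""
    method: str
    path: str
    request_body: dict[str, Any]
    expected_status: int
    expected_body: dict[str, Any]


# A handler takes (method, path, body) and returns (status, response_body).
Handler = Callable[[str, str, dict[str, Any]], tuple[int, dict[str, Any]]]


def replay(exchange: RecordedExchange, handler: Handler) -> list[str]:
    """Replay one recorded request and return a list of mismatches."""
    status, body = handler(exchange.method, exchange.path, exchange.request_body)
    mismatches = []
    if status != exchange.expected_status:
        mismatches.append(
            f"status: expected {exchange.expected_status}, got {status}"
        )
    # Every recorded field must still be present with the same value.
    for key, expected in exchange.expected_body.items():
        if key not in body:
            mismatches.append(f"body.{key}: missing")
        elif body[key] != expected:
            mismatches.append(
                f"body.{key}: expected {expected!r}, got {body[key]!r}"
            )
    return mismatches


# Stand-in for the (possibly AI-modified) code under test.
def create_user_handler(method: str, path: str, body: dict[str, Any]):
    return 201, {"name": body["name"], "active": True}


# A recorded exchange containing the kind of input real users send.
recorded = RecordedExchange(
    method="POST",
    path="/users",
    request_body={"name": "Zoë 🙂"},
    expected_status=201,
    expected_body={"name": "Zoë 🙂", "active": True},
)

print(replay(recorded, create_user_handler))  # prints []
```

An empty list means the replayed behavior matches what production returned when the traffic was recorded. Any entry in the list points to a behavioral change worth reviewing before merge.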

Digital Twin Mocks for AI Development

Give AI coding tools realistic backend responses without hitting live systems. Mocks are auto-generated from real traffic—no manual stub maintenance.

- Third-party API mocks (Stripe, Salesforce, Twilio)

- Database responses (Postgres, MySQL, Redis)

- AI agents get production context locally via MCP (Model Context Protocol)
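The idea behind a mock built from recordings can be sketched in a few lines: index the captured responses by request and serve them back, including the error responses a hand-written stub tends to omit. The `ReplayMock` class, the recording format, and the paths below are assumptions made up for this example; they do not represent any vendor's real API or the product's internals.

```python
from typing import Any

# Hypothetical recordings of calls to a third-party payments API.
RECORDINGS = [
    {
        "method": "GET",
        "path": "/v1/charges/ch_ok",
        "status": 200,
        "body": {"id": "ch_ok", "paid": True},
    },
    {
        "method": "GET",
        "path": "/v1/charges/ch_declined",
        "status": 402,
        "body": {"error": "card_declined"},
    },
]


class ReplayMock:
    """Serves recorded responses instead of calling the live system."""

    def __init__(self, recordings: list[dict[str, Any]]) -> None:
        self._responses: dict[tuple[str, str], tuple[int, dict[str, Any]]] = {}
        for rec in recordings:
            key = (rec["method"].upper(), rec["path"])
            self._responses[key] = (rec["status"], rec["body"])

    def request(self, method: str, path: str) -> tuple[int, dict[str, Any]]:
        """Return the recorded (status, body) for a request."""
        key = (method.upper(), path)
        if key not in self._responses:
            # Fail loudly so unrecorded calls are noticed, not silently faked.
            raise LookupError(f"no recording for {method.upper()} {path}")
        return self._responses[key]


mock = ReplayMock(RECORDINGS)
print(mock.request("GET", "/v1/charges/ch_declined"))
# prints (402, {'error': 'card_declined'})
```

Raising on an unrecorded request is a deliberate choice in this sketch: it surfaces new outbound calls introduced by a code change instead of hiding them behind a default response.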

How It Works

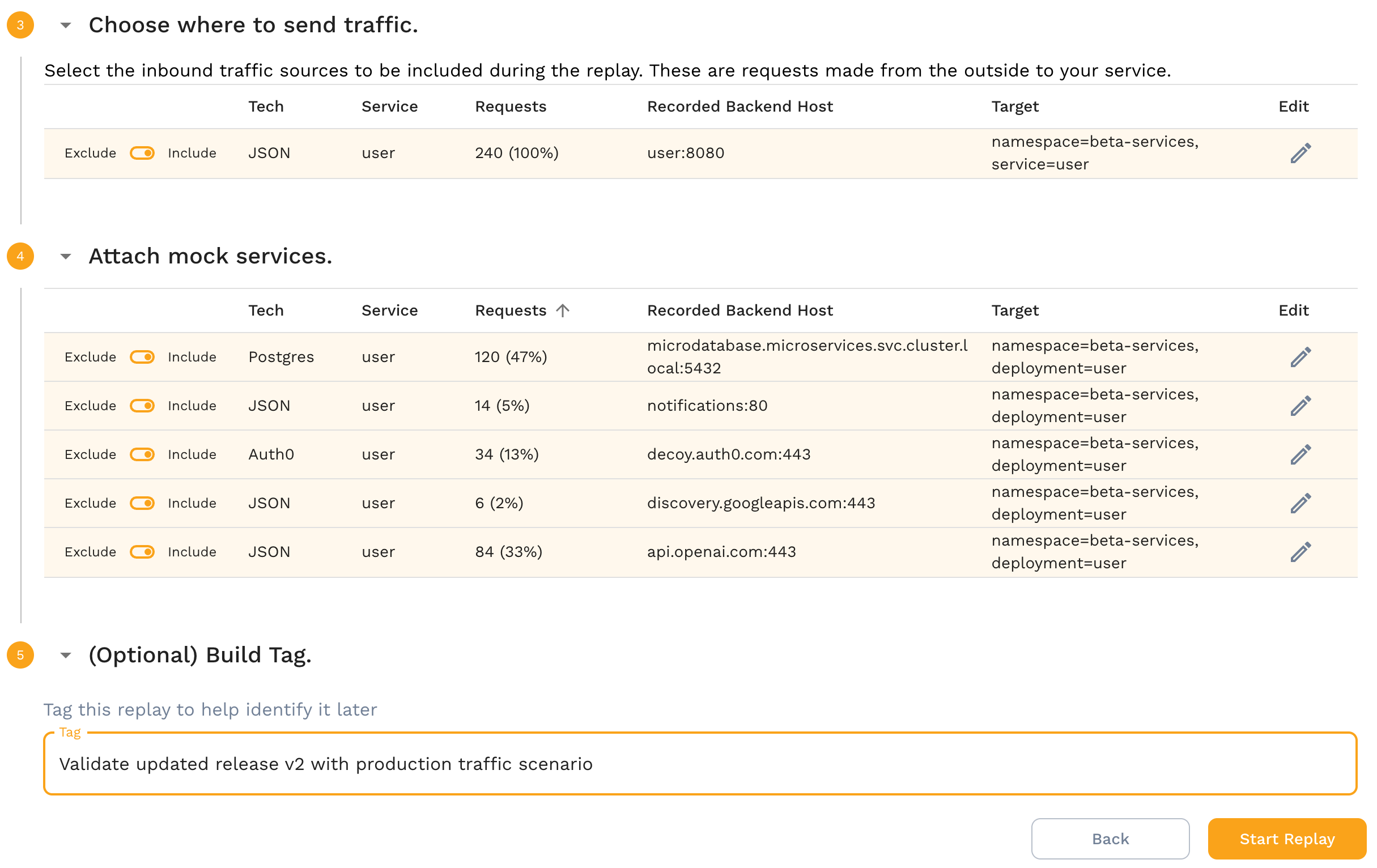

Capture Traffic

Record live API interactions and user flows

Auto-Generate

Convert recordings into tests and mocks automatically

Parameterize

Intelligently handle dynamic data and variables

Execute

Run tests in CI/CD or use mocks for development
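The Parameterize step is the least obvious of the four, so here is a rough sketch of one way it could work: values that legitimately change on every run (IDs, timestamps) are replaced with placeholders before recorded and replayed responses are compared. The regular expressions, placeholder names, and `parameterize` function are illustrative assumptions, not the product's actual rules.

```python
import re
from typing import Any

# Patterns for values that differ between recording and replay.
UUID_RE = re.compile(
    r"^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$",
    re.IGNORECASE,
)
ISO_TS_RE = re.compile(
    r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})$"
)


def parameterize(value: Any) -> Any:
    """Recursively replace dynamic strings with stable placeholders."""
    if isinstance(value, dict):
        return {key: parameterize(item) for key, item in value.items()}
    if isinstance(value, list):
        return [parameterize(item) for item in value]
    if isinstance(value, str):
        if UUID_RE.match(value):
            return "{{uuid}}"
        if ISO_TS_RE.match(value):
            return "{{timestamp}}"
    return value


recorded = {
    "id": "3f2b8c1e-9d4a-4e7b-8a6f-1c2d3e4f5a6b",
    "created_at": "2024-05-01T12:30:00Z",
    "items": [{"sku": "A-1", "qty": 2}],
}
replayed = {
    "id": "a1b2c3d4-e5f6-4a7b-8c9d-0e1f2a3b4c5d",
    "created_at": "2025-01-15T08:00:00+00:00",
    "items": [{"sku": "A-1", "qty": 2}],
}

# Different IDs and timestamps, same behavior once parameterized.
print(parameterize(recorded) == parameterize(replayed))  # prints True
```

Without this step, every replay would fail on freshly generated IDs and timestamps even when the behavior is unchanged. Stable fields such as `sku` and `qty` are left untouched, so a real change in them still fails the comparison.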

Ready to validate AI code with real traffic?

Stop hoping AI-generated code works. Prove it with traffic-based tests that catch what static analysis misses.