Production Traffic Capture

Record HTTP, gRPC, GraphQL, and database traffic from Kubernetes, ECS, or local environments. Automatic PII redaction keeps compliance teams happy.

AI coding tools generate code 10x faster—but they've never seen your production traffic. Speedscale captures real request/response patterns and replays them against AI-generated changes, so you ship code that actually works.

Three steps to validate AI-generated code against production reality.

Record your production reality

Capture real API traffic, database queries, and third-party interactions from production or staging. PII is automatically redacted. No synthetic data, no guesswork—just actual request/response pairs that represent how your system really works.
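To make the redaction idea concrete, here is a minimal sketch of masking sensitive values in a captured request/response pair before it is stored. It is an illustration only, not Speedscale's redaction engine: the `SENSITIVE_KEYS` set, the email pattern, the `redact` helper, and the sample payload are all assumptions made for this example.

```python
import re
from typing import Any

# Hypothetical set of field names to mask; a real setup would configure its own.
SENSITIVE_KEYS = {"email", "ssn", "password", "card_number"}
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
MASK = "***REDACTED***"


def redact(value: Any) -> Any:
    """Return a copy of a JSON-like value with sensitive data masked."""
    if isinstance(value, dict):
        return {
            key: MASK if key.lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    if isinstance(value, str):
        # Mask email addresses embedded in free-text fields.
        return EMAIL_RE.sub(MASK, value)
    return value


# Made-up captured pair, used only to exercise the helper.
captured = {
    "request": {"path": "/users", "body": {"email": "a@example.com", "name": "Ada"}},
    "response": {"status": 201, "body": {"id": 7, "note": "welcome a@example.com"}},
}
redacted = redact(captured)
```

The helper builds a new structure rather than mutating the capture, so the original pair stays available to whatever step runs before storage.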

Build production context instantly

Speedscale auto-generates realistic service mocks from captured traffic. Your AI coding tool gets a complete backend environment—databases, third-party APIs, microservices—without spinning up expensive infrastructure or hitting live systems.
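Conceptually, a mock built from captured traffic is a lookup from a request signature to the response that was recorded for it. The sketch below shows that idea in its simplest form. The recording layout and the `build_mock` and `serve` helpers are hypothetical names for this example; a real mock would also match on headers, bodies, and call order.

```python
from typing import Any, Dict, List, Tuple

Signature = Tuple[str, str]  # (HTTP method, path)


def build_mock(recordings: List[Dict[str, Any]]) -> Dict[Signature, Dict[str, Any]]:
    """Index recorded responses by request signature."""
    table: Dict[Signature, Dict[str, Any]] = {}
    for rec in recordings:
        table[(rec["method"], rec["path"])] = rec["response"]
    return table


def serve(table: Dict[Signature, Dict[str, Any]], method: str, path: str) -> Dict[str, Any]:
    """Return the recorded response, or a 404 when nothing was captured."""
    return table.get((method, path), {"status": 404, "body": None})


# Made-up recordings of outbound calls to a payments dependency.
recordings = [
    {"method": "GET", "path": "/v1/charges/ch_1", "response": {"status": 200, "body": {"paid": True}}},
    {"method": "POST", "path": "/v1/refunds", "response": {"status": 201, "body": {"id": "re_1"}}},
]
mock = build_mock(recordings)
hit = serve(mock, "GET", "/v1/charges/ch_1")
miss = serve(mock, "GET", "/v1/unknown")
```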

Validate AI code against reality

Replay captured traffic against AI-generated code changes. Compare responses byte-for-byte. Catch regressions in behavior, latency, and contract compliance before the code ever reaches production.
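As a rough illustration of what comparing a recorded response with a replayed one involves, the sketch below walks two JSON-like values and reports each field that differs. It is a field-level comparison rather than a byte-for-byte one, and it is not Speedscale's implementation; the `diff_fields` helper and the sample payloads are assumptions made for this example.

```python
from typing import Any, List


def diff_fields(expected: Any, actual: Any, path: str = "$") -> List[str]:
    """Collect human-readable mismatches between two JSON-like values."""
    if isinstance(expected, dict) and isinstance(actual, dict):
        problems: List[str] = []
        for key in sorted(set(expected) | set(actual)):
            child = f"{path}.{key}"
            if key not in actual:
                problems.append(f"{child}: missing in actual")
            elif key not in expected:
                problems.append(f"{child}: unexpected in actual")
            else:
                problems.extend(diff_fields(expected[key], actual[key], child))
        return problems
    if isinstance(expected, list) and isinstance(actual, list):
        if len(expected) != len(actual):
            return [f"{path}: length {len(expected)} != {len(actual)}"]
        problems = []
        for i, (exp_item, act_item) in enumerate(zip(expected, actual)):
            problems.extend(diff_fields(exp_item, act_item, f"{path}[{i}]"))
        return problems
    if expected != actual:
        return [f"{path}: expected {expected!r}, got {actual!r}"]
    return []


# The replayed response returns the total as a string instead of a number.
recorded = {"status": 200, "body": {"id": 7, "total": 19.99, "items": ["a", "b"]}}
replayed = {"status": 200, "body": {"id": 7, "total": "19.99", "items": ["a", "b"]}}
mismatches = diff_fields(recorded, replayed)
```

Here `mismatches` holds a single entry pointing at `$.body.total`, the kind of type drift that still compiles and passes unit tests but breaks a consumer of the API.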

# 1. Capture traffic from production

proxymock record -- java -jar MYJAR.jar

# 2. AI writes code

claude OR opencode OR cursor OR copilot

# 3. Run the mock server to simulate reality

proxymock mock -- java -jar MYJAR.jar

# 4. Replay realistic scenarios to find new defects

proxymock replay --fail-if "latency.p95 > 50" --fail-if "requests.failed > 0"

2 EVALS PASSED

✔ passed eval "latency.p95 > 50.00" - observed latency.p95 was 46.00

✔ passed eval "requests.failed > 0.00" - observed requests.failed was 0.00

The difference between code that compiles and code that works in production.

| Capability | Standard AI Coding | Traffic-Aware AI Coding |
|---|---|---|
| Test data source | Synthetic data, mocked responses, developer assumptions | Real production traffic patterns, actual API behaviors, genuine edge cases |
| Environment fidelity | Unit tests pass in isolation; integration breaks in staging | Production context replicates topology, dependencies, and data shapes |
| Edge case coverage | Limited to scenarios developers anticipate | Includes every edge case that production has actually encountered |
| Third-party API testing | Manual mocks that drift from reality; expensive sandbox calls | Auto-generated mocks from real API responses; zero live API costs |
| Regression detection | Catches syntax errors and type mismatches | Catches behavioral regressions, latency spikes, and contract violations |
| CI/CD integration | Tests pass but production fails; 'works on my machine' | Traffic replays run in every PR; validation reports block bad merges |
| Time to confidence | Days of manual QA after AI generates code | Minutes to validate against thousands of real scenarios |

Diff view shows expected vs. actual responses for every replayed request

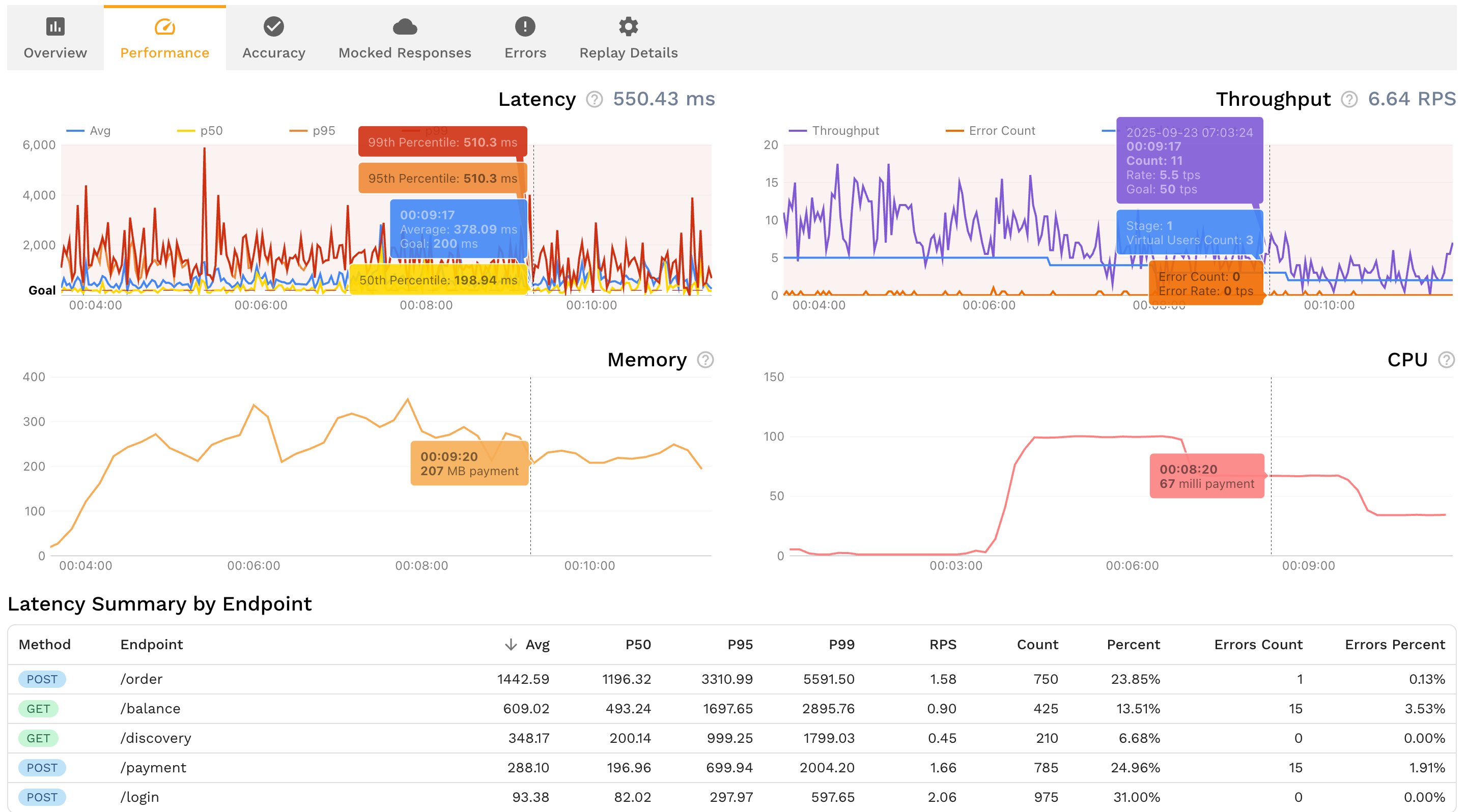

Latency histograms reveal performance regressions before they hit users

Contract violations highlighted with exact field mismatches

One-click export to share with AI agents for automated fixes

Everything you need to verify AI-generated code against production reality.

Turn captured traffic into realistic mocks of Stripe, Salesforce, Postgres, Redis, and any other dependency. No manual stub maintenance.

Replay traffic and diff actual vs. expected responses. Catch payload mismatches, missing fields, and schema violations that static analysis can't see.

Give Claude Code, Cursor, Copilot, and other AI coding tools direct access to traffic snapshots and replay results via Model Context Protocol.

Multiply captured traffic 10x or 100x to stress-test AI-generated code. No synthetic load scripts that miss production's actual traffic shape.

Attach machine-readable test results directly to GitHub, GitLab, or Bitbucket PRs. Reviewers see exactly which calls passed or failed.
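The load multiplication idea mentioned above can be sketched as repeating each captured request several times at its original offset, so volume grows while the shape of the traffic over time stays the same. This is a simplified illustration, not how Speedscale generates load; the timeline format and the `multiply` helper are assumptions made for this example.

```python
from typing import List, Tuple

# (offset in seconds from the start of the capture, request identifier)
Timeline = List[Tuple[float, str]]


def multiply(timeline: Timeline, factor: int) -> Timeline:
    """Repeat every captured request `factor` times at its original offset."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    scaled: Timeline = []
    for offset, request_id in timeline:
        for copy in range(factor):
            # Tag each copy so replay results can be traced to their source request.
            scaled.append((offset, f"{request_id}#{copy}"))
    return scaled


# Made-up capture: a burst at the start, then a single late request.
captured = [(0.0, "GET /cart"), (0.1, "POST /checkout"), (4.5, "GET /orders")]
load = multiply(captured, 10)
```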

The earlier you catch issues, the cheaper and faster they are to fix. Runtime validation integrates at every stage—from local development to production deployment.

Give Claude Code, Cursor, and Copilot direct access to production traffic via Model Context Protocol. AI agents see real request patterns and edge cases while writing code, generating better implementations from the start. Validate changes instantly in your local environment before committing.

Run traffic replay locally through the Proxymock MCP server before submitting your pull request. Verify that AI-generated code handles real production scenarios, catches regressions, and maintains API contracts—all before your code enters team review.

Automate runtime validation in GitHub Actions, GitLab CI, or Jenkins. Every pull request runs traffic replay against AI-generated changes. Validation reports attach automatically to PRs, blocking merges that introduce regressions or break contracts. Reviewers get evidence, not assertions.

Run final runtime validation when deploying to staging or production environments. Capture fresh traffic from the deployment target, replay it against the new version, and validate performance, contracts, and behavior under production load before releasing to users.
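For the CI stage described above, a merge gate comes down to turning replay measurements into an exit code. The sketch below mirrors the two conditions from the replay transcript earlier on this page (`latency.p95 > 50` and `requests.failed > 0`). It is a hedged illustration, not Proxymock's evaluator: the `p95` and `gate` helpers are hypothetical, and treating the latency values as milliseconds is an assumption.

```python
from typing import List


def p95(latencies: List[float]) -> float:
    """Nearest-rank 95th percentile of the observed latencies."""
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def gate(latencies: List[float], failed: int, p95_limit: float = 50.0) -> int:
    """Return a process exit code: 0 allows the merge, 1 blocks it."""
    if not latencies:
        return 1  # nothing was replayed, so nothing was validated
    if p95(latencies) > p95_limit or failed > 0:
        return 1
    return 0


# Made-up measurements from a replay run (assumed to be milliseconds).
exit_code = gate([12.0, 18.5, 46.0], failed=0)
```

In a pipeline step, passing the result to `sys.exit` would make the job fail whenever either condition is hit, which is what lets a required status check block the pull request.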

The earlier you validate, the faster and cheaper issues are to fix. Runtime validation with production context shifts detection left—from expensive production incidents to quick local fixes.

Production scenario coverage with traffic replay vs. 40-60% with synthetic tests

Reduction in production incidents by catching issues before deploy

Less time writing tests—auto-generate from captured traffic

Give your AI coding tools the production context they need. Capture production traffic, validate every change, and ship with confidence.

Step-by-step guide to integrating Proxymock into your CI/CD pipeline for AI code validation.

ROI Analysis: How traffic replay testing delivers 9x ROI through reduced infrastructure and incident costs.

Tool: Free CLI for local recording, testing, and mocking APIs from real traffic.