Picking the right performance testing tool can be a challenge. What should you look for and what is important?

Performance testing is a phrase many developers have come across at some point, but what is it exactly?

In simple terms, performance testing is a software testing practice used to determine the stability, responsiveness, scalability, and, most importantly, speed of an application under a given workload. Its goal is to identify and eliminate performance bottlenecks in the application.

These tests collect several indicators of performance, such as:

- CPU and memory consumption

- browser information

- server query processing time

- number of acceptable concurrent users

- page and network response times

- number/type of errors that may be encountered when using an application
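To make a few of these indicators concrete, here is a minimal Python sketch of how raw per-request samples might be reduced to the median and 95th-percentile response time, the error rate, and the errors grouped by type. The `Sample` record shape and all function names are assumptions for illustration; real tools such as Locust compute and report their own statistics.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    """One recorded request (hypothetical record shape for illustration)."""
    latency_ms: float
    error: Optional[str] = None  # e.g. "HTTP 500" or "timeout"; None means success


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile of an empty list")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples: list[Sample]) -> dict:
    """Reduce raw samples to a few headline indicators."""
    latencies = [s.latency_ms for s in samples]  # failed requests are included too
    errors = Counter(s.error for s in samples if s.error is not None)
    return {
        "requests": len(samples),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": max(latencies),
        "error_rate": sum(errors.values()) / len(samples),
        "errors_by_type": dict(errors),
    }
```

For the five samples `[Sample(120), Sample(95), Sample(300, "HTTP 500"), Sample(110), Sample(2000, "timeout")]`, `summarize` reports a median of 120 ms, a p95 of 2000 ms, and an error rate of 0.4. Percentiles are used rather than the average because a single slow outlier can hide behind a healthy-looking mean.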

All this data gives you insight into how your application would perform in production with thousands of concurrent users. There are many performance testing tools on the market; in this article, we’ll briefly compare two of them, Speedscale and Locust.

Comparing Speedscale and Locust

Speedscale is a managed performance testing solution designed specifically for Kubernetes. You can test your API with real-world traffic scenarios and simulate that traffic as part of your continuous integration pipeline without writing any scripts.

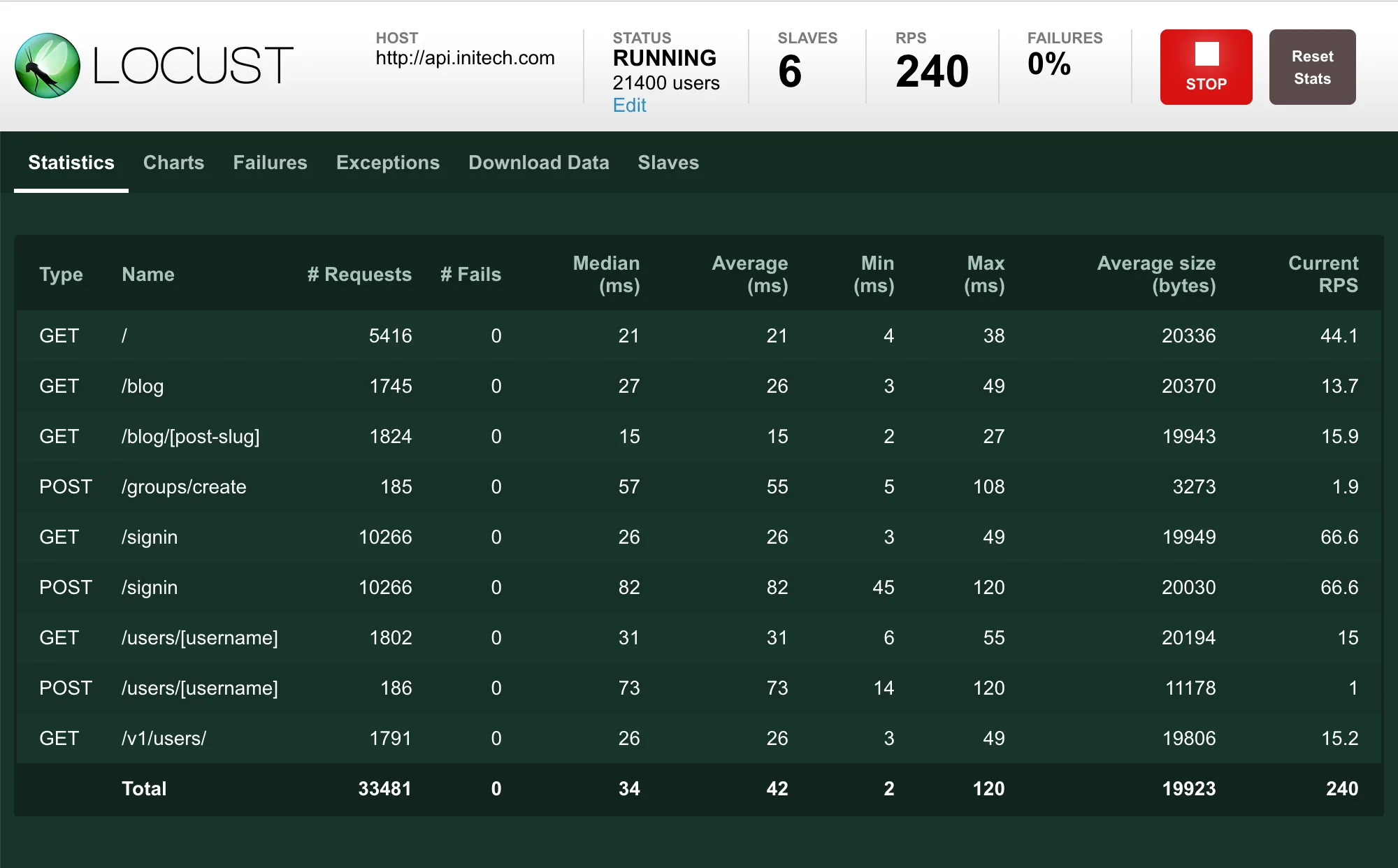

Locust is an open-source load testing tool written in Python. You write tests against your application in plain Python to simulate user behavior, then run them at scale to find performance issues and bottlenecks. Running tests can be monitored in real time from Locust’s web UI.
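Locust’s model is a set of simulated users that repeatedly execute tasks with a wait time between them. The stdlib-only sketch below imitates that idea with threads so it runs without Locust installed; it is not Locust’s API (Locust itself uses classes such as `HttpUser`, gevent-based concurrency, and its own statistics). `simulate_users`, `target`, and the parameters are hypothetical names.

```python
import random
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def simulate_users(
    target: Callable[[], None],
    users: int,
    requests_per_user: int,
    wait_range_s: tuple[float, float] = (0.0, 0.01),
) -> list[float]:
    """Run `users` concurrent simulated users; each calls `target` repeatedly,
    pausing a random "think time" between calls. Returns latencies in ms."""
    latencies: list[float] = []
    lock = threading.Lock()  # protects the shared latency list

    def user_session() -> None:
        for _ in range(requests_per_user):
            start = time.perf_counter()
            target()  # stands in for an HTTP request to the system under test
            elapsed_ms = (time.perf_counter() - start) * 1000
            with lock:
                latencies.append(elapsed_ms)
            time.sleep(random.uniform(*wait_range_s))  # simulated think time

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(user_session) for _ in range(users)]
        for f in futures:
            f.result()  # re-raise any exception from a user thread
    return latencies
```

With a stand-in endpoint such as `def fake_endpoint(): time.sleep(0.001)`, calling `simulate_users(fake_endpoint, users=5, requests_per_user=4)` returns 20 latency measurements. In a real test, `target` would issue an HTTP request, and the returned latencies could be fed into percentile and error-rate summaries.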

Goals of the Tools

Picking the right tool for your workflow can be challenging. Does the tool integrate well with your system? Does it provide the functionality you need?

Speedscale aims to be the best performance testing tool designed for Kubernetes. It’s a fully managed solution and doesn’t require you to write any code or scripts for tests. Its main objective is to integrate seamlessly with your Kubernetes CI pipeline and test new code changes against previously recorded traffic.

Locust’s goal is to let you simulate the behavior of your users and swarm your application with regular Python code, instead of being constrained by a domain-specific language or a UI. This makes Locust very developer-friendly.

Setting Up the Tools

Speedscale has its own CLI tool called speedctl, which can be installed in your Kubernetes cluster with a simple kubectl apply -f command. After speedctl is configured, everything else related to the deployment can be configured using annotations.

Best of all, this step doesn’t require deep knowledge of Kubernetes. If you’re stuck at any point, there’s an excellent support team ready to help.

Locust is distributed as a Python package and can also be used as a library, so you can install it with Python’s package manager pip using the command pip3 install locust. A beta version of Locust is also available.

Running Locust in a distributed setup, such as on a Kubernetes cluster, is less straightforward, however. You can use open-source scripts, like the one provided by Google, and set up the tool on your cluster using the kubectl apply -f command. You can read more about installing Locust on Kubernetes here.

Unmanaged vs. Managed

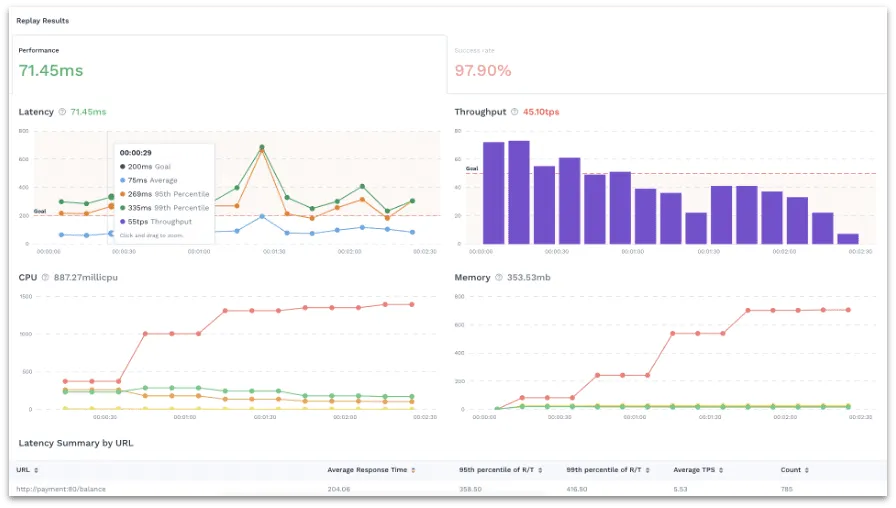

Since Speedscale is a managed solution, Speedscale takes care of the web dashboard’s uptime. All metrics are passed from your Kubernetes cluster to the dashboard, where you can see your cluster’s performance metrics and even control tests without writing any code.

Locust, being an open-source tool that you host yourself, is typically run as an unmanaged solution. If anything goes wrong with the connected Kubernetes services, you need to debug and fix the issue yourself.

Writing Tests

Speedscale takes on the burden of writing test code and lets you autogenerate mocks from your dashboard without writing any code. This is an advantage over traditional load testing: because backend dependencies can be mocked, you don’t need production-grade pods to execute a replay, which makes simulating high inbound traffic much cheaper. All results and tests are available in the web UI, where you can drill down into every response and edit the tests as necessary.
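The record-and-replay idea can be illustrated with a small Python sketch: captured inbound requests are replayed against a new version of a service, while its downstream dependency is answered from recorded responses, so no real backend is needed. This is a conceptual model under simplifying assumptions (string requests, exact-match comparison of responses); it is not how Speedscale is implemented, and every name in it is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RecordedCall:
    """One captured inbound request, its response, and the dependency calls it made."""
    request: str
    response: str
    dependency_responses: dict[str, str] = field(default_factory=dict)


class MockDependency:
    """Answers downstream calls from recorded responses instead of a real backend."""

    def __init__(self, recorded: dict[str, str]) -> None:
        self.recorded = recorded

    def call(self, key: str) -> str:
        if key not in self.recorded:
            # The new code made a call that was never recorded; surface it loudly.
            raise KeyError(f"no recorded response for {key!r}")
        return self.recorded[key]


def replay(
    traffic: list[RecordedCall],
    service: Callable[[str, MockDependency], str],
) -> list[str]:
    """Replay recorded requests against `service`; return those whose response changed."""
    regressions = []
    for call in traffic:
        mock = MockDependency(call.dependency_responses)
        if service(call.request, mock) != call.response:
            regressions.append(call.request)
    return regressions
```

For example, with recorded traffic for "apple" and "pear" whose price lookups are mocked, a service that still formats responses as `f"{item}:{price}"` yields no regressions, while a change that alters the "pear" response is reported as `["pear"]`. Because the dependency is a cheap in-memory mock, the same replay can be repeated at high volume without provisioning the real backend.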

Depending on the scenario, you may need to write multiple tests in Locust, and maintaining them can be cumbersome and leaves lots of room for error. Even if you know Python well, some issues can be tricky to tackle, since Locust doesn’t have a user-friendly UI for test creation, and its documentation doesn’t cover all of its features.

Traditionally, all the Python test files are stored in a particular folder, which Locust reads to perform load testing. Whenever you need to change a test, you have to edit its test file.

Developer Experience

With Speedscale, it’s easy to see how your application will perform under production load without writing any complex scripts. You can spin up a snapshot of your backend and run isolated performance tests without affecting production. You can also simulate how your application or service responds to errors, unresponsive dependencies, and random latency.
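A common way to test how a service copes with failing dependencies is a mock that injects faults. The Python sketch below is a generic illustration of that technique, not Speedscale’s API: `FaultyMock`, its parameters, the exception types, and `run_against` are hypothetical, and latency is returned instead of actually slept so the example runs instantly.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Optional


class DependencyError(Exception):
    """Simulated error response from a downstream service."""


class DependencyTimeout(Exception):
    """Simulated non-responsive downstream service."""


@dataclass
class FaultyMock:
    """Mock dependency that injects timeouts, errors, and random latency.

    Latency is returned instead of slept so the example runs instantly;
    a real mock would delay its response by that many milliseconds.
    """
    error_rate: float = 0.10
    timeout_rate: float = 0.05
    latency_range_ms: tuple[float, float] = (5.0, 250.0)
    seed: Optional[int] = None

    def __post_init__(self) -> None:
        self.rng = random.Random(self.seed)  # seeded for reproducible runs

    def call(self) -> float:
        roll = self.rng.random()  # one roll decides the outcome of this call
        if roll < self.timeout_rate:
            raise DependencyTimeout("no response")
        if roll < self.timeout_rate + self.error_rate:
            raise DependencyError("HTTP 503")
        return self.rng.uniform(*self.latency_range_ms)


def run_against(mock: FaultyMock, calls: int) -> Counter:
    """Tally how a trivial client fares against the faulty dependency."""
    outcomes: Counter = Counter()
    for _ in range(calls):
        try:
            mock.call()
            outcomes["ok"] += 1
        except DependencyTimeout:
            outcomes["timeout"] += 1
        except DependencyError:
            outcomes["error"] += 1
    return outcomes
```

With the default rates, roughly 10% of calls fail with an error and 5% time out, so a test can assert that the code under test retries, falls back, or reports the failure as intended. Setting a rate to 0.0 or 1.0 gives deterministic all-success or all-failure scenarios.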

You’ll have to write test scripts for Locust, which has its downsides:

- The process is slow and tiresome

- Maintaining scripts and editing them is a difficult task

- Testing with a larger workload can be a burden on your systems, which can increase your cost

Since Python is comparatively slower than many other languages, Locust needs more CPU power to simulate traffic, which also limits the amount of traffic it can simulate.
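Whether this overhead matters depends on how much traffic you need to generate. One rough estimate combines the interactive response time law, X = N / (R + Z), where N is the number of concurrent users, R the average response time, and Z the think (wait) time between requests (roughly Locust’s `wait_time`), with a per-generator capacity that you measure yourself. The sketch below is plain arithmetic under those steady-state assumptions; the function names are hypothetical.

```python
import math


def offered_load_rps(users: int, response_time_s: float, think_time_s: float) -> float:
    """Interactive response time law rearranged for throughput: X = N / (R + Z)."""
    return users / (response_time_s + think_time_s)


def load_generators_needed(target_rps: float, rps_per_generator: float) -> int:
    """Round up, since a fraction of a generator still needs a whole process or machine."""
    return math.ceil(target_rps / rps_per_generator)
```

As an illustration only: 5,000 users with a 0.2 s response time and 4.8 s of think time generate about 5000 / (0.2 + 4.8) = 1,000 requests per second. If one load-generating process were measured at 300 requests per second for your scripts, you would need ceil(1000 / 300) = 4 of them. The per-generator figure here is hypothetical; measure it on your own hardware.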

On the upside, since you’re the one writing the code, you can have granular control over the testing scenarios.

Your Current Workflow

Speedscale integrates easily with your CI/CD workflow, and its web dashboard lets you keep tabs on performance at a glance.

Locust also has a web-based interface where you can run tests and monitor them in real time. However, the dashboard isn’t very user-friendly. You can skip the web UI and run Locust headless or use it as a library, which is better suited to CI/CD.
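When Locust runs without its web UI in a pipeline, you still need a pass/fail rule. A small Python gate like the one below could run after the load test and fail the build when the 95th-percentile response time or the error rate crosses a limit. How you feed it data (for example, from the CSV statistics Locust can write with its --csv option) is left open; the function names and thresholds are hypothetical.

```python
import math


def p95(latencies_ms: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(latencies_ms)
    return ordered[max(1, math.ceil(0.95 * len(ordered))) - 1]


def check_thresholds(
    latencies_ms: list[float],
    failures: int,
    p95_limit_ms: float,
    max_error_rate: float,
) -> list[str]:
    """Return human-readable violations; an empty list means the run passes."""
    total = len(latencies_ms)  # assumes one latency per request, failed ones included
    if total == 0:
        return ["no requests were recorded"]
    violations = []
    observed = p95(latencies_ms)
    if observed > p95_limit_ms:
        violations.append(f"p95 {observed:.0f} ms exceeds limit of {p95_limit_ms:.0f} ms")
    error_rate = failures / total
    if error_rate > max_error_rate:
        violations.append(f"error rate {error_rate:.1%} exceeds limit of {max_error_rate:.1%}")
    return violations


def exit_code(violations: list[str]) -> int:
    """Print each violation and map the result to a process exit code for the CI job."""
    for v in violations:
        print(f"FAIL: {v}")
    return 1 if violations else 0
```

In a CI step, a script would end with something like `sys.exit(exit_code(check_thresholds(latencies, failures, 500, 0.01)))`, so any non-empty list of violations produces a non-zero exit status and fails the job.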

Conclusion

Picking the right performance testing tool can be challenging, but hopefully this article has given you insight into what Locust and Speedscale each offer.

If you want to have a managed performance testing solution dedicated specifically to Kubernetes, and you don’t want to write a single line of code for the tests, check out Speedscale. You’ll get a simple and user-friendly web UI where you can take a look at the performance and metrics in real time and check responses for each request. The simplicity makes Speedscale an easy addition to your CI/CD workflow.

Locust is great if your team is familiar with Python and you want to have granular control over your test scenarios. It’s flexible enough for your CI/CD workflow if you’re willing to put in the maintenance. Note that even though Locust supports distributed testing, each manager and worker instance must have its own copy of the test scripts. The UI only runs on the manager instance, which can make Locust even harder to manage.