It’s no longer theoretical - artificial intelligence has left research labs and entered production systems, generating a new breed of consumers - autonomous and intelligent agents. These autonomous AI agents are increasingly interacting with real-world APIs (application programming interfaces), which are sets of protocols and tools for building and integrating software applications. Agents chain API calls into multi-step workflows to accomplish complex tasks, often with minimal or no human oversight.

Unlike traditional human-driven traffic, AI-driven requests can be unpredictable, bursty, and often orchestrated by multiple agent workflows simultaneously. These requests can expose unexpected security vulnerabilities, create performance bottlenecks, and challenge conventional rate-limiting and authentication strategies. Tokens and API keys are commonly used to authorize and identify each API call made by AI agents, ensuring proper access control and request validation.

Unfortunately, many APIs are not designed for this new reality. Teams often need human intervention to spot patterns and work out what is actually happening under the hood when these complex, coordinated requests arrive. API endpoints serve as critical communication points between systems and, if not properly secured, can become sources of vulnerabilities or performance issues.

Today, we’re going to dive into how AI agents actually work and why their bursty behavior demands a fresh approach to testing and monitoring. We’ll explore how Speedscale enables teams to simulate complex, agent-driven traffic in realistic scenarios, and you’ll learn how to prepare your APIs for the next wave of traffic patterns, ensuring reliability, security, and cost efficiency. As you prepare, it’s important to evaluate available tools that help define agent functionality, roles, and interactions to better manage and secure your systems.

Understanding AI Agent Traffic

AI systems often appear very human-like due to their Natural Language Processing and reasonably powerful decision-making capabilities. Still, they are not exactly like humans yet - they have some distinct ways of doing things, they generate specific types of traffic, and ultimately, they work in very different ways from human agents. Modeling realistic social behaviors is crucial for simulating human-like interactions and emergent patterns within complex systems.

While AI agents can adapt and improve their effectiveness over time, developing advanced AI agents that can handle complex tasks and interactions can be computationally expensive, requiring significant computing resources.

The development and analysis of AI agents is a key area of research within computer science, involving reasoning, planning, architecture, and performance evaluation.

What Are AI Agents – And How Do AI Agents Work?

AI agents are at the forefront of modern software systems, designed to operate autonomously or semi-autonomously in a variety of environments. These agents leverage advanced technologies such as natural language processing and machine learning to interpret user input, analyze data, and adapt their behavior over time. The way AI agents work is rooted in their ability to perceive their environment—whether that’s a digital system, a stream of user requests, or a complex network of services—and make decisions that help them achieve specific goals.

In practice, AI agents process vast amounts of data, identify patterns, and execute actions that can range from automating repetitive tasks to assisting with complex, multi-step workflows. For example, an AI agent might monitor system performance, respond to user queries, or optimize business processes by learning from past interactions. This adaptability allows AI agents to continuously improve their effectiveness, making them invaluable in contexts where both speed and accuracy are critical.

By integrating seamlessly with software systems, AI agents can enhance user experiences, streamline operations, and tackle complex tasks that would be too time-consuming or error-prone for humans alone. Their ability to operate within diverse environments and respond dynamically to changing conditions is what sets them apart as a transformative force in today’s digital landscape.

AI agents are software systems that use artificial intelligence and machine learning to perform tasks autonomously. They range from simple reflex agents, which are driven by predefined rules to perform a particular and limited function, to advanced goal-based and utility-based agents that maintain an internal model of the world, learn from past interactions, and make rational decisions to complete complex workflows. Historically, agents and applications often interacted with traditional APIs such as the Java API, which provided application programming interfaces for Java-based systems before the rise of modern web APIs.

Below are a few of the common types of AI agents you might find in the wild, along with their key features. Keep in mind that this is an evolving industry, so today’s models will differ markedly from what is to come.

- Simple Reflex Agents: Operate within the confines of simple tasks using condition-action rules (e.g., if endpoint A returns 200, then call endpoint B). These are most often used for repetitive tasks, as they represent a significant cost savings over human users.

- Model-Based Reflex Agents: Maintain a simplified representation of system state and adapt actions accordingly. These are rational agents, but they are limited to specific tasks, sometimes using machine learning techniques to address more complex problems.

- Goal-Based Agents: Act to achieve specific objectives, such as completing a financial transaction or updating records. These AI Agents analyze a variety of situational variables and adjust their agent function and intents per the stated goal. These are highly useful in more complex environments, especially when they leverage external tools or other external systems outside of the agentic control schema.

- Utility-Based Agents: Evaluate multiple possible actions against a utility function to choose the most valuable outcome. These are a bit more flexible compared to other autonomous agents in terms of finding the “optimal outcome”, as they are designed to reason through what serves the best utility for a given goal. This allows them to act autonomously as human agents might.

- Learning Agents: Continuously refine their internal model through feedback and reinforcement, improving performance over time. Many of these agents rely on Large Language Models (LLMs) working with custom training sets that can be updated, folding what the agents learn back into a more cohesive and intelligent generative AI solution. In many cases, these systems are composed of hierarchical agents operating in dynamic environments, adapting over time to new information and context to create more proactive and effective systems.
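To make the distinction between agent types concrete, here is a minimal Python sketch of the two ends of the list above: a simple reflex agent driven by condition-action rules and a utility-based agent that scores candidate actions. The endpoint names, the rule table, and the latency figures are hypothetical and exist only for illustration.

```python
from typing import Callable, Dict, List, Optional


def simple_reflex_agent(status_code: int) -> Optional[str]:
    """Condition-action rules: map an observed status code to the next action."""
    rules: Dict[int, str] = {
        200: "GET /b",  # if endpoint A returned 200, call endpoint B
        429: "WAIT",    # if we were throttled, pause
    }
    return rules.get(status_code)  # None means no rule matched


def utility_based_agent(actions: List[str], utility: Callable[[str], float]) -> str:
    """Score every candidate action and pick the one with the highest utility."""
    return max(actions, key=utility)


# Hypothetical utility: prefer the call with the lowest estimated latency (ms).
estimated_latency_ms = {"GET /cache": 5.0, "GET /db": 40.0, "GET /partner-api": 250.0}
best_action = utility_based_agent(
    list(estimated_latency_ms),
    lambda action: -estimated_latency_ms[action],
)
print(simple_reflex_agent(200), "|", best_action)
```

The reflex agent always produces the same call sequence for the same responses, while the utility-based agent can change its choice whenever its estimates change. That difference is one reason agent traffic is harder to predict than a fixed script.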

These agents can exist as individual systems, but the most effective AI-driven system coordinates and connects multiple AI agents into a unified agent program, where different agents collaborate or compete to fulfill broader goals.

Some good examples of this approach include self-driving car fleets communicating with traffic management APIs, as well as customer management systems where chatbots and recommendation engines interact with personalization endpoints.

Key Differences Between Human Traffic and Artificial Intelligence Traffic

While this is all well and good, the main question here is around API traffic. So what does agentic traffic look like, and how does it compare to human traffic? AI-driven traffic diverges from human patterns in several critical ways:

- High Request Volume: Agents can generate thousands of requests per second, far exceeding the typical human usage rate. This high request volume can also be quite opaque, originating from many agents while seemingly coming from just a single request entry point and user identity.

- Bursty Behavior: Multiple agents may sync on external signals (e.g., market data, stock price changes), leading to sudden request spikes.

- Non-Uniform Patterns: Agents may loop through endpoints in non-linear sequences, testing edge cases that humans rarely trigger.

- Dynamic Adaptation: Learning agents adjust strategies in real time, reacting to API responses and adapting request flows continuously.

- Cross-Service Workflows: Agents often span several microservices and third-party APIs, creating complex dependencies and integration points. In these scenarios, agents frequently interact with multiple APIs, which can result in intricate dependencies that require robust management solutions.
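The gap between steady human traffic and synchronized agent traffic is easy to see in a small simulation. The sketch below is illustrative only: the request counts, the 60-second window, and the 0.5-second reaction time are made-up numbers, not measurements.

```python
import random
from collections import Counter
from typing import List


def peak_requests_per_second(timestamps: List[float]) -> int:
    """Bucket timestamps into one-second windows and return the busiest count."""
    buckets = Counter(int(t) for t in timestamps)
    return max(buckets.values())


rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical human traffic: 600 requests spread across one minute.
human = [rng.uniform(0.0, 60.0) for _ in range(600)]

# Hypothetical agent traffic: 600 agents react within 0.5 s of a signal at t=30.
agents = [30.0 + rng.uniform(0.0, 0.5) for _ in range(600)]

print("human peak rps:", peak_requests_per_second(human))
print("agent peak rps:", peak_requests_per_second(agents))
```

Both workloads send the same total number of requests, yet the agent workload lands entirely inside a single second. Average-rate capacity planning hides exactly this kind of spike.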

REST APIs are often well suited to high volumes and diverse patterns of agent-driven traffic, including scenarios involving multiple APIs, because their stateless design makes them easier to scale horizontally.

These characteristics introduce challenges around rate limiting, authentication, data privacy, and error handling that cannot be fully addressed by conventional performance tests or manual QA. Multi-agent systems can bundle these challenges together, deploying agents and entering the data system in ways that might seem random or overly complex due to the obfuscation of both the AI models and their goals.

Understanding APIs

APIs, or Application Programming Interfaces, are the backbone of modern software systems, enabling seamless communication and data exchange between different applications and services. In the software development process, APIs play a crucial role by allowing developers to connect disparate software services, automate workflows, and build scalable, interoperable systems. By exposing well-defined interfaces, APIs make it possible for software systems to share data and functionality, driving innovation and efficiency across industries.

For developers, understanding how APIs work is essential. APIs define the rules and protocols for how software components interact, making it easier to integrate new features, connect with external systems, and deliver robust solutions that meet evolving business needs. As software systems become more complex, the importance of APIs in orchestrating data flows and service interactions only continues to grow.

API Fundamentals and Modern API Ecosystems

At their core, APIs are defined by a set of fundamental principles that guide their design, documentation, and usage. Every API consists of endpoints—specific URLs or addresses where requests are sent and responses are received. These endpoints define how clients interact with the API, specifying the types of requests (such as GET, POST, PUT, DELETE) and the expected response formats, often in JSON or XML.

Modern web APIs have become the standard for enabling communication between clients and servers, typically using HTTP or HTTPS protocols. RESTful APIs and GraphQL APIs are especially popular due to their flexibility, scalability, and ease of integration. REST APIs organize resources and actions in a predictable way, while GraphQL allows clients to request exactly the data they need, reducing overhead and improving performance.

Comprehensive API documentation is a key feature of successful API ecosystems. Good documentation provides developers with clear instructions on how to use each endpoint, what parameters are required, and what responses to expect. This not only accelerates the development process but also reduces errors and ensures that APIs can be easily adopted and maintained over time.

Why APIs Are Attractive Targets for AI Agents

APIs are particularly appealing to AI agents because they offer a standardized, programmatic way to interact with software systems and services. Through APIs, AI agents can capture traffic, analyze real-world conditions, and replay traffic to test how systems respond under various scenarios. This ability to interact directly with APIs allows AI agents to automate a wide range of tasks, from simple data retrieval to orchestrating complex workflows across multiple services.

By leveraging APIs, AI agents can identify patterns in user behavior, optimize system performance, and adapt to changing user expectations. The captured traffic provides valuable insights into how systems behave in real-world usage, enabling AI agents to make informed decisions and continuously improve their capabilities. As a result, APIs serve as a critical bridge between AI agents and the broader ecosystem of software systems, unlocking new possibilities for automation, analysis, and intelligent decision-making.

Real-World Applications of AI Agents

AI agents are making a tangible impact across a wide range of industries, transforming how organizations deliver services and interact with users. In the real world, these agents are deployed to automate routine tasks, provide personalized recommendations, and deliver real-time support, all while learning from past interactions to enhance their effectiveness.

The ability of AI agents to adapt to different environments and respond to user needs in real time makes them invaluable in sectors where agility and responsiveness are essential. Whether it’s streamlining customer service operations, optimizing logistics, or supporting decision-making in complex environments, AI agents are helping organizations unlock new levels of efficiency and user satisfaction.

Industry Use Cases and Success Stories

Industry leaders across healthcare, finance, and other sectors are harnessing the power of AI agents to drive innovation and improve outcomes. In healthcare, AI agents analyze real production traffic—such as patient data and clinical workflows—to identify health risks and recommend personalized treatments. Financial institutions use AI agents to monitor production traffic for signs of fraud, manage complex investment portfolios, and provide tailored financial advice to users.

These success stories highlight how AI agents, when trained on real world data and integrated with business processes, can handle complex tasks that were once the domain of human experts. By capturing and replaying production traffic, organizations can ensure their AI agents are prepared to meet user expectations, maintain high system performance, and deliver reliable, high-quality services. The result is a new standard for efficiency, accuracy, and user satisfaction across industries.

Risks of APIs Unprepared for the Agent AI Revolution

Now that we understand how this traffic pattern differs, let’s examine what unprepared APIs might encounter in this ongoing data access and agentive revolution. As part of a comprehensive software testing strategy, performance testing—including stress testing and load testing—is essential to simulate real-world conditions, identify bottlenecks, and address potential vulnerabilities before deployment.

Performance Bottlenecks and Cost Overruns

Without realistic testing, bursty AI traffic can overwhelm infrastructure, causing:

- Thundering Herd Effects: Thousands of agents hitting the same endpoint simultaneously, leading to cascading failures. This is especially true when agents aren’t aware of other agents in the flow, resulting in poor cache hit rates, overloaded load balancers, and duplicate yet semi-randomized behavior patterns (e.g., repeatedly hitting the same endpoints while requesting different information).

- Auto-Scaling Delays: Cloud environments may take minutes to add capacity, during which latency and error rates spike. Custom agents might be more focused on accomplishing their assigned tasks than on giving clear signals, so once they complete those tasks, you might be left with over-provisioned infrastructure facing a steep drop in traffic, with the scaling costs already incurred.

- Unexpected Cost Spikes: Pay-as-you-go infrastructures bill per request and bandwidth, resulting in significant unbudgeted expenses when you don’t expect AI agents to hit the network so voraciously.
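A back-of-the-envelope calculation shows why a scale-up delay of a couple of minutes matters. The figures below (burst size, provisioned capacity, scale-up time) are hypothetical inputs chosen for the arithmetic, not benchmarks.

```python
def backlog_during_scale_up(burst_rps: int, capacity_rps: int, scale_up_s: int) -> int:
    """Requests that arrive but cannot be served while new capacity spins up."""
    excess_rps = max(0, burst_rps - capacity_rps)
    return excess_rps * scale_up_s


# Hypothetical numbers: a 5,000 rps agent burst against 1,000 rps of capacity,
# with autoscaling taking 120 seconds to bring new instances online.
backlog = backlog_during_scale_up(burst_rps=5_000, capacity_rps=1_000, scale_up_s=120)
print(backlog)  # 480000 requests queued, shed, or timed out
```

Under these assumptions, nearly half a million requests have nowhere to go before the first new instance is ready. This is why the burst has to be absorbed by queues, limits, or pre-provisioned headroom, not by autoscaling alone.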

Security Vulnerabilities

While performance and cost overruns are significant issues, agent-driven traffic can also expose critical security weaknesses:

- Authentication Bypass: Automated workflows may inadvertently expose endpoints that lack proper token validation. An agent’s ability to ascertain the intent of the developer is typically secondary to accomplishing their goal, so limits and restrictions that might be inferred by human agents are often ignored by agentic implementations.

- Privilege Escalation: Complex workflows can circumvent role-based access controls if not thoroughly tested and reviewed. Building AI agents requires a significant amount of abstraction and reasoning, and this abstraction can lead to unintentional escalation. On top of that, there is the very real risk of adversarial AI implementations exploiting this exact weakness.

- Data Exfiltration: Malicious or misconfigured agents could extract sensitive data at scale. Using AI Agents for malicious purposes is a growing cottage industry. While we consider our current AI-to-API flow in terms of welcoming users, we can’t forget that many unwelcome users also represent significant risks to our systems.

- Rate-Limit Evasion: Agents can fine-tune request timing to evade rate limits, launching stealthy attacks or circumventing limitations. Model-based agent workflows are much more concerned with reaching a stated goal or delivering on a stated metric - at best, these systems might be unaware of your limits and accidentally push too far, and at worst, they might actively circumvent whatever they can to get the job done.

Reliability and Data Integrity Issues

Complex agent interactions can degrade reliability and data integrity:

- Race Conditions: Simultaneous requests may trigger update conflicts in databases or versioning systems.

- State Inconsistencies: Asynchronous agents may leave systems in inconsistent states if error paths aren’t handled.

- Incomplete Workflows: Agents expecting specific response formats or status codes may break when APIs evolve.
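One common guard against the race conditions described above is optimistic concurrency control: each write states which version it was based on, and stale writes are rejected. The following is a minimal in-memory sketch of that idea; `VersionedStore`, `Record`, and the `inventory:42` key are hypothetical names, and a real service would typically enforce the version check in the database or through HTTP preconditions.

```python
import threading
from dataclasses import dataclass
from typing import Dict


class VersionConflict(Exception):
    """Raised when a write is based on a stale read."""


@dataclass(frozen=True)
class Record:
    value: int
    version: int = 0


class VersionedStore:
    def __init__(self) -> None:
        self._records: Dict[str, Record] = {}
        self._lock = threading.Lock()

    def create(self, key: str, value: int) -> None:
        with self._lock:
            self._records[key] = Record(value)

    def read(self, key: str) -> Record:
        with self._lock:
            return self._records[key]  # frozen dataclass, safe to hand out

    def update(self, key: str, value: int, expected_version: int) -> Record:
        """Apply the write only if nobody else has written since our read."""
        with self._lock:
            current = self._records[key]
            if current.version != expected_version:
                raise VersionConflict(
                    f"{key}: expected v{expected_version}, found v{current.version}"
                )
            updated = Record(value, current.version + 1)
            self._records[key] = updated
            return updated


store = VersionedStore()
store.create("inventory:42", 10)
seen_by_a = store.read("inventory:42")  # two agents read the same state
seen_by_b = store.read("inventory:42")
store.update("inventory:42", seen_by_a.value - 1, seen_by_a.version)  # agent A wins
try:
    store.update("inventory:42", seen_by_b.value - 1, seen_by_b.version)  # B is stale
except VersionConflict as err:
    print("conflict:", err)
```

Agent B has to re-read and retry instead of silently overwriting agent A's update, which keeps the record consistent even when many agents act on the same data at once.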

Simulating AI Agents with Speedscale

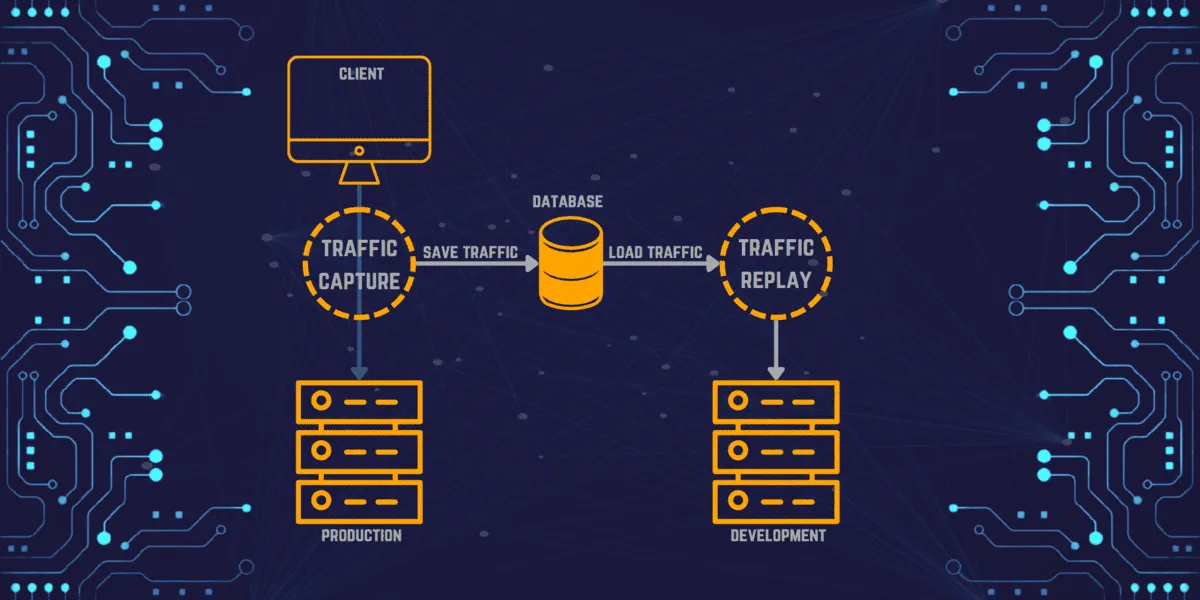

The reality is that this AI traffic is not going away - if anything, it will only grow in the coming years. Accordingly, API providers must proactively adjust their strategies to get ahead of these concerns. Speedscale’s simulation-driven approach enables teams to capture real production traffic and replay it against test environments, which can be used to significant effect to emulate AI agent behavior at scale and before any of the very real AI traffic becomes unmanageable. As a traffic replay tool, Speedscale captures and replays real user traffic for realistic stress testing, helping teams validate system performance under production-like conditions.

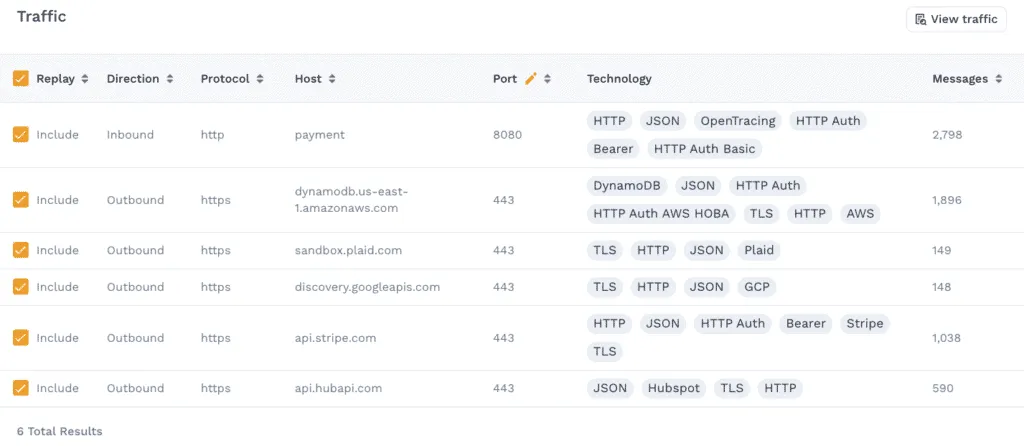

When simulating agentic patterns, Speedscale enables teams to monitor and analyze API calls to identify hidden issues and optimize API management. This approach helps uncover potential bottlenecks and security vulnerabilities before they impact live systems.

Integrating traffic replay and scenario-based testing with broader software testing practices ensures comprehensive validation of system performance and reliability.

Traffic Capture and Replay

Speedscale’s workflow has two distinct phases:

- Capture: Record production or representative agent-driven traffic, including headers, payloads, and timing patterns.

- Replay: Inject the captured traffic into staging or pre-prod environments, preserving business, concurrency, and sequence of calls.

Teams can use the captured data to create realistic test cases that validate system performance under authentic usage patterns.

By replicating realistic agent patterns, such as goal-based loops or model-based decision flows, you can use captured data and replay to reveal hidden performance and security issues before they reach production.
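To illustrate the general idea of timing-preserving replay, here is a small, tool-agnostic Python sketch. It is not Speedscale's API or implementation: `CapturedRequest`, `replay`, and the `speedup` parameter are hypothetical names, and the `send` callable stands in for whatever HTTP client would issue the request against a staging environment.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class CapturedRequest:
    offset_s: float  # seconds since the start of the capture
    method: str
    path: str


def replay(
    requests: Iterable[CapturedRequest],
    send: Callable[[CapturedRequest], None],
    speedup: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Re-issue requests in recorded order, keeping the recorded gaps between them."""
    previous = 0.0
    for req in sorted(requests, key=lambda r: r.offset_s):
        gap = (req.offset_s - previous) / speedup
        if gap > 0:
            sleep(gap)  # wait as long as the original client did (scaled by speedup)
        send(req)
        previous = req.offset_s


captured = [
    CapturedRequest(0.0, "GET", "/accounts/1"),
    CapturedRequest(0.5, "POST", "/transfers"),
    CapturedRequest(0.5, "GET", "/accounts/1"),  # same instant: part of a burst
]
replay(captured, send=lambda r: print(r.method, r.path), speedup=10.0)
```

Preserving the gaps, instead of firing requests at a flat rate, is what keeps bursts and call ordering intact. The `speedup` factor compresses those gaps to push the same pattern harder than it ran in production.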

Here are just a few things that Speedscale can unlock for your services.

Scenario-Based Testing

Define test scenarios for different agent types:

- Routine Tasks: Simulate simple reflex agents performing repetitive data queries or batch updates.

- Complex Workflows: Emulate goal-driven agents orchestrating multi-step transactions across microservices. Some scenarios may involve interactions with public APIs, which can introduce additional security and access considerations.

- Adaptive Learning: Model agents that alter request rates based on response latencies or error codes, testing feedback loops.

- Multi-Agent Coordination: Replay simultaneous sessions from dozens or hundreds of agents to surface contention problems.

Using real traffic data helps ensure that test scenarios accurately reflect actual user behavior and system usage.

These scenarios help engineering teams validate that their APIs handle AI-driven traffic patterns effectively, without relying on manual scripting or brittle test suites.

Security and Compliance Testing

Incorporate security-focused scenarios:

- Unauthorized Access Attempts: Replay expired or malformed tokens from captured sessions to confirm authentication enforcement.

- Edge-Case Data Exposure: Validate that sensitive fields (e.g., PII) remain masked under all request flows.

- Rate-Limit Evasion: Test if agents can bypass thresholds via concurrency or timing variations.
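Timing-based evasion is worth testing because some limiter designs admit far more traffic than their nominal threshold suggests. The sketch below assumes a naive fixed-window counter (a hypothetical `FixedWindowLimiter`, not any particular gateway's implementation) and shows how requests clustered around a window boundary get through at twice the intended rate.

```python
from typing import Optional


class FixedWindowLimiter:
    """Naive limiter: at most `limit` requests per aligned window of `window_s` seconds."""

    def __init__(self, limit: int, window_s: int) -> None:
        self.limit = limit
        self.window_s = window_s
        self._window: Optional[int] = None
        self._count = 0

    def allow(self, now: float) -> bool:
        window = int(now // self.window_s)
        if window != self._window:  # a new window resets the counter
            self._window, self._count = window, 0
        if self._count < self.limit:
            self._count += 1
            return True
        return False


limiter = FixedWindowLimiter(limit=100, window_s=60)

# An agent sends 100 requests in the last second of one window and
# 100 more in the first second of the next.
timestamps = [59.0 + i * 0.01 for i in range(100)] + [60.0 + i * 0.01 for i in range(100)]
accepted = sum(limiter.allow(t) for t in timestamps)
print(accepted)  # 200 accepted in about two seconds, against a limit of 100 per minute
```

A replay scenario that shifts request timing around window boundaries would surface this weakness in staging. Sliding-window or token-bucket limiters are the usual remedies.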

Security testing should also cover different types of APIs, including those based on remote procedure calls (RPC) and simple object access protocol (SOAP), as each presents unique security challenges.

Combining performance and security tests within a single framework ensures a comprehensive assessment of API resilience against AI-driven threats.

Agentic Loop Validation and Testing

If you know that AI traffic is going to hit your service due to your observed traffic patterns, consider implementing A/B testing to figure out the best way to handle that data. The problem with implementing this in production is that AI systems will likely pick up on your changes and adjust their behavior accordingly.

By testing in isolation with observed traffic, you can determine how to abstract these changes and balance them behind the scenes, thereby reducing the observability of your system to AI agents. This approach also allows teams to quickly fix issues identified during stress testing before they impact production systems. From here, you can then test real production traffic against your new designs, and over time, capture more data for even more A/B testing.

This creates a multi-stage testing regimen in which the separation you have made keeps you ahead of the AI agents, without losing any of the clarity that such separation might otherwise cost in a typical production/testing dichotomy.

Best Practices for Agent-Ready APIs

Beyond Speedscale’s solution, there are some effective best practices you can start adopting today to make your services better equipped for the AI revolution. Keep in mind that best practices may vary depending on whether you are working with a web API or other types of APIs.

Design for Burstiness

- Asynchronous Processing: Utilize message queues or event streams to handle non-critical tasks and absorb peaks.

- Circuit Breakers: Implement patterns to fail fast and avoid overloading downstream services.

- Backoff and Retry Logic: Ensure clients (including agents) handle 429/503 codes gracefully with exponential backoff and retry logic.
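On the client side, graceful handling of 429 and 503 responses might look like the sketch below, which uses exponential backoff with full jitter. The function name, defaults, and the injected `send`, `sleep`, and `rng` callables are assumptions made so the example is self-contained; `send` stands in for any function that performs one request and returns its HTTP status code.

```python
import random
import time
from typing import Callable

RETRYABLE = {429, 503}  # throttled or temporarily unavailable


def call_with_backoff(
    send: Callable[[], int],
    max_attempts: int = 5,
    base_delay_s: float = 0.5,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Callable[[], float] = random.random,
) -> int:
    """Call `send` until it returns a non-retryable status or attempts run out."""
    status = send()
    for attempt in range(max_attempts - 1):
        if status not in RETRYABLE:
            break
        # The delay ceiling doubles on each retry: 0.5 s, 1 s, 2 s, ... up to the cap.
        ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
        sleep(rng() * ceiling)  # full jitter spreads retries out across clients
        status = send()
    return status


# Simulated server that throttles twice and then succeeds.
responses = iter([429, 503, 200])
print(call_with_backoff(lambda: next(responses), base_delay_s=0.01))  # 200
```

The jitter matters as much as the exponent: without it, a fleet of agents that were throttled together will retry together and recreate the burst. A production client would normally also honor a `Retry-After` header when the server sends one.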

Strengthen Authentication and Authorization

- Token Scoping: Issue tokens with the minimal scopes required for each agent role (in other words, apply the principle of least privilege).

- Dynamic Rate Limits: Utilize adaptive rate-limiting strategies that are based on client identity and request type.

- Real-Time Monitoring: Alert on anomalous request patterns indicative of misbehaving agents or attacks.
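A rate limit keyed on client identity and request type can be sketched with one token bucket per client, where different request types draw different numbers of tokens. The class names, the cost table, and the rates below are hypothetical, and a production limiter would usually live in a gateway or a shared store instead of process memory.

```python
from typing import Dict

REQUEST_COST = {"GET": 1.0, "POST": 2.0}  # hypothetical: writes cost more than reads


class TokenBucket:
    def __init__(self, rate_per_s: float, burst: float, now: float) -> None:
        self.rate = rate_per_s
        self.capacity = burst
        self.tokens = burst
        self.updated = now

    def allow(self, now: float, cost: float) -> bool:
        # Refill in proportion to elapsed time, never above the burst capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerClientLimiter:
    def __init__(self, rate_per_s: float, burst: float) -> None:
        self._rate = rate_per_s
        self._burst = burst
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, method: str, now: float) -> bool:
        if client_id not in self._buckets:
            self._buckets[client_id] = TokenBucket(self._rate, self._burst, now)
        cost = REQUEST_COST.get(method, 1.0)
        return self._buckets[client_id].allow(now, cost)


limiter = PerClientLimiter(rate_per_s=1.0, burst=2.0)
decisions = [limiter.allow("agent-a", "GET", now=0.0) for _ in range(3)]
print(decisions)  # [True, True, False]: the burst allowance is spent after two reads
```

Because each client has its own bucket, one noisy agent exhausts only its own allowance. Making the rate or cost table adaptive, for example by tightening it when anomalies are detected, is a natural extension that this sketch does not attempt.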

Implement Observability and Feedback Loops

- Distributed Tracing: Tag agent sessions with unique identifiers to trace cross-service workflows.

- Detailed Logging: Record request and response metadata, including timing, status codes, and payload sizes.

- Performance Dashboards: Monitor latency percentiles, error rates, and throughput under simulated AI load.
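The dashboard metrics above can be computed from raw test results with a few lines of Python. This sketch uses the nearest-rank definition of a percentile and treats status codes of 500 and above as errors. Both choices, along with the function names and the sample data, are assumptions for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def percentile(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(results: List[Tuple[float, int]]) -> Dict[str, float]:
    """`results` holds (latency_ms, status_code) pairs from one test run."""
    latencies = [latency for latency, _ in results]
    errors = sum(1 for _, status in results if status >= 500)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
        "error_rate": errors / len(results),
    }


# Hypothetical run: 99 successful calls taking 1..99 ms, plus one 100 ms failure.
run = [(float(ms), 200) for ms in range(1, 100)] + [(100.0, 503)]
print(summarize(run))
```

Tracking p95 and p99 alongside the median is what exposes bursty agent load, since a short spike can leave the average almost unchanged while the tail latency degrades sharply.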

Automated Testing in CI/CD

- Compliance-as-Code: Define agent scenarios in version-controlled test suites, subject to PR reviews.

- Pre-Deployment Gatekeepers: Block merges when replay tests reveal performance or security regressions.

- Scheduled Regression Tests: Run agent workloads nightly or weekly to catch drift from evolving traffic patterns.
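A pre-deployment gate can be as simple as comparing a replay run against a stored baseline and failing the pipeline when thresholds are exceeded. The sketch below is a generic illustration: the metric names, the 10% latency tolerance, and the 1% error budget are hypothetical, and it does not represent any specific CI system or Speedscale report format.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    max_p95_increase: float = 0.10,
    max_error_rate: float = 0.01,
) -> List[str]:
    """Return human-readable reasons to block a merge; an empty list means pass."""
    problems: List[str] = []
    allowed_p95 = baseline["p95_ms"] * (1 + max_p95_increase)
    if candidate["p95_ms"] > allowed_p95:
        problems.append(
            f"p95 latency {candidate['p95_ms']:.0f} ms exceeds allowed {allowed_p95:.0f} ms"
        )
    if candidate["error_rate"] > max_error_rate:
        problems.append(
            f"error rate {candidate['error_rate']:.2%} exceeds budget {max_error_rate:.2%}"
        )
    return problems


# Hypothetical metrics from a baseline run and from the branch under review.
baseline = {"p95_ms": 200.0, "error_rate": 0.002}
candidate = {"p95_ms": 250.0, "error_rate": 0.002}

problems = find_regressions(baseline, candidate)
exit_code = 1 if problems else 0  # a CI step would pass this to sys.exit()
for problem in problems:
    print("BLOCKED:", problem)
```

Keeping the thresholds in version control next to the scenarios means a change to the performance budget goes through the same PR review as a change to the code.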

Conclusion

AI agents represent a powerful evolution in how applications interact with APIs, but their unpredictable and bursty nature introduces unique risks. Traditional performance tests and security audits often fall short when faced with adaptive, multi-agent traffic patterns.

Speedscale bridges this gap by enabling the realistic capture and replay of AI-driven workloads, scenario-based testing, and integrated security validations. By designing APIs to handle agentic bursts, strengthening authentication, and embedding simulation tests into CI/CD pipelines, teams can ensure reliability, maintain data integrity, and optimize costs.

Are you ready for AI agents to test your API’s mettle? Start simulating agentic traffic with Speedscale today and safeguard your services against the next frontier of automated interactions!